My first op-ed in @WIRED: how the AI feedback loops I helped build at YouTube can amplify our worst inclinations, and what to do about it.

wired.com/story/the-toxi…

1/

YouTube bans sexual videos. What happened?

2/

3/

nytimes.com/2019/06/03/wor…

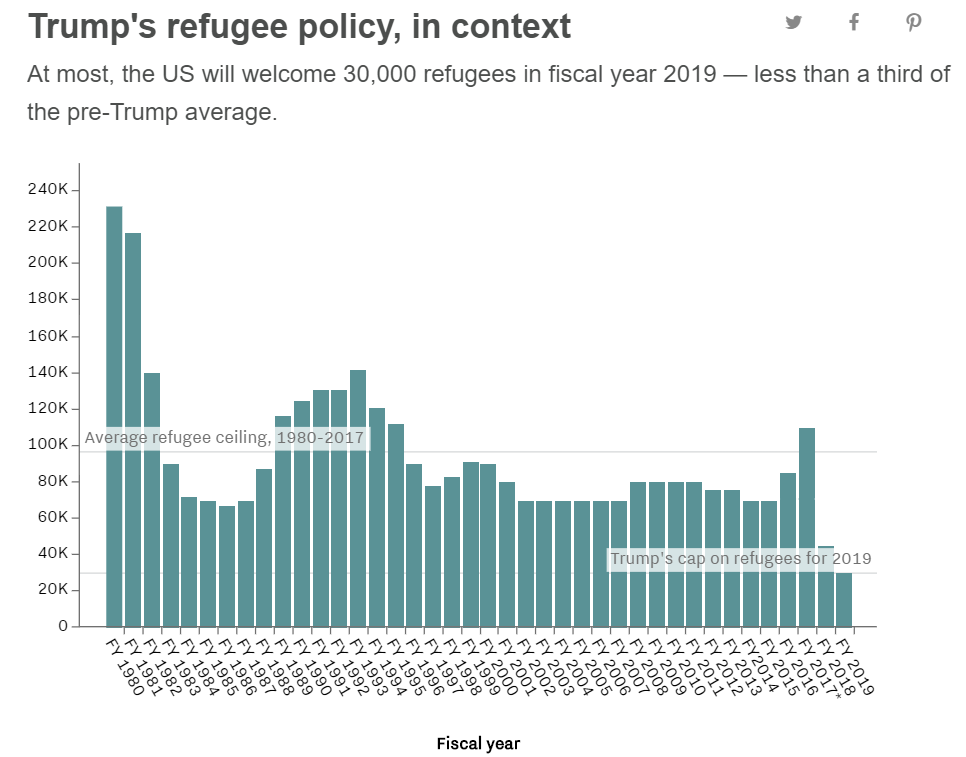

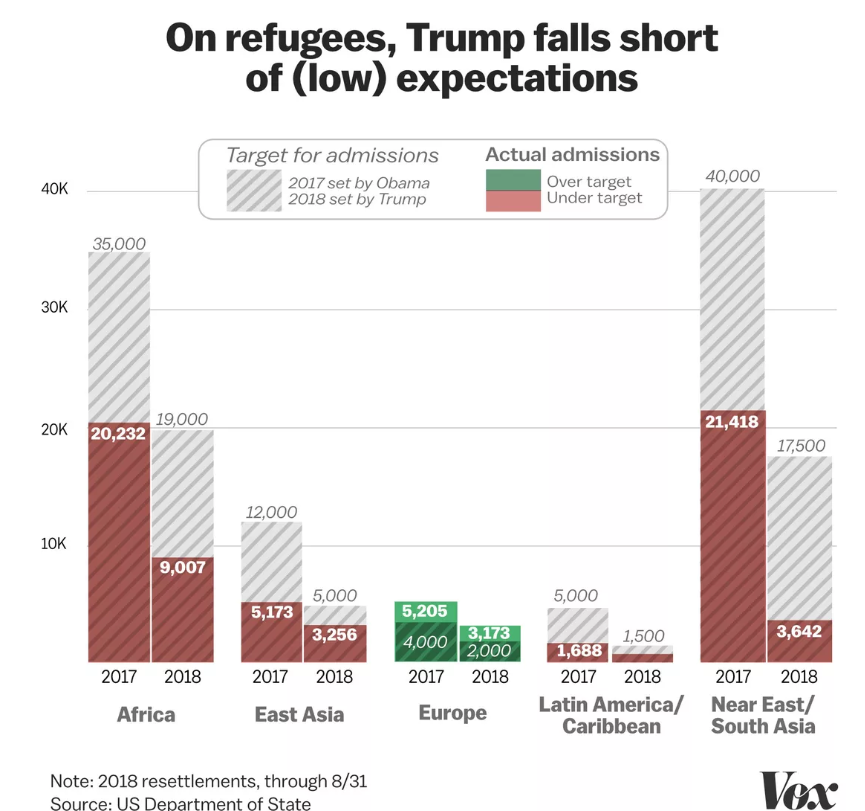

Let's take a look at the big picture. 5/

Are these feedback loops content-neutral? 6/

1) The type of content that hyper-engaged users like gets more views

2) Then it gets recommended more, since the AI maximizes engagement

3) Content creators will notice and create more of it

4) People will spend even more time on it

7/
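The four-step loop above can be illustrated with a toy simulation. This is a minimal Python sketch under hypothetical assumptions, not YouTube's actual system. It uses two content categories, with "divisive" content whose hyper-engaged viewers watch twice as long per view. The recommender reallocates its slots in proportion to the previous round's watch time, a crude stand-in for engagement maximization. Creators shift production toward whatever got more views. The function name `simulate` and all parameter values are invented for illustration.

```python
from typing import Dict, List, Optional

# Toy model of the engagement feedback loop. Every number and rule here is a
# hypothetical assumption for illustration, not YouTube's actual recommender.


def simulate(rounds: int = 10,
             minutes_per_view: Optional[Dict[str, float]] = None,
             creator_adaptation: float = 0.2) -> List[float]:
    """Return the share of recommendation slots given to 'divisive' content after each round."""
    if minutes_per_view is None:
        # Assumption: hyper-engaged viewers of divisive content watch twice as long per view.
        minutes_per_view = {"divisive": 2.0, "neutral": 1.0}

    supply = {"divisive": 0.5, "neutral": 0.5}     # share of uploads per category
    rec_share = {"divisive": 0.5, "neutral": 0.5}  # share of recommendation slots
    history: List[float] = []

    for _ in range(rounds):
        # Views depend on how often a category is recommended and how much of it exists
        # (a simplifying assumption).
        views = {c: rec_share[c] * supply[c] for c in supply}
        # Time spent = views x minutes per view; hyper-engaged users contribute more.
        watch = {c: views[c] * minutes_per_view[c] for c in supply}
        # The recommender moves its slots toward whatever earned the most watch time.
        total_watch = sum(watch.values())
        rec_share = {c: watch[c] / total_watch for c in supply}
        # Creators notice which category gets views and produce more of it.
        total_views = sum(views.values())
        for c in supply:
            target = views[c] / total_views
            supply[c] += creator_adaptation * (target - supply[c])
        history.append(rec_share["divisive"])
    return history


if __name__ == "__main__":
    for i, share in enumerate(simulate(rounds=5), start=1):
        print(f"round {i}: divisive share of recommendations = {share:.3f}")
```

With these defaults, the divisive share of recommendations rises from 0.5 to about 0.67, 0.80 and 0.90 over the first three rounds. Giving both categories equal minutes per view keeps it at 0.5. The result is only qualitative: under these assumptions, whichever content draws hyper-engaged users gets amplified.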

Some of our worst inclinations, such as misinformation, rumors, and divisive content, generate hyper-engaged users, so they often get *favored* by the AI. 9/

Justin Amash said "Our politics is in a partisan death spiral". Is this "death spiral" good for engagement? Certainly: partisans are hyper-active users. Hence, they benefit from massive AI amplification. 10/

washingtonpost.com/opinions/justi…

11/

facebook.com/notes/mark-zuc…

15/

But part of the reason people click on this content is that they trust @YouTube. 16/

theguardian.com/commentisfree/… 20/

Users:

=> Stop trusting Google/YouTube blindly

Their AI works in your best interest only if you want to spend as much time as possible on the site. Otherwise, it may work against you, making you waste time or manipulating you. 21/

Platforms:

=> Be more transparent about what your AI decides

=> Align your "loss function" with what users really want, not pure engagement 22/
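To make the "loss function" point concrete, here is a minimal Python sketch of a ranking objective that blends predicted engagement with a predicted satisfaction signal. Everything in it is hypothetical: the `Candidate` fields, the idea of a 0-to-1 satisfaction score (for example, from asking users whether a video was worth their time), the example titles and numbers, and the linear blend itself, which is only one simple choice among many.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical ranking objective: all fields, weights and example values are
# illustrative assumptions, not any platform's real model.


@dataclass
class Candidate:
    title: str
    predicted_watch_minutes: float  # engagement prediction
    predicted_satisfaction: float   # hypothetical 0-1 "was this worth my time?" prediction


def score(c: Candidate, satisfaction_weight: float, max_minutes: float) -> float:
    """Linear blend of normalized engagement and predicted satisfaction.

    satisfaction_weight = 0 is a pure-engagement objective; larger values
    give more weight to what users say they actually want.
    """
    engagement = c.predicted_watch_minutes / max_minutes  # scaled to [0, 1]
    return (1 - satisfaction_weight) * engagement + satisfaction_weight * c.predicted_satisfaction


def rank(candidates: List[Candidate], satisfaction_weight: float) -> List[str]:
    """Return candidate titles, best first, under the chosen objective."""
    max_minutes = max(c.predicted_watch_minutes for c in candidates)
    ordered = sorted(candidates,
                     key=lambda c: score(c, satisfaction_weight, max_minutes),
                     reverse=True)
    return [c.title for c in ordered]


if __name__ == "__main__":
    videos = [
        Candidate("sensational rumor", 30.0, 0.2),
        Candidate("news explainer", 18.0, 0.7),
        Candidate("how-to tutorial", 12.0, 0.9),
    ]
    print(rank(videos, satisfaction_weight=0.0))  # pure engagement
    print(rank(videos, satisfaction_weight=0.7))  # satisfaction-weighted
```

With a weight of 0, the rumor video ranks first; at 0.7 the ordering flips and it ranks last. Choosing the weight, and measuring satisfaction reliably, are the hard parts this sketch leaves open.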

Regulators:

=> Create a special legal status for algorithmic curators

=> Demand some level of transparency for recommendations. This would help us understand the impact of AI, and boost competition & innovation

IBM advocated for legislation:

23/