1/ I'm very happy to give a little thread today on our paper accepted at ICLR 2021!

🎉🎉🎉

In this paper, we show how to build ANNs that respect Dale's law and which can still be trained well with gradient descent. I will expand in this thread...

openreview.net/forum?id=eU776…

2/ Dale's law states that neurons release the same neurotransmitter from all of their axonal terminals.

en.wikipedia.org/wiki/Dale%27s_…

Practically speaking, this implies that a neuron's outputs are either all excitatory or all inhibitory. It's not 100%, nothing is in biology, but it's roughly true.

3/ You may have wondered, "Why don't more people use ANNs that respect Dale's law?"

The rarely discussed reason is this:

When you try to train an ANN that respects Dale's law with gradient descent, it usually doesn't work as well -- worse than an ANN that ignores Dale's law.

4/ In this paper, we try to rectify this problem by developing corrections to standard gradient descent to make it work with Dale's law.

Our hope is that this will allow people in the Neuro-AI field to use ANNs that respect Dale's law more often, ideally as a default.

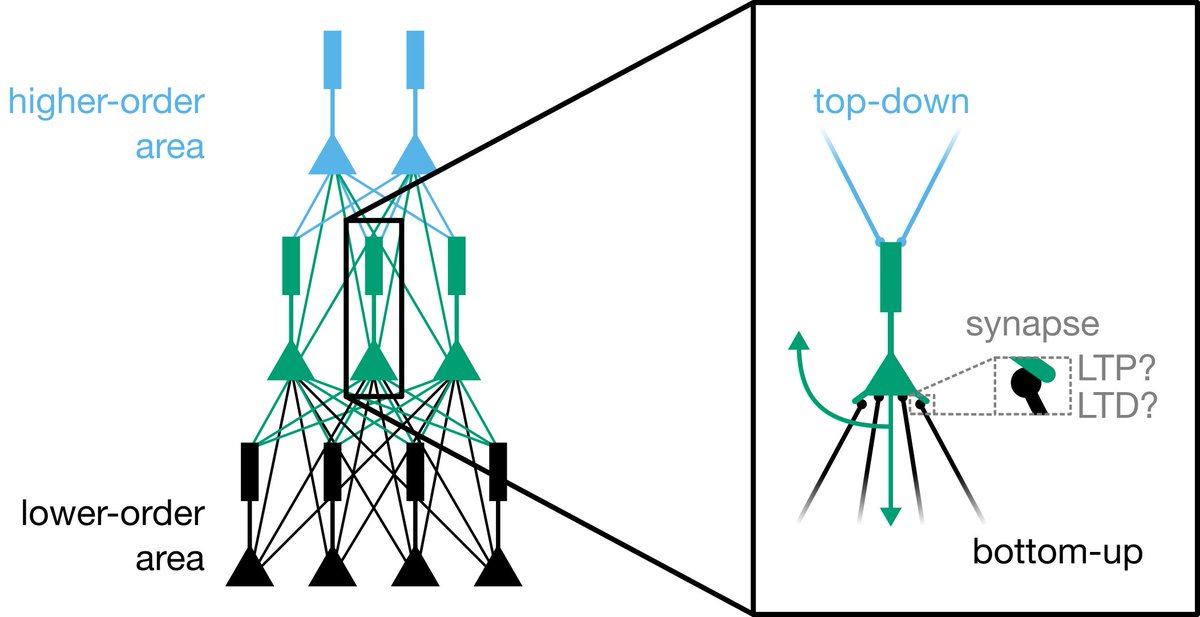

5/ To begin with, we assume we are dealing with feed-forward inhibitory interneurons, so that inhibitory units receive inputs from the layer below and project within the layer. Note, though, that all of our results can generalise to recurrent inhibition using an unrolled RNN.
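To make the wiring in 5/ concrete, here is a minimal sketch of one such layer in plain Python. It is an illustration under assumptions, not the paper's implementation: the names (`dann_layer`, `W_ee`, `W_ie`, `W_ei`), the linear interneurons, the subtractive form of inhibition, and the ReLU are all choices made for this example. Every weight is non-negative, so a unit's sign comes only from its role.

```python
import random

def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu(v):
    return [max(0.0, a) for a in v]

def rand_nonneg(rows, cols, rng, scale=1.0):
    """Matrix of non-negative weights; sign is set by the unit's role, not the weight."""
    return [[rng.random() * scale for _ in range(cols)] for _ in range(rows)]

def dann_layer(x, W_ee, W_ie, W_ei):
    """One layer with feed-forward inhibition (hypothetical names, assumed form).

    x    : non-negative activity from the layer below
    W_ee : layer below -> excitatory units
    W_ie : layer below -> inhibitory interneurons
    W_ei : interneurons -> excitatory units in the same layer
    """
    h_inh = matvec(W_ie, x)            # interneurons are driven by the layer below
    excitation = matvec(W_ee, x)
    inhibition = matvec(W_ei, h_inh)   # interneurons project within the layer
    z = [e - i for e, i in zip(excitation, inhibition)]  # assumed subtractive
    return relu(z)

rng = random.Random(0)
n_in, n_exc, n_inh = 4, 3, 2
W_ee = rand_nonneg(n_exc, n_in, rng)
W_ie = rand_nonneg(n_inh, n_in, rng)
W_ei = rand_nonneg(n_exc, n_inh, rng, scale=0.1)
x = [1.0, 0.5, 0.0, 2.0]
out = dann_layer(x, W_ee, W_ie, W_ei)
```

Only the excitatory output `out` is passed to the next layer in this sketch; the interneuron activity `h_inh` stays local to the layer.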

6/ To make gradient descent work well in these networks (which we call "Dale's ANNs", or "DANNs") we identify two important strategies.

7/ First, we develop methods for initialising the network so that it starts in a regime that is roughly equivalent to layer norm.

8/ Second, inspired by natural gradients, we use the Fisher Information matrix to show that the interneurons have a disproportionate effect on the output distribution, which requires a correction term to their weight-updates to prevent instability.

9/ When we implement these changes, we can match a standard ANN that doesn't obey Dale's law!

Not so for ANNs that obey Dale's law and don't use these tactics!

(Here are MNIST results, ColumnEI is a simple ANN with columns in the weight matrices forced to be all + or -)
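For readers wondering what "columns forced to be all + or -" can look like in practice, here is a small sketch in plain Python. It assumes one common way to enforce the constraint: take an ordinary gradient step, then clamp any weight that crossed zero. The function names and the clamping rule are assumptions for illustration and may differ from how the ColumnEI baseline was actually trained.

```python
def project_columns(W, signs):
    """Clamp each column of W to its assigned sign.

    W[i][j] is the weight from input unit j to output unit i, so column j
    holds every outgoing weight of unit j. signs[j] is +1 (excitatory) or
    -1 (inhibitory). Wrong-signed entries are set to zero.
    """
    return [
        [max(0.0, w) if s > 0 else min(0.0, w) for w, s in zip(row, signs)]
        for row in W
    ]

def sgd_step(W, grad, signs, lr=0.1):
    """Plain gradient step, then projection back onto the sign constraint."""
    stepped = [
        [w - lr * g for w, g in zip(w_row, g_row)]
        for w_row, g_row in zip(W, grad)
    ]
    return project_columns(stepped, signs)

signs = [+1, +1, -1]          # units 0 and 1 excitatory, unit 2 inhibitory
W = [[0.5, 0.2, -0.3],
     [0.1, 0.4, -0.6]]
grad = [[1.0, -1.0, -5.0],    # made-up gradient for the example
        [6.0, 0.0, 1.0]]
W_new = sgd_step(W, grad, signs)
# W_new[0][2] and W_new[1][0] would have changed sign, so they are clamped to 0.
```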

11/ There are many next steps! But an immediate one will be developing PyTorch and TensorFlow modules with these corrections built in, to make it easy for other researchers to build and train DANNs.

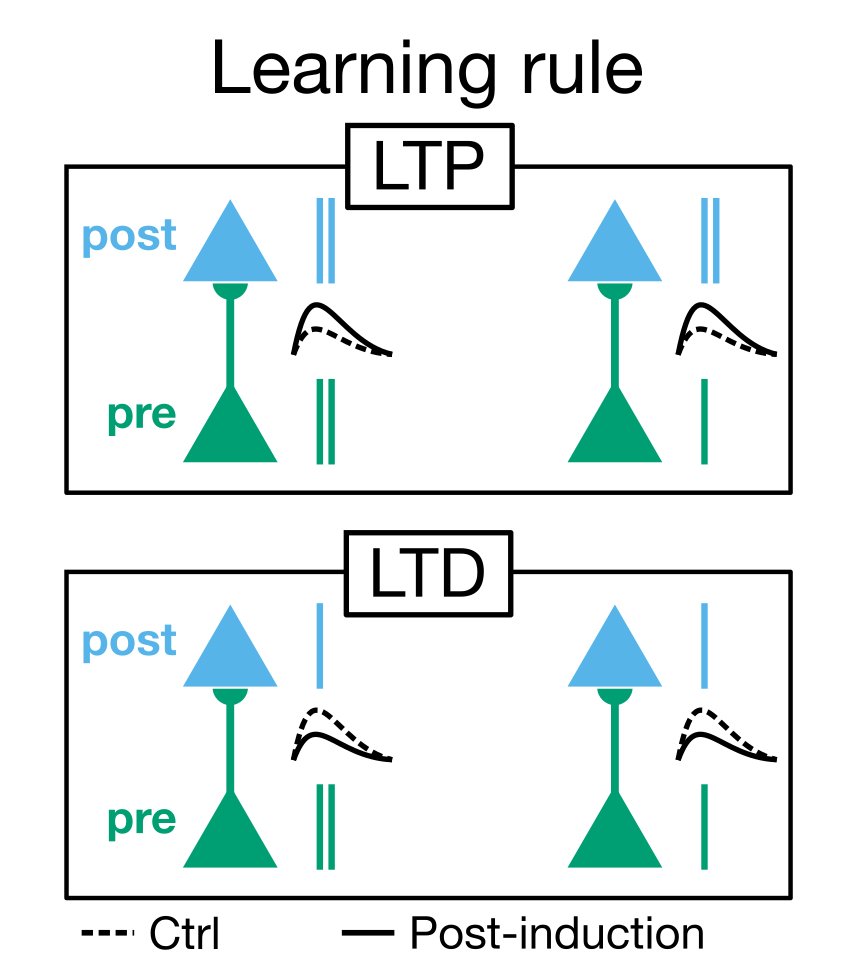

12/ This work also raises interesting Qs about inhibitory plasticity. It can sometimes be tough to induce synaptic plasticity in interneurons. Is that because the brain has its own built-in mechanisms that correct for an outsized impact of interneurons on output behaviour?

13/ Another Q: why does the brain use Dale's law? We were only able to *match* standard ANNs. No one has shown *better* results with Dale's law. So, is Dale's law just an evolutionary constraint, a local minimum in the phylogenetic landscape? Or does it help in some other way?

14/ Recognition where due: this work was led by Jonathan Cornford (left), with important support from Damjan Kalajdzievski (right) and several others.

Thanks everyone for your awesome work on this!

15/ Thanks also to @CIFAR_News, @HBHLMcGill, @IVADO_Qc, @NSERC_CRSNG, @TheNeuro_MNI, and @Mila_Quebec for making this work possible.

16/ Also, thanks to the @iclr_conf organizers and our AC and reviewers! Our reviews were very constructive, and we really appreciated them. #ICLR2021
