Expected value is one of the most fundamental concepts in probability theory and machine learning.

Have you ever wondered what it really means and where it comes from?

The formula doesn't tell the entire story right away.

💡 Let's unravel what is behind the scenes! 💡

First, let's take a look at a simple example.

Suppose that we are playing a game. You toss a coin, and

• if it comes up heads, you win $1,

• but if it is tails, you lose $2.

Should you even play this game with me? 🤔

We are about to find out!

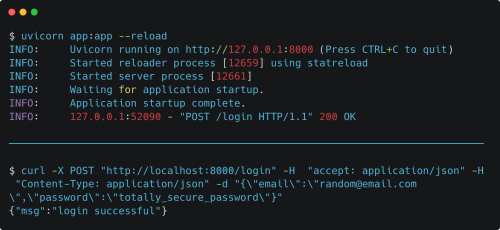

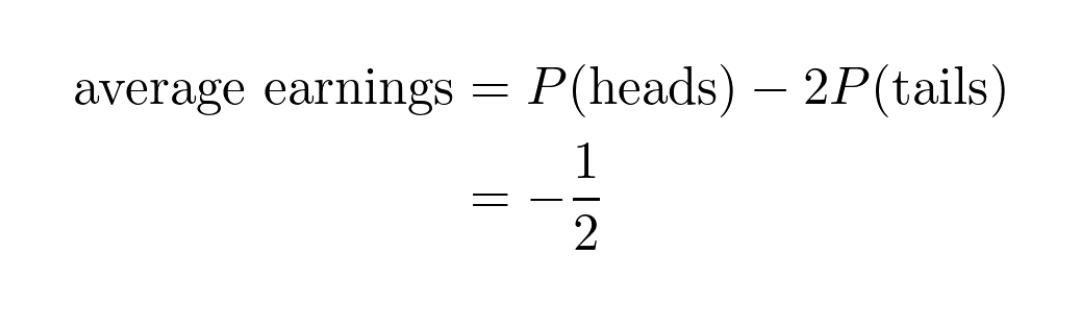

After 𝑛 rounds, your total earnings equal the number of heads times $1 minus the number of tails times $2.

If we divide total earnings by 𝑛, we obtain the average earnings per round.

What happens if 𝑛 approaches infinity? 🤔

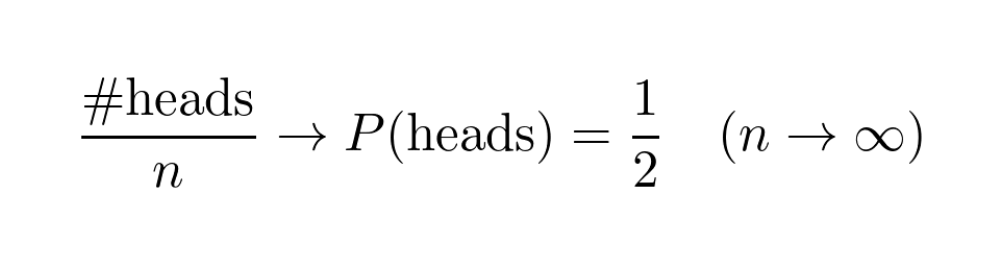

As you have probably guessed, the number of heads divided by the number of tosses will converge to the 𝑝𝑟𝑜𝑏𝑎𝑏𝑖𝑙𝑖𝑡𝑦 of a single toss being heads.

In our case, this is 1/2.

(Similarly, tails/tosses also converges to 1/2.)
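
Written out as a worked equation, the limit can be sketched like this. The labels #heads and #tails are just shorthand introduced here for the number of heads and tails in the first 𝑛 tosses, and the coin is assumed to be fair.

```latex
\lim_{n \to \infty} \frac{1 \cdot \#\text{heads} - 2 \cdot \#\text{tails}}{n}
= 1 \cdot \lim_{n \to \infty} \frac{\#\text{heads}}{n}
  - 2 \cdot \lim_{n \to \infty} \frac{\#\text{tails}}{n}
= 1 \cdot \frac{1}{2} - 2 \cdot \frac{1}{2}
= -\frac{1}{2}
```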

So, your average earnings per round are -1/2. This is the 𝑒𝑥𝑝𝑒𝑐𝑡𝑒𝑑 𝑣𝑎𝑙𝑢𝑒.

By the way, you definitely shouldn't play this game. 😉
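
If you would rather see this than take my word for it, here is a minimal simulation sketch. It assumes a fair coin and uses Python's standard `random` module; the function name `average_earnings` and the fixed seed are made up for this illustration.

```python
import random


def average_earnings(n_rounds, seed=0):
    """Play the coin game n_rounds times and return the average earnings per round."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    total = 0
    for _ in range(n_rounds):
        if rng.random() < 0.5:  # heads: win $1
            total += 1
        else:                   # tails: lose $2
            total -= 2
    return total / n_rounds


# The average should drift toward -0.5 as the number of rounds grows.
for n in (10, 1_000, 100_000):
    print(n, average_earnings(n))
```

With only a handful of rounds the average can land almost anywhere, but with more rounds it should settle close to -1/2.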

💡 How can we calculate the expected value for a general case? 💡

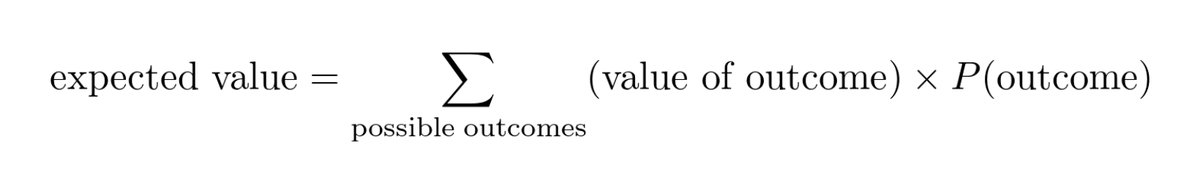

Suppose that, similarly to the previous example, the outcome of your experiment can be quantified. (Like rolling a die or making a bet at the poker table.)

The expected value is just the average outcome per experiment when you repeat it infinitely many times. ♾️🤯

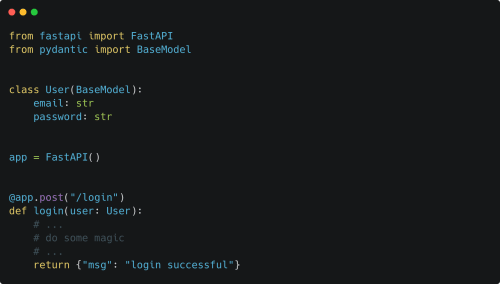

The formula above is simply the expected value in English.

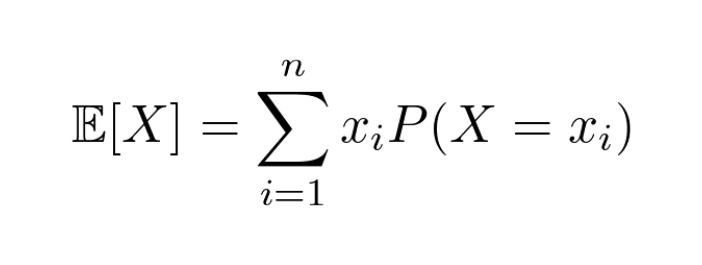

If we formally denote the variable describing the outcome of the experiment with 𝑋 and its possible values with 𝑥ᵢ, we get back the formula in the first tweet.

It looks much easier now, doesn't it?
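
To make this concrete, here is a small sketch that assumes the formula is the usual one for discrete outcomes: multiply each possible value 𝑥ᵢ by its probability and add everything up. The function name `expected_value` and the dictionary layout (value mapped to probability) are choices made for this example only.

```python
import math
from fractions import Fraction


def expected_value(distribution):
    """Return the sum of x * P(X = x) over a dict mapping values to probabilities."""
    total_probability = sum(distribution.values())
    if not math.isclose(total_probability, 1):
        raise ValueError("probabilities must sum to 1")
    return sum(x * p for x, p in distribution.items())


# The coin game: win $1 on heads, lose $2 on tails.
coin_game = {1: Fraction(1, 2), -2: Fraction(1, 2)}
print(expected_value(coin_game))  # -1/2

# A fair six-sided die.
fair_die = {face: Fraction(1, 6) for face in range(1, 7)}
print(expected_value(fair_die))  # 7/2
```

Using `Fraction` keeps the arithmetic exact, so the coin game gives exactly -1/2 instead of a rounded float.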

This concept came up recently when I gave this explanation to a friend.

Inspired by @haltakov and his awesome recent thread (https://twitter.com/haltakov/status/1358852194565558276), I figured this could be interesting for a lot of you!

Next time, I am planning to explain entropy! What do you think?

Update: I have just posted a thread explaining the formula behind entropy, as promised! Check it out!

https://twitter.com/TivadarDanka/status/1360237067826065411
