It takes a single picture of an animal for my son to start recognizing it everywhere.

Neural networks aren't as good at this as we are, but they are good enough to be competitive.

This is a thread about neural networks and bunnies.

🧵👇

A few days ago, I discussed how neural networks identify patterns and use them to extract meaning from images.

Let's start this thread right from where we ended that conversation.

https://twitter.com/svpino/status/1359107240519749637?s=20

(2 / 16)

Let's assume we use these four pictures to train a neural network. We tell it that they all contain a bunny 🐇.

Our hope is for the network to learn features that are common to these images.

(3 / 16)

It's reasonable to think that the network will find the following:

▫️ There's an eye

▫️ There's fur

▫️ There are long ears

The neural network's understanding of a "bunny" will become the combination of "eye + fur + long ears."

(4 / 16)

At this point, I'm obligated to disclose that neural networks don't technically focus on high-level concepts like "eyes" or "ears" because they don't know about such things.

But don't let facts get in the way of a good story.

😉

(5 / 16)

There's something else in these pictures that will likely get picked up by the network.

See all of that green grass?

If these are the only pictures we use to train our network, I'm sure the grass will become a strong indicator of a bunny.

Ridiculous, right?

(6 / 16)

Neural networks don't know better.

They are incapable of focusing on what we know to be relevant.

They generalize by finding common patterns, and we all agree that grass is very prominent in all four pictures.

(7 / 16)

How do we solve this problem?

How do we teach our network to focus on the stuff that matters and discard what doesn't?

One way is by showing it more pictures.

(8 / 16)

What would happen if we now showed the network the attached image?

There's still an eye, fur, and long ears. But there's no grass!

Internally, the network will start giving less importance to the presence of green grass.

(9 / 16)

Four more pictures later, and the grass is probably not a relevant feature anymore.

Most of the images don't have grass or anything green, so the network will learn to ignore those patterns.

The eyes, ears, and fur are still very relevant!

(10 / 16)
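
A toy sketch can make the grass problem concrete. It's a big simplification, not how a real image network works: instead of pixels, each picture is reduced to four hand-labeled yes/no features (eye, fur, long ears, grass), and the "network" is a single logistic-regression unit trained with gradient descent. The feature encoding, the `train` helper, and the four non-bunny examples are all hypothetical; the thread only talks about bunny pictures, but a classifier needs some negatives to learn anything.

```python
import math

# Hypothetical binary features per image: [eye, fur, long_ears, grass].
FEATURES = ["eye", "fur", "long_ears", "grass"]


def train(examples, epochs=2000, lr=0.5):
    """Fit a one-unit logistic-regression 'bunny detector' with batch gradient descent."""
    weights = [0.0] * len(FEATURES)
    bias = 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w = [0.0] * len(FEATURES)
        grad_b = 0.0
        for x, y in examples:
            z = bias + sum(w * xi for w, xi in zip(weights, x))
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of "bunny"
            err = p - y  # gradient of the log loss with respect to z
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        # Step against the average gradient over the whole dataset.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return dict(zip(FEATURES, weights))


# Hypothetical negatives (label 0): not bunnies, and no grass in sight.
not_bunnies = [
    ([1, 1, 0, 0], 0),  # e.g. a cat on a sofa
    ([1, 0, 0, 0], 0),  # e.g. a goldfish
    ([0, 1, 0, 0], 0),  # e.g. a wool rug
    ([0, 0, 0, 0], 0),  # e.g. an empty room
]
# The first four bunny pictures: every one of them shows grass.
bunnies_on_grass = [([1, 1, 1, 1], 1)] * 4
# The grass-free bunny pictures: one, then four more.
bunnies_elsewhere = [([1, 1, 1, 0], 1)] * 5

before = train(bunnies_on_grass + not_bunnies)
after = train(bunnies_on_grass + bunnies_elsewhere + not_bunnies)

for name, w in (("before", before), ("after", after)):
    ratio = w["grass"] / w["long_ears"]
    print(f"{name}: long_ears={w['long_ears']:.2f} grass={w['grass']:.2f} grass/long_ears={ratio:.2f}")
```

Trained on the four grass pictures alone, grass and long ears end up with exactly the same weight: every bunny has both, and no non-bunny has either, so the model has no way to prefer one over the other. After adding the five grass-free bunnies, long ears pull ahead and the grass-to-long-ears ratio drops below 1. Grass still gets some positive weight here because none of the made-up negatives contain grass.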

In this thread, I've been taking advantage of your capacity to recognize high-level concepts to explain how neural networks work.

In reality, there's a lot of variability in "eyes," "ears," and "fur." We need many images to "teach" those concepts to a network.

(11 / 16)

The more specific we want to get with our classification, the harder it will be for the network.

Look at these two pictures. Recognizing the bunny is not hard, but what if we wanted to tell them apart by species?

(12 / 16)

Personally, I don't know whether these bunnies belong to the same species, but assuming they don't, high-level concepts (eyes, ears, fur) will not be enough.

We need more specificity, and our network will require many more images to identify relevant patterns.

(13 / 16)

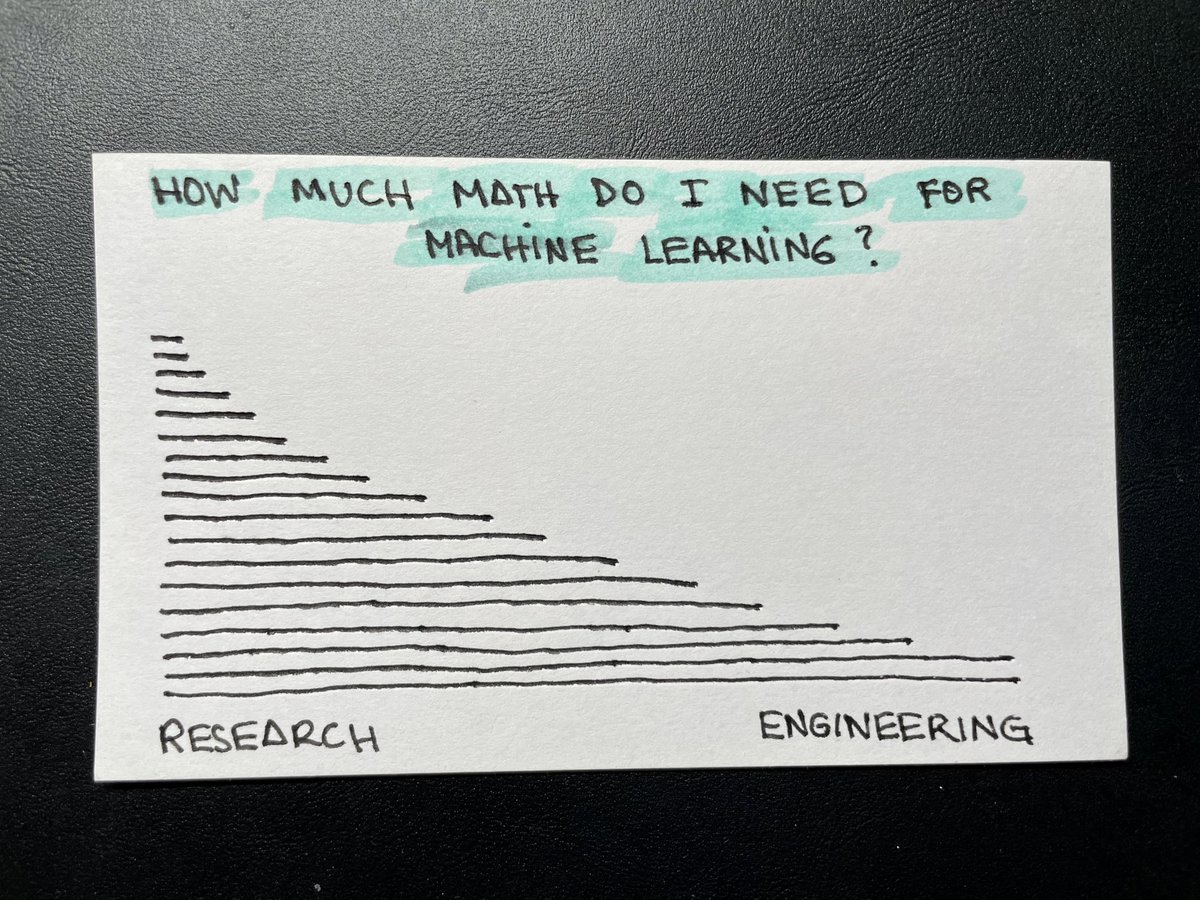

A common question I get:

"How many images do I need?"

At this point, the answer may be obvious: "It depends."

To tell a bunny apart from an elephant, we need fewer images than to classify bunnies by species.

(14 / 16)

The more visually complex your problem is, the more images you'll likely need for the network to generalize well.

Every situation is different.

(15 / 16)

A couple of notes before closing:

1. The goal of this thread is to build intuitions, not to be factually correct about the technical details of how neural networks work.

2. There are different techniques to interpret images. Not all of them require a lot of data.

(16 / 16)

If you enjoy these attempts to make machine learning a little more intuitive, follow me and let me know below what I should be covering in my next thread.
