They’re increasingly being driven around the bend by his refusal to perform an elaborate apology, or even admit error. It’s pretty awesome to watch. One of the guys trying to justice him into submission is an AI higher-up at Chase bank lol.

https://twitter.com/kareem_carr/status/1376159528606130176

As I predicted, the place they’re really pushing back hard is on his claim that the problems are fixable. This claim is a direct threat to the entire AI ethics project so it can’t stand. They’ll keep at him over it.

Here’s what I’m seeing in his responses, though: he’s smart enough to see that the larger points re: societal bias are obvious, & he has enough intersectional armor that he doesn’t have to pretend they’re saying something novel/deep/complex/technical. Greatness.

This is making the weekend of everyone who has been bullied and dogpiled on here for making similar points. Carr’s woke creds are impeccable, he can read the linked papers & understand them, he can spot weak work, & he’s just not playing along. Nobody else has this combo.

There are all kinds of shenanigans going on in his replies & in the field that rely on ppl falling into one of three camps: 1) not sophisticated enough to spot the bait-&-switch, or 2) aligned & therefore unwilling to call it out, or 3) terrified into silence.

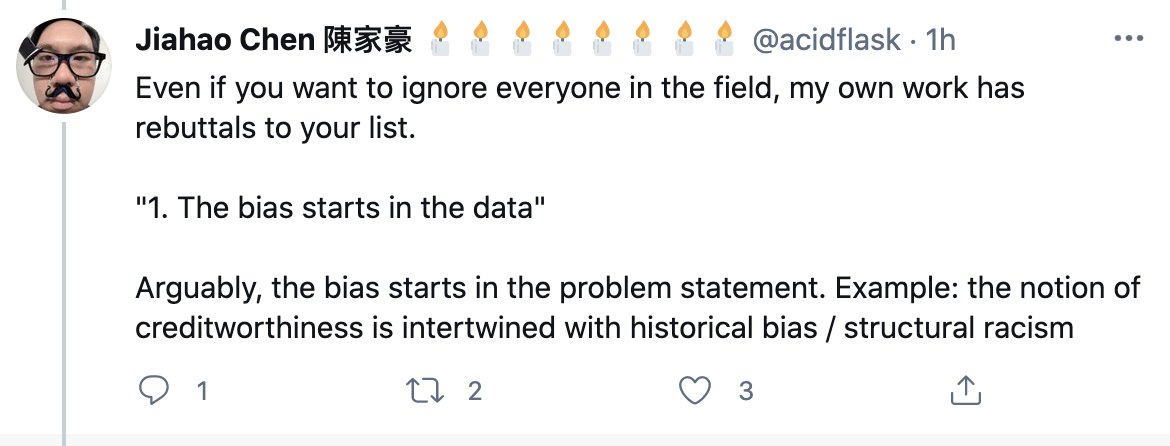

I'm going to risk a block here by taking Chase guy's responses to his thread & showing how the game works. The point that society is biased is obvious, Carr says it's obvious, & it's also not the kind of bias (statistical bias) he's talking about. Next!

https://twitter.com/acidflask/status/1376165270549639169

LOL no. All of the attempts to demonstrate that the algos themselves introduce racism — all of them, without exception — reduce to: algo amplifies signal w/ more representation in the data, & suppresses signal w/ less representation. That's all of it.

https://twitter.com/acidflask/status/1376165593200660480

Anyone who takes the time to read the papers cited knows this. I go into it in detail in my opening newsletter post. Models amplify & suppress, as do maps, sculptures, writing, etc. There's now a cottage industry in mapping this point to different domains.

doxa.substack.com/p/googles-colo…

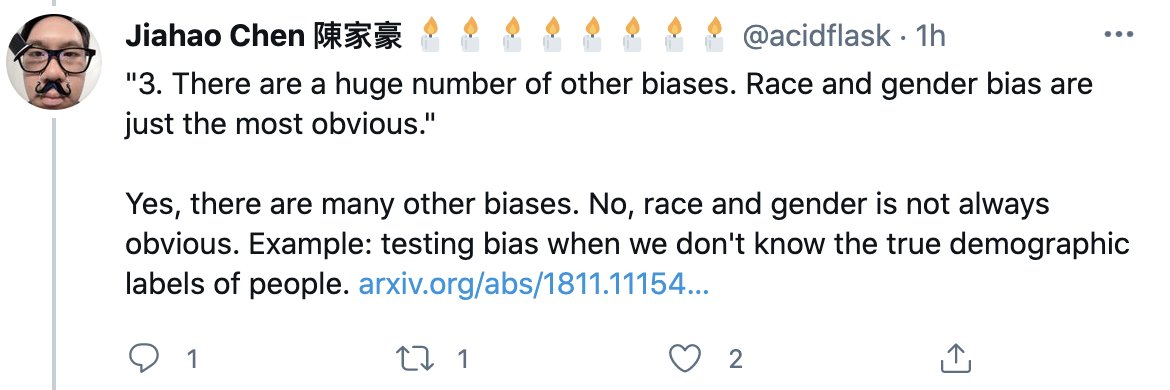

This response is just gold. It's both A) clearly not what Carr meant by "obvious," and B) runs afoul of the fact that this same crowd also argues how problematic it is to label ppl's identity characteristics absent info re: their self-ID.

https://twitter.com/acidflask/status/1376165850982531080

Finally, everyone knows this. Carr surely knows it. It's unfortunate that you can get clout on Twitter & in "AI ethics" generally by repeating it as if it's some devastating & novel insight that few are aware of.

https://twitter.com/acidflask/status/1376166389652860929

Anyway, this is exactly what Chen is doing here from his opening tweet, & Carr just calls him on it directly. You just never see this from someone with Carr's status. Amazing. Inject it straight into my veins.

https://twitter.com/kareem_carr/status/1376184132196306946

WTH man I am now a huge fan of Kareem Carr. We don't actually agree on anything that matters EXCEPT for how to have an argument with other people. But I think that is enough. Actually, maybe that's everything.

Chase guy has now protected his account. That's ok, I have the tweets here. Here are the tweets of his I'm replying to, in the order I'm replying.

This is great. This is exactly how this entire game works.

https://twitter.com/AttilaTheLund/status/1376197941468131333

Ok, the gloves are coming off. Now the same crowd that came for LeCun is openly coming for Carr. As I said, they will not let "it's fixable" stand. As long as Carr stays focused on the substance & on specifics, they can't touch him. It's all a bunch of elaborate derailing tactics.

I had planned to work on something totally different Monday for the next newsletter, but I think I should do a deep dive on how the bias argument is working in the AI ethics field. Because there's a bunch of derailing & misdirection happening.
