I've written a notebook showing three additional functions to help you navigate the @fastdotai source code and potentially save you hours of time: gist.github.com/muellerzr/3302…

1/

Why did I write this? Navigating the fastai source code can be hard sometimes, especially when you're trying to piece together everything added through patch and typedispatch (made harder because typedispatch doesn't show up in the `__all__`!)

So, how does this work? 2/

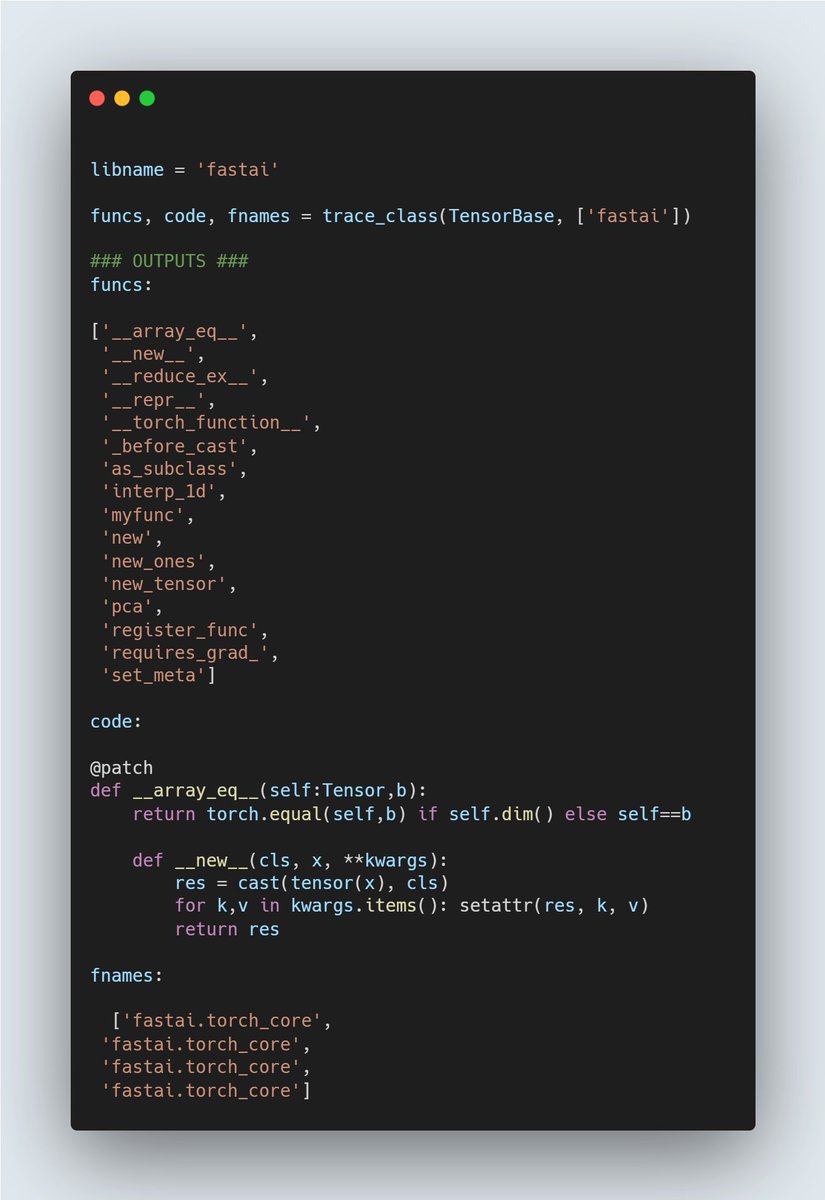

The first function, `trace_class`, will grab all functions in a class up to a particular library. This is helpful in scenarios where a class inherits something (like a torch tensor) but you only care about the super class level (like TensorBase):

3/

This will also grab any patched functions, making it easy to see absolutely every function available, and its source code.
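Here's a rough sketch of that idea using only the standard library. It is not the notebook's implementation: `trace_class_sketch`, `safe_source`, and the `lib` parameter are hypothetical names, and a stdlib logging handler stands in for a fastai class so the example runs without fastai installed.

```python
import inspect
import logging.handlers


def safe_source(obj):
    """Return the source of obj, or a placeholder if it cannot be read."""
    try:
        return inspect.getsource(obj)
    except (OSError, TypeError):
        return "<source unavailable>"


def trace_class_sketch(cls, lib):
    """Map function name -> source for classes in the MRO that live under `lib`."""
    found = {}
    for klass in cls.__mro__:  # most-derived class first
        module = klass.__module__
        if module != lib and not module.startswith(lib + "."):
            continue  # this level belongs to another library, skip it
        for name, attr in vars(klass).items():
            # plain functions only; the first (most-derived) definition wins
            if inspect.isfunction(attr) and name not in found:
                found[name] = safe_source(attr)
    return found


# RotatingFileHandler lives in logging.handlers but inherits from classes in logging
methods = trace_class_sketch(logging.handlers.RotatingFileHandler, "logging.handlers")
for name in sorted(methods):
    print(name)
```

Because it reads `vars(klass)`, anything attached to the class after it was created (which is what a patch does) shows up alongside the functions written in the class body.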

Next we have `trace_func`, which is similar to `trace_class` but just grabs the source code for a single function, along with its stem:

4/

Now, why this particular one? It's just a handy convenience function I've found helpful and use pragmatically.
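A minimal sketch of what a helper like this could look like, again stdlib only. `trace_func_sketch` is a hypothetical name, and it reads "stem" as the dotted module path the function lives in, which is an assumption about the notebook rather than something the thread spells out.

```python
import inspect
import textwrap


def trace_func_sketch(func):
    """Return (dotted location, source text) for a plain Python function."""
    # Assumption: "stem" means module path plus qualified name
    stem = f"{func.__module__}.{func.__qualname__}"
    try:
        source = inspect.getsource(func)
    except (OSError, TypeError):
        # builtins and C extensions have no Python source to show
        source = "<source unavailable>"
    return stem, source


stem, source = trace_func_sketch(textwrap.dedent)
print(stem)
print(source)
```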

Finally, we have `trace_dispatch`. TypeDispatch functions are a bit more of a headache, as there are multiple versions of the "same" source code. 5/

So what do we do? We grab all of them, letting you see the source code for every input as it changes, and fully see its behavior in one place:
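To illustrate the "grab all of them" idea without fastai, here's a sketch that swaps in the standard library's `functools.singledispatch` for fastai's typedispatch. The two are different mechanisms, so treat this as an analogy only; `trace_dispatch_sketch` and `describe` are hypothetical names.

```python
import functools
import inspect


@functools.singledispatch
def describe(x):
    return "something"


@describe.register(int)
def _(x):
    return "an int"


@describe.register(list)
def _(x):
    return "a list"


def trace_dispatch_sketch(generic):
    """Map each registered type name -> source of the version it dispatches to."""
    sources = {}
    # singledispatch keeps its implementations in a type -> function registry
    for typ, impl in generic.registry.items():
        try:
            sources[typ.__name__] = inspect.getsource(impl)
        except (OSError, TypeError):
            sources[typ.__name__] = "<source unavailable>"
    return sources


versions = trace_dispatch_sketch(describe)
for type_name, src in versions.items():
    print(f"--- {type_name} ---")
    print(src)
```

Each entry is one version of the "same" function, keyed by the input type that triggers it, so every variant can be read side by side.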

Hope this helps some folks when it comes to debugging and examining how fastai's source code looks :)
