I agree that a single deep learning network can only interpolate. But a GAN or a self-play network can obviously extrapolate. The question, though, is where does the ingenuity come from? medium.com/intuitionmachi…

I'm actually very surprised that @ecsquendor, who has good videos summarizing the state of understanding in deep learning, is fawning over @fchollet's ideas. I'm perplexed by what Chollet calls extrapolation:

https://twitter.com/fchollet/status/1221194257043582976

There's also this depiction of different kinds of generalization. I do find it to be very odd and outright wrong.

https://twitter.com/fchollet/status/1230283303774605313

This depiction, in which accumulating enough narrow skills gets you to a higher level of intelligence, is a naive schema. Furthermore, it doesn't help to categorize generalization in this way.

It's as if general intelligence is similar to learning to play the piano or learning how to do math. With enough practice we become proficient in creating our own music or inventing new math. But these two examples assume general intelligence.

Music and math have their own vocabularies. Where do these original vocabularies come from? They come from inventions of a general intelligence. Now ask, where does the vocabulary of general intelligence come from? There is no external designer for this.

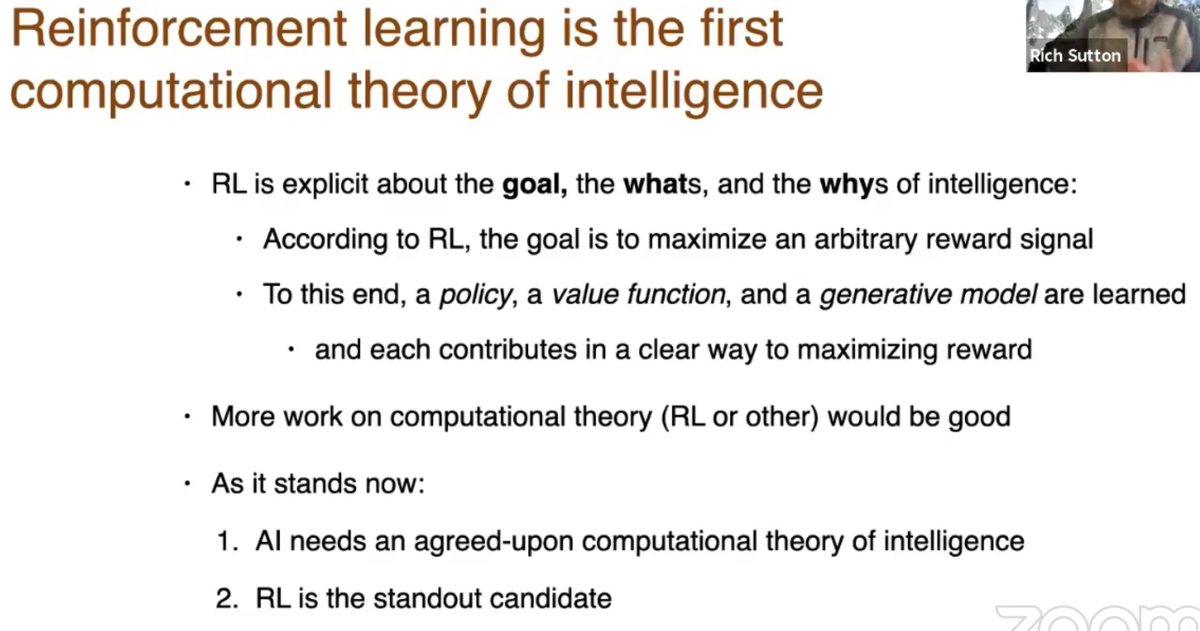

To understand general intelligence you have to at least start at what Marr called the computational level. Sutton is correct in formulating RL at the computational level.

There are many alternative computational theories. Any discussion about an approach to intelligence should begin here and not via a premature and arbitrary delineation of categories of generalization or interpolation. The word generalization is in fact a nebulous concept.

An interesting observation is that people who seem to have the highest intelligence also have a peculiar mix of personality traits. They tend toward the extreme in all five dimensions of the Big Five: openness, conscientiousness, extraversion, agreeableness, and calmness.

It's the cognitive inclination of an agent that leads to general intelligence. One might call this, more broadly, intrinsic motivation. This is different from what Sutton proposes, namely that reward is enough. Reward depends on motivation. I'm not sure how you can avoid this.

The human species has general intelligence as a consequence of its biological capabilities and its unique motivations. Other primates are biologically almost like humans, but they don't have the flexible jaws and the dextrous hands of humans.

But what distinguishes humans from other primates is the inclination towards shared intentionality. Take this collection of capabilities and a drive towards cooperative behavior and you get general intelligence. Absent these, you don't get there; the reward does not matter!

The biosphere has a multitude of subjective beings. There is an immense benefit for one being to predict the behavior of another subjective being. Herbivores predict plants, carnivores predict herbivores, social carnivores predict other social carnivores, and so on.

In general, though, the purpose of prediction is to seek energy. In physics, energy is a scalar quantity and has the quality of being interchangeable (i.e. fungible). This fungibility is not exactly present in complex systems.

This is because what is considered energy differs for every subjective being that consumes energy. Plants can consume sunlight, humans cannot. Humans today need money to get energy. Money is a kind of energy.

It's fascinating that all complex multicellular creatures are composed of eukaryotic cells. These cells are a symbiosis of an archaeon and a bacterium (the latter became the mitochondrion). The mitochondrion is the battery of the cell.

The reason biology is not like physics is that it's got these batteries. They are like memories of energy. In the models of how life originates, the focus is on how persistent structures are created out of energy.

What is often overlooked is how biology has created persistent energy stores that are capable of modulating the actions of a subjective agent. Complex behavior implies control of the release of energy. Physics does not cover this control.

The point here is that all intelligence revolves around the acquisition and control of energy. In human civilization this translates to the acquisition and control of money. The game is different for each different species, but it always revolves around some kind of energy.

This is perhaps why Friston is so fond of his Free Energy principle as the core of cognition. Homeostasis also revolves around the idea of energy conservation. Rewards are also just a proxy for energy.

What is often missing in this framing is that complex systems have multiple levels of complexity, and each level has a different kind of energy that drives it. Competence at each level implies competence in the control and acquisition of this energy.

Where does competence in these skills come from?

Does it not also feel a bit odd that the intelligence (or competence) to acquire different kinds of energy exists in the multitude of single-celled and multicellular organisms that occupy the biosphere?

There's immense complexity that resides inside all of biology. This is where general intelligence comes from. It's in the wetware. Understanding biology implies understanding cognition. The ironic thing is, computers are not biological.
