Learning to play Kuhn Poker with Monte Carlo Counterfactual Regret Minimization (MC-CFR) in #python

📝 Code/Tutorial: nn.labml.ai/cfr/index.html

This isn't deep learning. But it'll be interesting if you do machine learning, like incomplete information games or play #poker.

🧵👇

📝 Code/Tutorial: nn.labml.ai/cfr/index.html

This isn't deep learning. But it'll be interesting if you do machine learning, like incomplete information games or play #poker.

🧵👇

2/8) Kuhn Poker is a simple 2-player betting game with three cards (A, K, Q). A single card is dealt to each player. Players take turns betting chips and the player with the higher card wins the chips. If a player folds the other player wins the chips.

👇

👇

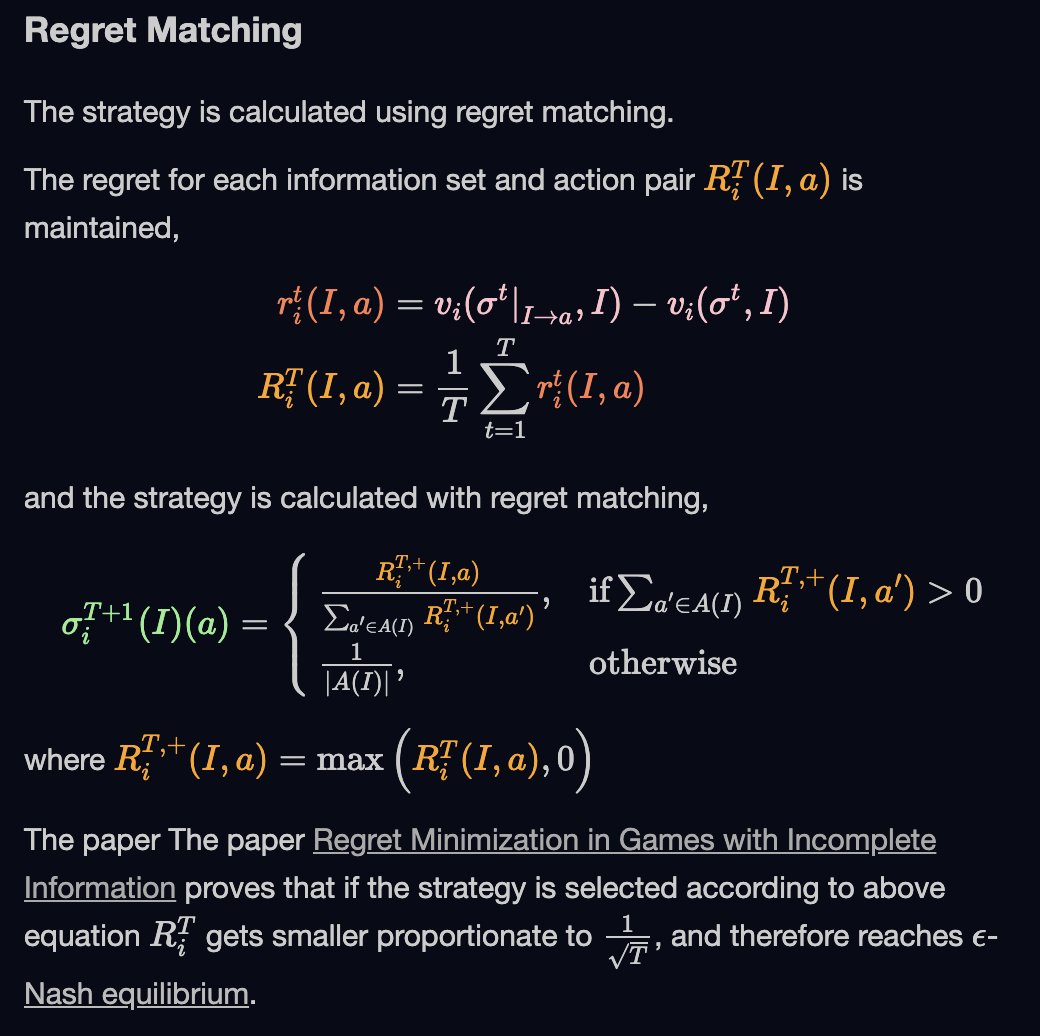

3/8) CFR finds the Nash equilibrium with self-play. In each iteration, it calculates the regret of following the current strategy instead of playing each action. Then it updates the strategy with regret matching:

strategy = regret of action/total regret of all actions

👇

strategy = regret of action/total regret of all actions

👇

4/8) The average of the strategies throughout the iterations gets close to the Nash equilibrium as we iterate.

Nash equilibrium is a state where no player can increase their expected payoff by changing their strategy.

👇

Nash equilibrium is a state where no player can increase their expected payoff by changing their strategy.

👇

5/8) The strategy is a function of "information set" and gives a probability distribution across actions. An "information set" is the state of the game that’s visible to the player.

👇

👇

6/8) Our implementation is accompanied by a lengthy introduction to CFR and MCCFR. The MCCFR implementation is abstracted from the game Kuhn Poker and we will add Leduc Poker implementation soon.

👇

👇

7/8) Here’s the Kuhn Poker experiment: nn.labml.ai/cfr/kuhn/index…

Colab Notebook with some visualizations:

colab.research.google.com/github/lab-ml/…

Results in @weights_biases:

wandb.ai/vpj/kuhn_poker…

👇

Colab Notebook with some visualizations:

colab.research.google.com/github/lab-ml/…

Results in @weights_biases:

wandb.ai/vpj/kuhn_poker…

👇

8/8) This implementation is based on a tutorial @vpj wrote about a year ago:

github.com/vpj/poker/blob…

We will add implementations for Leduc poker and more efficient variants of CFR such as public chance sampling (PCS) if you find it useful.

github.com/vpj/poker/blob…

We will add implementations for Leduc poker and more efficient variants of CFR such as public chance sampling (PCS) if you find it useful.

• • •

Missing some Tweet in this thread? You can try to

force a refresh