While we're doing a Detection Engineering AMA: how do you build these sorts of skills if you want to do that job for a living? Big question, but I'd focus on three areas for early-career folks...

https://twitter.com/chrissanders88/status/1408056413956497408

Investigative Experience -- Tuning detections involves investigating the alerts your signatures generate, so you need to be able to do that at some level. A year or two of SOC experience is a good way to start.

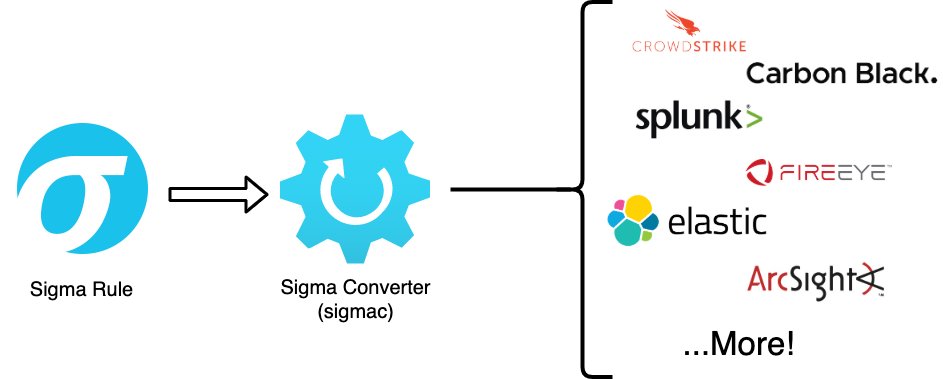

Detection Syntax -- You have to be able to express detection logic. Suricata for network traffic, Sigma for logs, YARA for files. Learn those and you can detect a lot of evil. They translate well to vendor-specific stuff.
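To make "expressing detection logic" a bit more concrete, here's a minimal sketch in Python (not an actual Sigma, Suricata, or YARA rule) of what a Sigma-style log detection boils down to: several field conditions that must all match, where each field can accept any of several values. The field names (`Image`, `CommandLine`), the encoded-PowerShell example, and the `matches` helper are illustrative assumptions. Real Sigma rules are written in YAML and are typically converted into SIEM-specific queries by tooling.

```python
from typing import Dict, List

# Hypothetical Sigma-style "selection": field name -> list of substrings.
# Fields are ANDed together; values within a field are ORed, roughly like
# Sigma's "contains" modifier applied to a list of values.
SELECTION: Dict[str, List[str]] = {
    "Image": ["\\powershell.exe"],
    "CommandLine": ["-enc", "-EncodedCommand"],
}


def matches(event: Dict[str, str], selection: Dict[str, List[str]]) -> bool:
    """Return True if every field in the selection contains at least one of its values."""
    for field, needles in selection.items():
        # Case-insensitive comparison; missing fields never match.
        value = event.get(field, "").lower()
        if not any(needle.lower() in value for needle in needles):
            return False
    return True


# Two made-up process-creation events for illustration.
events = [
    {"Image": r"C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe",
     "CommandLine": "powershell.exe -enc SQBFAFgA"},
    {"Image": r"C:\Windows\System32\notepad.exe",
     "CommandLine": "notepad.exe report.txt"},
]

alerts = [e for e in events if matches(e, SELECTION)]
print(len(alerts))  # 1
```

Only the PowerShell event with an encoded command fires. Tuning, in this framing, means adjusting those conditions as you investigate the alerts they produce.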

Attack Simulation -- You need to know how attacks manifest in files/logs/systems. Set up something like @DetectionLab and recreate attack scenarios, treating it like a science experiment. Also, get comfortable using malware sandboxes.

@DetectionLab and recreate attack scenarios, treating it like a science experiement. Also, get comfortable using malware sandboxes.

If you can do those things you can probably do this job (non-technical skills aside). No, you don't need to be able to write code. No, you don't need to be into AI/ML. Most detection engineering starts with signatures.

After that, it gets more specialized and evidence/platform-dependent.

You can learn these things on your own. You can also take classes to accelerate that learning (networkdefense.io).

Overall, I think detection engineering is one of the more accessible places for folks to join infosec because the feedback mechanisms within teams are plentiful.

Overall, I think detection engineering is one of the more accessible places for folks to join infosec bc the feedback mechanisms within teams are plentiful.

You can find these jobs with the big security vendors, larger localized SOCs, or managed service providers. They're pretty amenable to remote work, too!

• • •

Missing some Tweet in this thread? You can try to

force a refresh