1/ Challenge time has ended, so here comes the solution. Why did I challenge you to estimate the prevalence of the left-out group, you may ask yourself? Because finding it out on your own teaches you things.

2/ In this massive thread I won't give you the fish either, but I will give you all the tools to figure out the massive analysis errors that are made in most of the studies of this type. You may remember the missing deaths conundrum.

3/ Some believe these are signs of conspiracies; IMHO the problem is low skills in analyzing real-world messy data, so everybody just copies what others have done. In this case, most studies mimic the published protocols for the actual trials. And make the same mistakes.

4/ So after this little digression, let's get our hands dirty. Shall we?

If you don’t remember, you can look up the materials you will need here:

https://twitter.com/federicolois/status/1413661066433867782

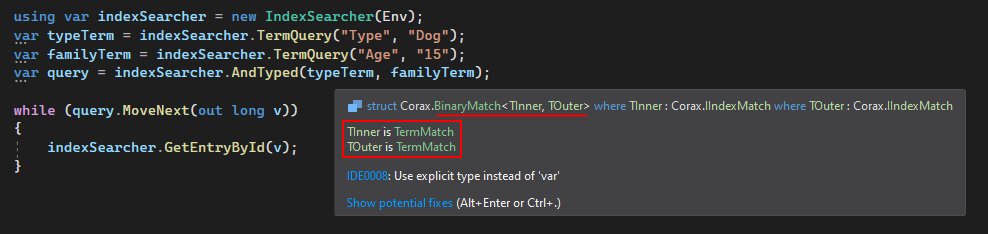

5/ Let’s start from the oddity. If you look at Figure 2 you will find that something is odd there. 5 seconds after being shown this picture I said: “WTF???” and @gerdosi can vouch for that, as he tagged me specifically because we had discussed the weird negative efficacy of the first 14 days.

6/ You know, when you know what you are looking for, it is very simple to spot when things are not where you would expect them to be. 84% efficacy with the same number of events per person-days? Color me unimpressed.

7/ For someone who has been bitching about studies making the same mistakes over and over and over again, it was not surprising at all that they made the same errors. If those were “the good numbers”, well, the outcome couldn’t be that good.

8/ So the next question I would ask myself is: “Is it possible given the details they provide to work backwards and reintroduce a reasonable estimation of what has been left out?” The keyword there is “reasonable”, more on that later.

9/ For this process it doesn't matter if the authors wanted to obfuscate or made an honest mistake. If they provide enough raw data to work, you can constrain the parameters to get reasonable estimations.

10/ As they provide the >60 incidence ratio, the first step is to estimate the incidence in the <60 as a way to understand how different >60 and <60 are in terms of event incidence. That is simple to do, because we know the “Total Persons Days” and “Events of Interest”.
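The rate in question is just events divided by person-days, scaled to 1,000 person-days. Here is a minimal sketch, assuming the <60 group is obtained by subtracting the >60 counts from the totals. The function names and the example numbers are made up for illustration and are not the figures from the paper.

```python
def incidence_per_1000_person_days(events, person_days):
    """Crude incidence rate: events per 1,000 person-days of follow-up."""
    if person_days <= 0:
        raise ValueError("person_days must be positive")
    return 1000.0 * events / person_days


def remainder_rate(total_events, total_person_days, sub_events, sub_person_days):
    """Rate in the complementary group (e.g. <60), given the totals
    and the counts for the reported subgroup (e.g. >60)."""
    return incidence_per_1000_person_days(
        total_events - sub_events,
        total_person_days - sub_person_days,
    )


# Hypothetical numbers, NOT taken from the study tables.
print(incidence_per_1000_person_days(50, 100_000))  # 0.5
print(remainder_rate(50, 100_000, 30, 20_000))      # 0.25
```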

11/ You may have noticed something interesting there: the results agree with the observation that most deaths happen in the >60 group regardless of the cohort you are in. There is also something else interesting: check the ratio between deaths. That’s good, or so I thought.

12/ Now we can do the same thing the paper did to calculate our extended incidence. When comparing the number of events that happen during surveillance time we find it is higher for the middle cohort. Negative efficacy anyone?
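To see why a higher rate in one cohort reads as "negative efficacy", here is a minimal sketch of a crude efficacy computed as one minus the rate ratio. This is an unadjusted back-of-the-envelope formula, not necessarily the estimator used in the paper, and the rates below are hypothetical.

```python
def crude_efficacy(rate_exposed, rate_reference):
    """Crude efficacy = 1 - (rate in exposed cohort / rate in reference cohort).
    Negative whenever the exposed cohort has the higher rate."""
    if rate_reference <= 0:
        raise ValueError("rate_reference must be positive")
    return 1.0 - rate_exposed / rate_reference


# Hypothetical rates per 1,000 person-days, NOT from the study.
print(crude_efficacy(0.25, 0.5))  # 0.5  -> half the reference rate
print(crude_efficacy(0.75, 0.5))  # -0.5 -> higher rate than the reference
```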

13/ There is also something else that is interesting. See the difference between the total incidence in the <60 and how the second cohort evens out the impact of the fully vaccinated cohort so you end up in the mean. Things that make you say...

14/ From this alone we can conclude “the second cohort” has *double the risk of dying* compared to the “no intervention” cohort. This is bad regardless of how you look at it. But did I mention everyone makes the same mistakes?

15/ As they claim on Table 2 we have some removed data. Do you remember where you have seen that? I do.

https://twitter.com/federicolois/status/1404202274390482946

16/ Can we ‘reintroduce’ (aka estimate) what has been left out? In most cases, no. In this case, yes we can, that’s the point of this whole thread. So let's start constraining the solutions using population data. That allows us to realize if we are making a big mistake.

17/ Understanding the eligible world allows you to estimate how many people were included (impossible to do if this weren't a population study).

18/ Chile has roughly 19M people, of which 19.5% are below 14 years old (ineligible), which gives us 15.2M to work with. But 1.6M were out and FONASA accounts for roughly 80% of the population.

19/ This is where constraints are interesting, see how our estimation has not deviated too much from the actual eligible population? 15.2M × 80% ≈ 12.2M, and 12.2M - 1.6M = 10.6M … So we are off by 0.5M people and we didn't subtract the population between 14 and 16 years old. Neat right? :D
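The population constraint can be checked in a few lines. This is a sketch using only the round figures quoted in the thread (variable names are mine). With unrounded intermediate values the age-eligible figure comes out closer to 15.3M than 15.2M, which does not change the final number after rounding.

```python
population = 19_000_000       # rough population of Chile, as quoted
share_under_14 = 0.195        # ineligible by age
fonasa_share = 0.80           # rough FONASA coverage
left_out = 1_600_000          # the 1.6M quoted as being out

eligible_by_age = population * (1 - share_under_14)
fonasa_eligible = eligible_by_age * fonasa_share
estimate = fonasa_eligible - left_out

print(f"eligible by age: {eligible_by_age / 1e6:.2f}M")
print(f"covered by FONASA: {fonasa_eligible / 1e6:.2f}M")
print(f"estimated study population: {estimate / 1e6:.2f}M")
```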

20/ We also know from Worldometer (we did the same with Israel) that the total for the study period (February 2nd - May 1st) was 470740 detected cases. “Cases” is a worthless metric for epidemic behavior but it is kinda useful in this context. Why?

21/ Because we have 2 numbers in the study: the one with the days of interest subtracted and the one they recorded on the population (found in the supplementary). As you can see they are slightly different.

22/ Armed with this knowledge we can estimate the incidence rate for these populations. Remember, that is the number of events per 1000 person-days. Ohhh, I know you are seeing what I am seeing.

23/ This is an oddity. The rate for the study is far lower than the Table S1.4 incidence, which is also lower than that of the ineligible population. Does the ineligible population test more? That’s a good riddle in itself. But isn’t it odd that the whole table has 13% more events?

24/ But you know, you can do the same thing not only for infections, you can do it for something more interesting. Like deaths.

25/ Holy moly!!! So the incidence rate from the study is no less than 13% lower than what you find in the remainder. What are the odds??? This is the moment you were waiting for, this is where you decide what to do. There is no coming back from here.

26/ Now we enter the danger zone. Why? Because we will start to estimate values, we need to be extra careful and check and recheck against the constraints we have built along the way. This is where everybody messes up, so I hope I am not making a mistake myself.

27/ We know from Table S1 that the total events found was 248645 and because I was scared to have done this wrong, I did the right thing and contacted the authors… they confirmed that this is the total events found (I have the email proof).

28/ What does this mean? It means that we can know how many events were excluded in the interesting timeframe, that is the 14 days after each event as it was stated under Table 2.

29/ If we start with the 248645 events and we subtract the events found in Table 2 we get:

248645 - (185633 + 20865 + 12286) = 29861 … So, now we need to attribute these to the first or second dose; for that we distribute them linearly according to the ratio of infections from Table S3.2
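The subtraction and the proportional split can be sketched as follows. The totals are the ones quoted above. The weights passed to `split_proportionally` are purely hypothetical placeholders, since the actual Table S3.2 ratio is not reproduced in this thread.

```python
total_events = 248_645                      # total events, confirmed by the authors
table2_events = [185_633, 20_865, 12_286]   # events reported in Table 2

excluded = total_events - sum(table2_events)
print(excluded)  # 29861


def split_proportionally(total, weights):
    """Distribute `total` across keys in proportion to their weights."""
    weight_sum = sum(weights.values())
    if weight_sum <= 0:
        raise ValueError("weights must sum to a positive number")
    return {key: total * w / weight_sum for key, w in weights.items()}


# HYPOTHETICAL weights, only to show the mechanics; plug in the
# first-dose / second-dose infection counts from Table S3.2 instead.
shares = split_proportionally(excluded, {"first_dose": 3.0, "second_dose": 1.0})
print(shares)
```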

30/ Some may say: “Hey but you are assuming stuff there”. I would say: YES. But they should have done this, not me. I would argue though, this is a fair assumption as it does not change the end result. I know what the end result is, remember. ;)

31/ Next question is: “How many would have died if ‘X’ additional people had been infected?” To estimate that we use the death ratio after the first and after the second dose. From that we have an expectation of 713 deaths for the first and 408 for the second.
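The expectation is just the reintroduced infections multiplied by the observed deaths-per-infection ratio for the same dose group. This is a minimal sketch with made-up inputs. The numbers below are not the ones behind the 713 and 408 figures, which depend on tables not reproduced here.

```python
def expected_deaths(extra_infections, observed_deaths, observed_infections):
    """Expected deaths among reintroduced infections, assuming they die at
    the same deaths-per-infection ratio as the observed infections."""
    if observed_infections <= 0:
        raise ValueError("observed_infections must be positive")
    return extra_infections * observed_deaths / observed_infections


# Hypothetical inputs for illustration only.
print(expected_deaths(1000, 20, 500))  # 40.0
```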

32/ I also asked the authors for this number, but they tacitly ignored the question. Do not presume guilt here. I asked if they had it; they probably didn’t bother to compute it because, as I mentioned, everybody makes the same mistake over and over again.

33/ Armed with this new knowledge the new death distribution looks a bit different. Considering that those out of the study were either vaccinated with Pfizer, belong to the <16yo group that has negligible risk, and/or are in the 20% not covered by FONASA, the numbers add up.

34/ To make the incidence estimation we also need to add the contribution of person-days from all those newly accounted infections that were removed. For that I am going to make a “controversial” choice. I will use the mean person-days per person.

35/ Why controversial? Because most first dose infections would have contributed less than 14 days, therefore I am giving the unvaccinated cohort an unfair treatment. I am giving the vaccinated cohort more person-days, which dilutes the incidence [this is key].
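The dilution effect is easy to see with a toy example. This is a sketch with hypothetical numbers (none come from the study): the same reintroduced events are added back once with the cohort's mean person-days per person, and once with at most 14 days each.

```python
def adjusted_rate(events, person_days, added_events, days_per_added_event):
    """Rate per 1,000 person-days after adding back excluded events,
    crediting each added event with `days_per_added_event` of follow-up."""
    new_events = events + added_events
    new_person_days = person_days + added_events * days_per_added_event
    return 1000.0 * new_events / new_person_days


# Hypothetical cohort, for illustration only.
events, person_days, persons = 100, 200_000.0, 2_000
added = 50

mean_days = person_days / persons                 # mean person-days per person
original = 1000.0 * events / person_days
with_mean = adjusted_rate(events, person_days, added, mean_days)
with_14 = adjusted_rate(events, person_days, added, 14)

print(f"original: {original:.4f}")
print(f"added back with mean person-days: {with_mean:.4f}")
print(f"added back with 14 days each: {with_14:.4f}")
```

Using the mean gives the larger denominator, so it produces the lower (more favorable) adjusted rate of the two choices.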

36/ Now we can really estimate the incidence of events in each cohort. By excluding the 14 days after each event you mess up your actual incidence, and therefore your efficacy and conclusions. QED: the paper and the estimation don't agree. Surprising result, right?

37/ I will say this: Peer review is broken. This kind of thing should never be published as is and should be caught in peer review. The authors have this data and could correct it at any time, but we cannot inspect it as it is deemed ‘private’.

38/ I don’t blame the authors, they did exactly what everybody else is doing and made the same mistakes. It could be possible that some of the actual numbers are different, but that begs the question: How much better do they have to be to fix this?

39/ On a key note, these mistakes may kill people and that is why scientists have to be extremely cautious with this data. Meanwhile, in the land of “Liberté, égalité, fraternité”, as almost everywhere else, nobody understands statistics.

40/
