The MobileNet family of convolutional architectures uses depth-wise convolutions, in which each channel of the input is convolved independently with its own filter.
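
A minimal NumPy sketch of what "convolved independently" means. The function name `depthwise_conv2d`, the (H, W, C) layout and the 'valid' padding are assumptions for illustration, not MobileNet's actual implementation. Each channel gets its own k x k filter, and the results are never summed across channels.

```python
import numpy as np

def depthwise_conv2d(x, kernels):
    """Depth-wise 2D convolution, 'valid' padding, stride 1 (hypothetical helper).

    x:       input of shape (H, W, C)
    kernels: one k x k filter per channel, shape (k, k, C)
    Returns an array of shape (H - k + 1, W - k + 1, C).
    """
    H, W, C = x.shape
    k = kernels.shape[0]
    assert kernels.shape == (k, k, C), "need exactly one filter per input channel"
    out = np.zeros((H - k + 1, W - k + 1, C))
    for c in range(C):  # each channel is processed on its own
        for i in range(H - k + 1):
            for j in range(W - k + 1):
                # Cross-correlation (as deep-learning frameworks compute it) of
                # channel c with its own filter; no summation across channels.
                out[i, j, c] = np.sum(x[i:i + k, j:j + k, c] * kernels[:, :, c])
    return out

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 5, 3))
kernels = rng.standard_normal((3, 3, 3))
y = depthwise_conv2d(x, kernels)
print(y.shape)  # (3, 3, 3)
```

A standard convolution would instead sum over all input channels for every output channel. In MobileNet blocks, channel mixing is left to 1x1 (point-wise) convolutions placed around the depth-wise step.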

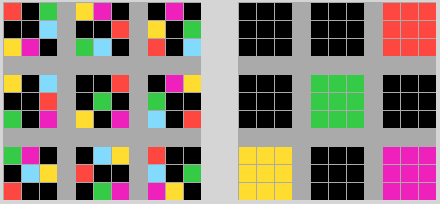

Their basic building block is called the "Inverted Residual Bottleneck". Here it is compared with the basic blocks of ResNet and Xception ("dw-conv" stands for depth-wise convolution).
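
A rough NumPy sketch of the block's data flow, assuming the MobileNetV2 layout: a 1x1 convolution expands the narrow input to t times as many channels (ReLU6), a 3x3 depth-wise convolution filters them (ReLU6), and a linear 1x1 convolution projects back down, with the residual connection joining the narrow ends. That is the "inverted" part: a ResNet bottleneck goes wide, narrow, wide, with the residual between the wide ends. Batch normalization and strides are left out, and all function and parameter names are hypothetical.

```python
import numpy as np

def relu6(x):
    return np.clip(x, 0.0, 6.0)

def pointwise_conv(x, w):
    """1x1 convolution: mixes channels at each pixel. x: (H, W, C_in), w: (C_in, C_out)."""
    return x @ w

def depthwise_conv_same(x, kernels):
    """Depth-wise k x k convolution, stride 1, zero 'same' padding (k odd)."""
    H, W, C = x.shape
    k = kernels.shape[0]
    p = k // 2
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))
    out = np.zeros(x.shape)
    for i in range(k):
        for j in range(k):
            # Add one kernel tap for all channels at once; kernels[i, j, :]
            # broadcasts along the channel axis, so channels never mix.
            out += xp[i:i + H, j:j + W, :] * kernels[i, j, :]
    return out

def inverted_residual_block(x, w_expand, dw_kernels, w_project):
    """Sketch of an inverted residual bottleneck (batch norm omitted).

    narrow (C) -> 1x1 expand + ReLU6 (t*C) -> depth-wise + ReLU6
               -> 1x1 linear projection (C) -> + x
    """
    h = relu6(pointwise_conv(x, w_expand))         # expand to more channels
    h = relu6(depthwise_conv_same(h, dw_kernels))  # spatial filtering, per channel
    h = pointwise_conv(h, w_project)               # linear bottleneck: no activation
    if h.shape == x.shape:                         # residual between the narrow ends
        h = h + x
    return h

rng = np.random.default_rng(0)
C, t = 8, 6  # t: expansion factor (6 is the usual MobileNetV2 setting)
x = rng.standard_normal((7, 7, C))
y = inverted_residual_block(
    x,
    w_expand=rng.standard_normal((C, t * C)) * 0.1,
    dw_kernels=rng.standard_normal((3, 3, t * C)) * 0.1,
    w_project=rng.standard_normal((t * C, C)) * 0.1,
)
print(y.shape)  # (7, 7, 8)
```

Keeping the expensive-looking wide tensor only inside the block, between two cheap 1x1 convolutions, is what lets the depth-wise step work on many channels while the block's inputs and outputs stay narrow.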

And, from the same family of architectures, EfficientNetB0. It is very similar, but was obtained through automated neural architecture search. Notice the 5x5 convolutions.
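
One way to see why 5x5 kernels are affordable in this kind of block is to count weights. This is a back-of-the-envelope sketch; the helper names and the 96-channel width are hypothetical. A depth-wise filter costs k² weights per channel, while a standard convolution costs k² weights per (input channel, output channel) pair.

```python
def standard_conv_weights(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out

def depthwise_conv_weights(k, channels):
    """Weights in a depth-wise k x k convolution: one k x k filter per channel."""
    return k * k * channels

def separable_conv_weights(k, c_in, c_out):
    """Depth-wise k x k convolution followed by a 1x1 point-wise convolution."""
    return depthwise_conv_weights(k, c_in) + standard_conv_weights(1, c_in, c_out)

C = 96  # hypothetical channel count, chosen only for illustration
for k in (3, 5):
    print(f"{k}x{k}: standard={standard_conv_weights(k, C, C)}, "
          f"depthwise={depthwise_conv_weights(k, C)}, "
          f"separable={separable_conv_weights(k, C, C)}")
# 3x3: standard=82944, depthwise=864, separable=10080
# 5x5: standard=230400, depthwise=2400, separable=11616
```

In this example, going from 3x3 to 5x5 makes a standard convolution about 2.8x larger but grows the depth-wise plus point-wise pair by only about 15%, since the 1x1 step dominates. At stride 1, multiply-adds per output pixel scale the same way as these weight counts.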

Illustrations from "Practical ML for Computer Vision" amazon.com/Practical-Mach…