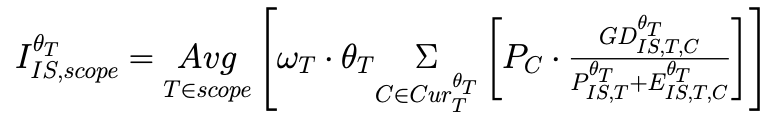

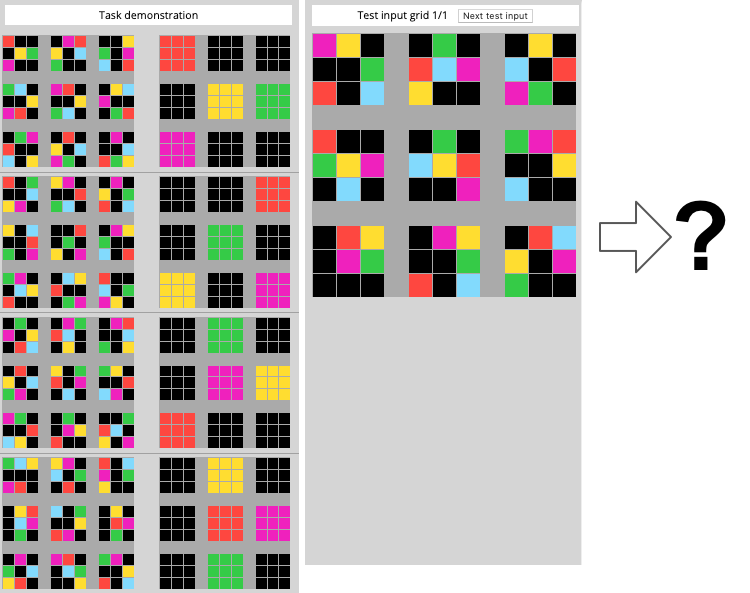

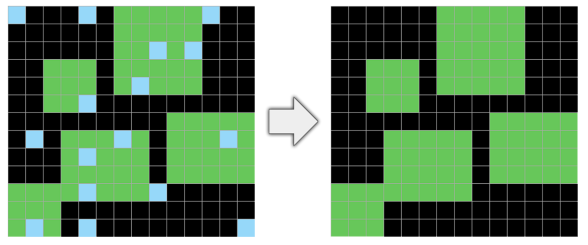

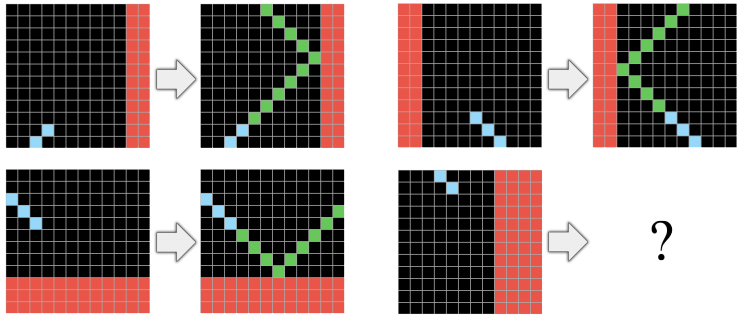

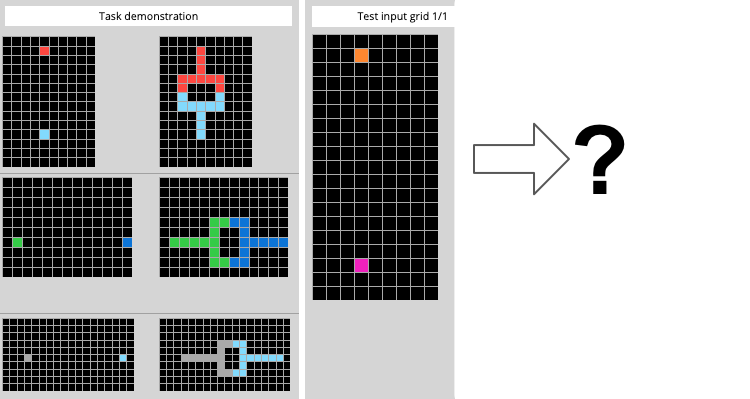

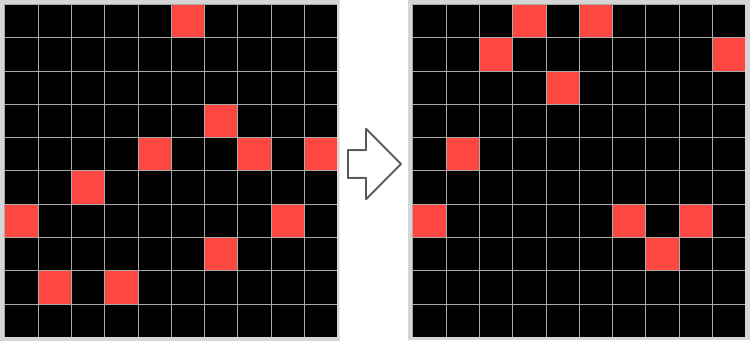

François Chollet's paper “On the Measure of Intelligence” (arxiv.org/abs/1911.01547) proposes a new benchmark for “intelligence” called the “Abstraction and Reasoning Corpus” (ARC).

Highlights below ->

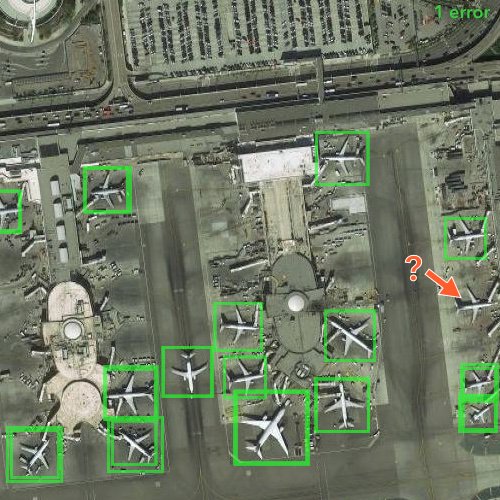

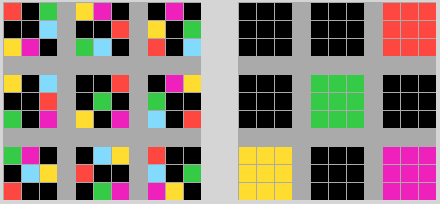

It seems incredibly easy for humans, but I have no idea how to program something that would solve it without being super-specialized...

- The tasks are relatively easy for humans

- The performance of any known AI system on them is close to zero

Which means that there is only one way to go for AI: UP!

🥳

github.com/fchollet/ARC

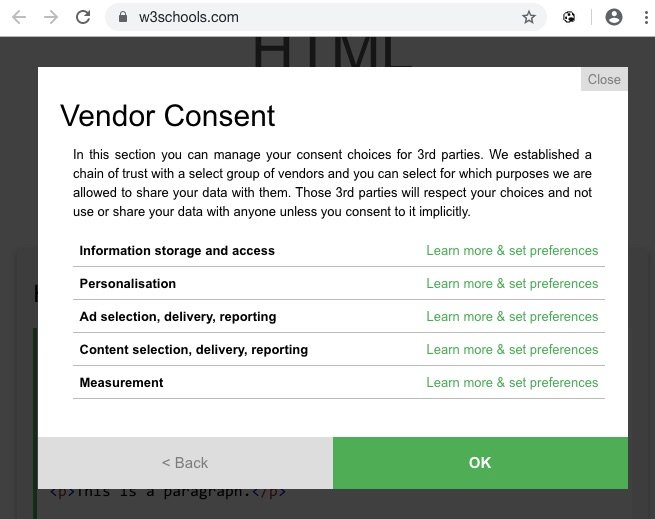

Git clone the repo and open the HTML interface in a browser. It’s a lot of fun to play with! 😃
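If you'd rather poke at the tasks from code, here is a minimal Python sketch. It assumes the task layout as I understand it from the repo: each task is a JSON object with `train` and `test` lists of `input`/`output` grid pairs, where a grid is a list of rows of integers. The task below is made up for illustration (it is not from the corpus), and `mirror_left_right` and `fits_all` are hypothetical helper names.

```python
import json

# A made-up task in the assumed ARC layout (not taken from the corpus):
# "train" and "test" are lists of {"input": grid, "output": grid},
# and a grid is a list of rows of small integers (colors).
TASK_JSON = """
{
  "train": [
    {"input": [[1, 0], [0, 0]], "output": [[0, 1], [0, 0]]},
    {"input": [[0, 0], [2, 0]], "output": [[0, 0], [0, 2]]}
  ],
  "test": [
    {"input": [[3, 0], [0, 0]], "output": [[0, 3], [0, 0]]}
  ]
}
"""


def mirror_left_right(grid):
    """Hand-written candidate rule: flip every row horizontally."""
    return [list(reversed(row)) for row in grid]


def fits_all(rule, pairs):
    """True if the rule maps every input grid to its expected output grid."""
    return all(rule(pair["input"]) == pair["output"] for pair in pairs)


task = json.loads(TASK_JSON)

# Check the rule against the demonstration pairs, then against the test pair.
solved_train = fits_all(mirror_left_right, task["train"])
solved_test = fits_all(mirror_left_right, task["test"])
print(solved_train, solved_test)
```

This is exactly the "super-specialized" trap: a hand-written rule like this fits one task and says nothing about the next one, which may need a completely different transformation.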