Continued thoughts

Why Tesla’s rate of improvement in planning and control should exceed its competitors’

🧵

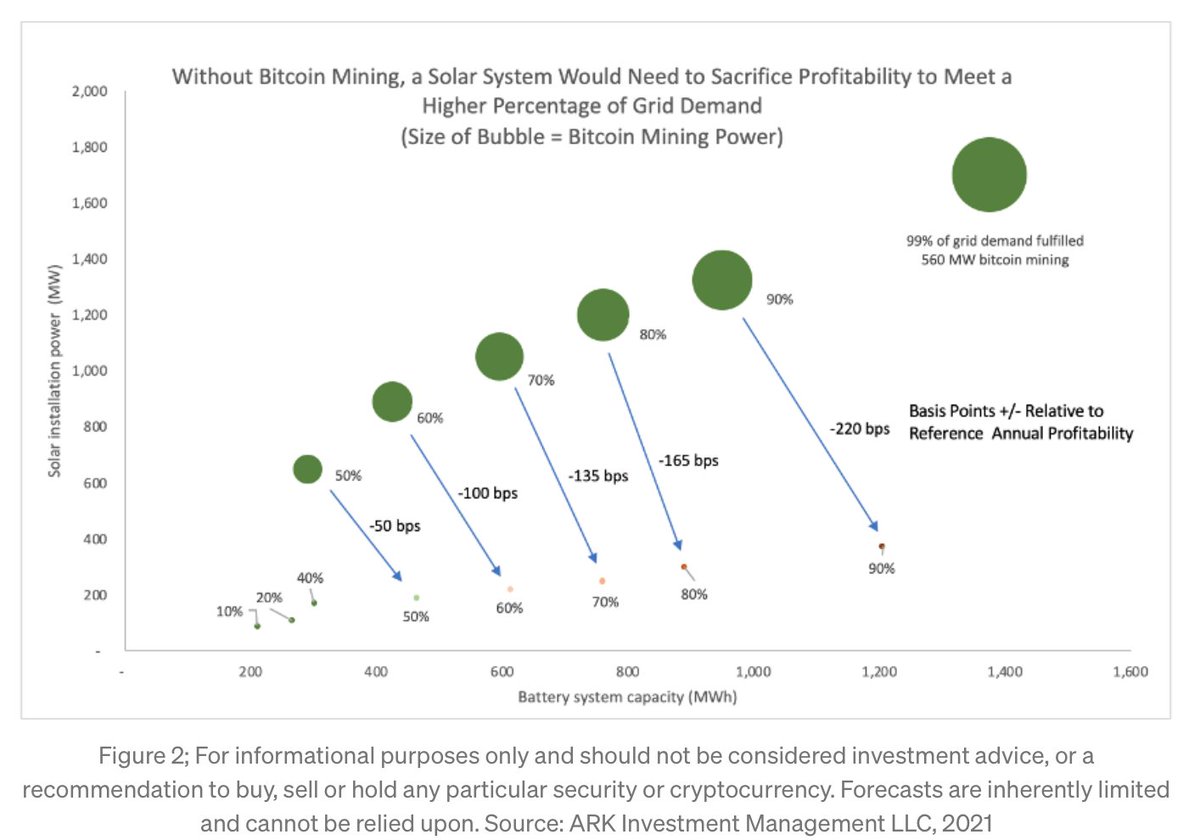

https://twitter.com/wintonARK/status/1429904355931541521

One reason Tesla's planning module should improve at a higher velocity than its competitors' is an undeniable advantage in error-finding.

Tesla can measure simulated intervention rates on its human-driven fleet by running its software in shadow mode.

/1

Further, upon updating its planning module it can more easily verify/refute the efficacy of the change, again by running in shadow mode.

Put simply, due to its fleet Tesla has a much quicker driving-policy error-detection-->solution-verification loop than its competitors.

/2
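A minimal sketch of what such a shadow-mode comparison could look like, assuming a logged trip is a list of frames and that a "simulated intervention" is any frame where the planner's command differs from the human's by more than a tolerance. The `Frame` schema, the `tolerance` value, and both toy planners are hypothetical and are not Tesla's actual pipeline.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Frame:
    """One logged moment from a human-driven trip (hypothetical schema)."""
    miles_since_last: float  # distance covered since the previous frame
    human_steer: float       # steering command the human actually gave
    obs: float               # stand-in for whatever the planner observes


Planner = Callable[[float], float]  # maps an observation to a steering command


def simulated_interventions_per_mile(frames: Sequence[Frame],
                                     planner: Planner,
                                     tolerance: float = 0.2) -> float:
    """Count frames where the planner disagrees with the human, per mile driven."""
    miles = sum(f.miles_since_last for f in frames)
    disagreements = sum(
        1 for f in frames if abs(planner(f.obs) - f.human_steer) > tolerance
    )
    return disagreements / miles if miles > 0 else 0.0


# The same logged drive is replayed against two planner versions.
log = [Frame(0.5, 0.0, 0.0), Frame(0.5, 0.1, 1.0),
       Frame(0.5, -0.4, 2.0), Frame(0.5, 0.0, 3.0)]
old_planner = lambda obs: 0.0                           # never steers
new_planner = lambda obs: -0.4 if obs == 2.0 else 0.0   # handles the swerve

print(simulated_interventions_per_mile(log, old_planner))  # 0.5
print(simulated_interventions_per_mile(log, new_planner))  # 0.0
```

Replaying the same logs against the old and new planner is the error-detection-->solution-verification loop in miniature: the rate drops only if the change fixed the disagreement.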

During AI Day, in answer to a question, Elon Musk estimated that each additional 9 of accuracy is an order of magnitude more difficult.

This is likely true for Tesla due to its data-surplus.

For its data-constrained competitors each 9 is probably 2 orders of magnitude more difficult.

/3

The crude logic: 1) for each incremental 9 of accuracy there are an order of magnitude more error-types that you have to solve (in essence the corner-cases get more "weird" and less generalizable)… and

/4

2) Each incremental corner-case is an order of magnitude less discoverable (you, by definition, have to put 10x more miles on the road to discover the incremental error.)

So each incremental 9 of accuracy requires two orders of magnitude more testing/data.

/5

Operating with an unconstrained data-set (relative to competitors), Tesla sees only a 10x increase in the complexity of the problem each time it reduces the intervention rate by an order of magnitude.

/6

Tesla's robotaxi competitors, however, are likely now operating in a data-constrained regime and need to figure out a way to increase their data take-rates by two orders of magnitude to achieve the additional order-of-magnitude reduction in intervention rate required to commercialize.

/7
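The crude logic above can be written as a toy model. This is only a sketch: the 10x factors are the thread's rough estimates rather than measurements, and treating the data-surplus case as a discovery factor of 1 is an assumption made for illustration.

```python
# Toy model only: the 10x factors are the thread's rough estimates, not data.

def difficulty_multiplier(extra_nines: int,
                          error_type_growth: float = 10.0,
                          discovery_growth: float = 10.0) -> float:
    """Relative effort after `extra_nines` more 9s of accuracy.

    Each extra 9 multiplies the number of error types by `error_type_growth`
    and the miles needed to find one of them by `discovery_growth`.
    """
    return (error_type_growth * discovery_growth) ** extra_nines


# Data-surplus regime (assumption: discovery is not the bottleneck, factor 1).
surplus = [difficulty_multiplier(n, discovery_growth=1.0) for n in range(4)]

# Data-constrained regime: both factors apply.
constrained = [difficulty_multiplier(n) for n in range(4)]

print(surplus)      # [1.0, 10.0, 100.0, 1000.0]
print(constrained)  # [1.0, 100.0, 10000.0, 1000000.0]
```

Under these assumptions the gap between the two regimes itself widens by 10x with every additional 9.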

This all assumes that the problem of robotaxi (at least in Western markets) is tractable with the more traditional algorithmic approaches that Tesla (and others) are currently using.

(@elonmusk and the team unabashedly believe this to be true)

/8

It could turn out, however, that the robotaxi problem's ramifying complexity requires a neural-net based approach to driving policy.

(This is my personal belief)

/9

If a driver-out solution requires neural nets then the advantage of having access to a massive ongoing corpus of real-world data only magnifies.

In effect, the utility of Tesla’s fleet data is even greater than Elon and team currently appreciate.

/10

In sum: it is still early days for Tesla's robotaxi planning and control, and its more generalizable approach to perception has spotted its competitors a multi-year lead on this, the thorniest problem in robotaxi.

But Tesla seems to have solved for acceleration rather than velocity.

/11

The harder that robotaxi driving policy proves to be, the more critical real-world data access will prove in solving it.

In effect, Tesla's competitive positioning has improved as the problem has proven to be more challenging than leading competitors anticipated.

/12

It remains to be seen if Tesla's current algorithmic approach will prove sufficient to commercialize robotaxi, but if not, the direction of travel requires more data and more compute, areas where the company has placed itself ahead of the field.

(More to come later).

/13
