In my blog post about GitHub Copilot/Codex (tmabraham.github.io/blog/github_co…), I pointed out its lack of knowledge of newer libraries like @fastdotai v2. When I tested @OpenAI Codex yesterday, it provided an almost-working example of fastai v2 code (the regex was off by one character 😛).

A few observations:

1. You have to specifically ask for fastai v2 code, and even then the import needs to be changed from "fastai2.vision.all" to "fastai.vision.all".

2. It understands the differences between the fastai v1 and v2 APIs (correct use of ImageDataLoaders, the fine_tune function new to v2, and item_tfms to resize before batching).

3. I went back to GitHub Copilot and tried prompting it to write fastai v2 code, but it seems to fail (it only provides fastai v1 code). So it seems the Codex models available through the OpenAI API are trained on more recent data.
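To make the first two observations concrete, here is a minimal sketch. It is not the code Codex generated. `fix_fastai_import` and `train_sketch` are hypothetical helper names, and the folder-per-class layout, `resnet34`, the 224-pixel resize, and the 20% validation split are all assumptions chosen for illustration. It also assumes a recent fastai v2 release where the learner factory is named `vision_learner` (earlier v2 releases called it `cnn_learner`).

```python
import re

# Matches the pre-release package name "fastai2" only where it is the module
# being imported, e.g. "from fastai2.vision.all import *" or "import fastai2".
_OLD_IMPORT = re.compile(r"^(\s*(?:from|import)\s+)fastai2\b", flags=re.MULTILINE)


def fix_fastai_import(source: str) -> str:
    """Rewrite 'fastai2' imports in generated code to 'fastai' (hypothetical helper)."""
    return _OLD_IMPORT.sub(r"\1fastai", source)


def train_sketch(path, epochs=1):
    """Fine-tune a pretrained CNN on images stored one folder per class under `path`.

    `path` is an assumed local directory; nothing here is tied to a specific dataset.
    """
    # Imported lazily so fix_fastai_import works without fastai installed.
    from fastai.vision.all import (
        ImageDataLoaders, Resize, error_rate, resnet34, vision_learner,
    )

    # item_tfms resizes each image individually so images can be collated into batches.
    dls = ImageDataLoaders.from_folder(
        path, valid_pct=0.2, seed=42, item_tfms=Resize(224)
    )
    learn = vision_learner(dls, resnet34, metrics=error_rate)
    # fine_tune is the v2 convenience method: it trains the new head first,
    # then unfreezes and trains the whole model.
    learn.fine_tune(epochs)
    return learn
```

Running `fix_fastai_import` over the generated snippet handles the import rename without touching other occurrences of the string; `train_sketch` only runs with fastai installed and a real image folder.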

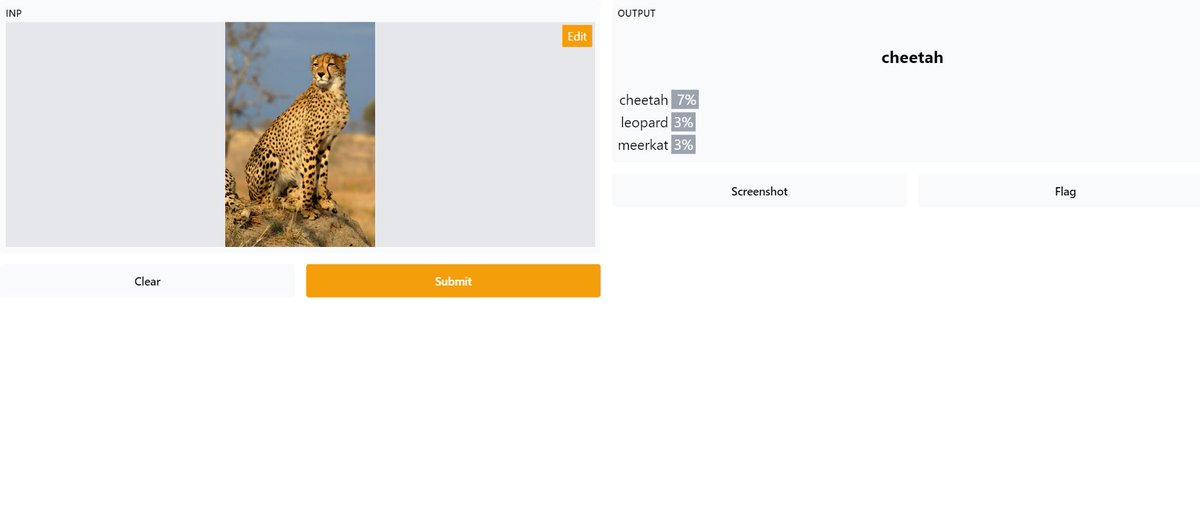

Adding to the main thread a completely working (except for the fastai2 import) example with the Caltech 101 dataset:

https://twitter.com/iScienceLuvr/status/1440771841451192335
