❗️India must take official notice of the #FacebookFiles immediately❗️

IFF has asked the Standing Committee on IT to initiate an inquiry into the @FrancesHaugen & @szhang_ds revelations and invite them & #FacebookIndia reps for official testimony 👇🏽 🧵 1/9

internetfreedom.in/your-mom-was-r…

Stay with us as we break down the myth vs. reality of #Facebook, according to the company's own documentation!

First up: Political #misinformation and violent extremism. Can Facebook stand up to the challenge? According to reports, the answer is a NO. 2/9

Does #Facebook mislead the public about proactive hate-speech removal? The answer is YES — Facebook removes only 5% of the hate on its platforms!

Not just that, but the #FacebookFiles reveal that their platforms are literally primed for virality that promotes #hatespeech. 3/9

Negative mental and physical health implications for children and teenagers? #Facebook owned #Instagram checks all the boxes! ⬇️

Read IFF’s full analysis: internetfreedom.in/your-mom-was-r… 4/9

#Facebook facilitates "human exploitation and trafficking" according to its own internal documentation!

The "three stages of the human exploitation lifecycle" enabled by Facebook are: recruitment, facilitation and exploitation. #FacebookFiles 5/9

When Facebook says it prioritises “meaningful social interactions”, it means that the algorithm prioritises content with a higher probability of getting any interaction (like reactions & shares).

However, it is precisely these priorities that increase divisive content. 6/9

If you thought that Facebook's "community guidelines" applied equally to all its users, irrespective of reach or prominence, you were wrong.

In a document titled “Whitelisting”, Facebook admits that many “pages, profiles and entities [were] exempted from enforcement”. 7/9

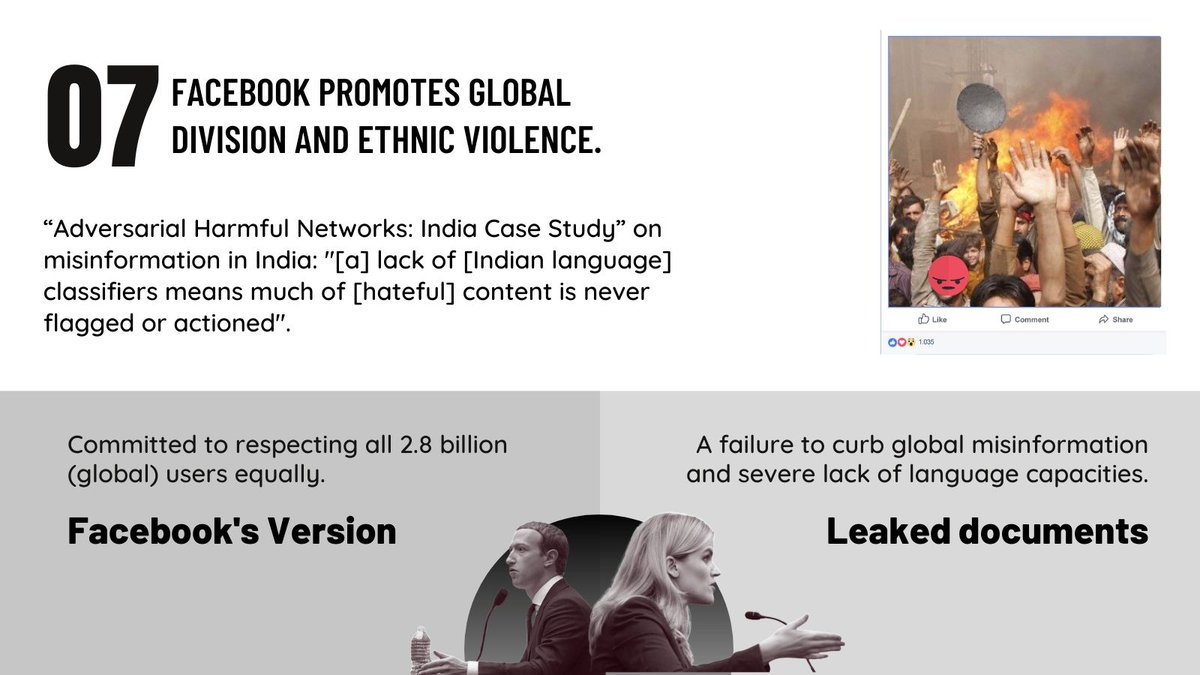

Now revealed beyond doubt: Facebook spends most of its attention and global budget against misinformation and hate speech on English-speaking countries in the Western world, *even though* most of its users exist in other areas around the globe. #FacebookFiles 8/9

And if these issues weren't enough, Facebook also lied to its investors & shareholders about: its user base, advertising reach + content production on the platform.

It's no wonder that @FrancesHaugen has asked #Facebook to declare "moral bankruptcy"👇🏽 9/9

theguardian.com/us-news/2021/o…

Follow us to stay updated on the latest analyses around #FacebookFiles in India. With its largest user base in the country, Facebook owes answers to India and Indian authorities.

Keep our work going! Invest in India's digital rights today 🗣

internetfreedom.in/donate/
