The anonymous people behind ivm meta dot com have put together a response to this excellent piece. Their main argument is that the piece doesn't look at all the evidence

So, following Scott Alexander's fine example, let's briefly review the prophylaxis literature 1/n

https://twitter.com/GidMK/status/1461080114536476676

2/n Here are all 15 prophylaxis studies, in their wonderful glory

I'm going to try and be brief, but we'll see how that goes

3/n First up, Shouman. In this 'randomized' study, the authors stopped allocating people to the control group partway through, there was no allocation concealment, and there were massive differences between groups at baseline

Incredibly low-quality. Exclude

https://twitter.com/GidMK/status/1452498110345715712?s=20

4/n Then we've got Carvallo, twice. This is the study with numeric mistakes in the reporting of results, and where the hospitals and researchers involved have said they have never heard of the study

Likely fraud. Exclude

https://twitter.com/stephaniemlee/status/1433551906480148480?s=20

5/n Next, Behera #1. A case-control study that matched on gender, age, and date of diagnosis. Obviously, matching on just these three variables is not sufficient to control for confounding from any number of other factors

6/n Worse still, there appear to be serious concerns about both this study and the other Behera study. At least one of the data files has demonstrable problems

https://twitter.com/sTeamTraen/status/1424873885682569219?s=20

7/n On top of that, at an absolute minimum, both studies were conducted on the same patient population at the same time with almost exactly the same number of positive tests

Many red flags. Exclude

https://twitter.com/GidMK/status/1453171733402316800?s=20

8/n Moving on, we've got Hellwig. This study and Tanioka ran fundamentally the same analysis, so I'd group them together

The analysis is, in a word, shit

https://twitter.com/GidMK/status/1436201903788998657?s=20

9/n Both studies are ecological in nature, and simply compare places where ivermectin was used in mass drug administration programs in Africa against reported cases/deaths

10/n Obviously, this is a very weak methodology that you'd usually exclude from any meta-analysis. However, in addition (for the reasons outlined in the thread above), it's also gibberish

Exclude

11/n Bernigaud. A short case series of 69 people who received ivermectin while living in a nursing home, compared to a synthetic control drawn from other nursing homes

Pretty useless as evidence. Exclude

12/n Next, Alam et al. A non-randomized trial conducted in Bangladesh, which found vast benefits for ivermectin as prophylaxis

13/n This is obviously very low-quality, given that the methodology is scant, there's virtually no information on key metrics of interest (COVID-19 exposure, for example), and as an observational trial we'd usually exclude it simply because of those issues

14/n But on top of that, there are some incorrect/impossible numbers in the document, there are passages that appear to have been copied from other work (h/t @JackMLawrence), and the attack rate in the control group is wildly implausible

Exclude

15/n Next, we've got IVERCOR PREP, which it turns out is a single slide tweeted out once by an anonymous account

Obviously exclude, but I thought I'd investigate further anyway

16/n I emailed the senior author on the IVERCOR paper, and asked about this slide. It turns out, this was merely an illustrative slide from a presentation on the paper given by one of the authors, and he was shocked that anyone would be using it as evidence of anything

17/n That brings us to Chahla et al. The second randomized trial on our list!

It's really, deeply shit medrxiv.org/content/10.110…

18/n The randomization process makes allocation concealment impossible. They report 100% follow-up of healthcare personnel over several weeks. There are numeric errors in the tables. They have issues with the sample size

So many red flags. Exclude

19/n Moving past the second Behera study and Tanioka, which I've discussed already, brings us to the first half-decent study so far, Seet. A cluster-RCT from Singapore that divided dorms into different treatments, and looked at the effect on risk of COVID

ijidonline.com/article/S1201-…

20/n There are definitely some quibbles here. Clustering at the dorm level leads to issues with sharing of meds (that the authors acknowledge). Also, there were some issues with part of the primary outcome measure of seropositivity

21/n However, the study is reasonably solid as such things go. For the primary, pre-registered outcome measure there was a small, non-significant benefit for ivermectin compared to vitamin C (65% vs 70% infected)
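To put that in perspective, here's a minimal sketch of the arithmetic on the rounded percentages quoted above (65% vs 70%), written in Python. It's a naive calculation that ignores the dorm-level clustering, so it tells you nothing about statistical significance; the constant and function names are my own, not from the paper.

```python
# Rounded infection proportions as quoted in this thread for Seet et al.
# (ivermectin arm vs vitamin C arm). Not the paper's raw counts.
IVERMECTIN_INFECTED = 0.65
VITAMIN_C_INFECTED = 0.70


def risk_ratio(p_treated: float, p_control: float) -> float:
    """Infection risk in the treated arm divided by risk in the control arm."""
    return p_treated / p_control


def risk_difference(p_treated: float, p_control: float) -> float:
    """Absolute difference in infection risk (treated minus control)."""
    return p_treated - p_control


if __name__ == "__main__":
    rr = risk_ratio(IVERMECTIN_INFECTED, VITAMIN_C_INFECTED)
    rd = risk_difference(IVERMECTIN_INFECTED, VITAMIN_C_INFECTED)
    # Naive point estimates only: no clustering adjustment, no confidence interval.
    print(f"risk ratio: {rr:.2f}")
    print(f"relative reduction: {1 - rr:.0%}")
    print(f"risk difference: {rd:+.2f}")
```

On these figures that works out to a risk ratio of roughly 0.93, i.e. about a 7% relative reduction or 5 percentage points in absolute terms, which is the "small" in "small, non-significant benefit".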

22/n A lower proportion of people reported symptoms in the ivermectin group than in the vitamin C control group, but since the trial was open-label and symptoms weren't part of the main cluster-randomized analysis, that result is hard to interpret

Include

23/n Here we're getting to the last few studies. Morgenstern is an observational study from the Dominican Republic that used a propensity scoring model to compare healthcare workers who did and didn't take ivermectin cureus.com/articles/63131…

24/n From an initial sample of 943, the authors whittled their numbers down to 542 through an odd series of exclusions. Why you'd discard nearly half your sample in an observational study is confusing to me

25/n The propensity model controlled for gender (m/f), exposure (l/m/h), and role (doctor/nurse/assistant/other)

That's just...not sufficient. Huge potential for residual confounding, especially as they had to discard ~1/4 of their sample to use the propensity model

26/n This is the sort of study that might've been useful if the authors had had 20,000 patients matched across dozens of variables, but this methodology doesn't eliminate key biases on a sample this small

Exclude

27/n Then we've got Mondal. This is a slightly odd serosurvey of healthcare workers, where the authors ran a logistic regression looking at factors that predict a positive IgG result for SARS-CoV-2

onlinejima.com/read_journals.…

28/n As far as uncontrolled studies go, it's a bit weak. Some of the percentages reported in the paper are off, the numbers don't quite match, and the methodology is pretty mediocre. Massive potential for residual confounding, and also no real control group

Exclude

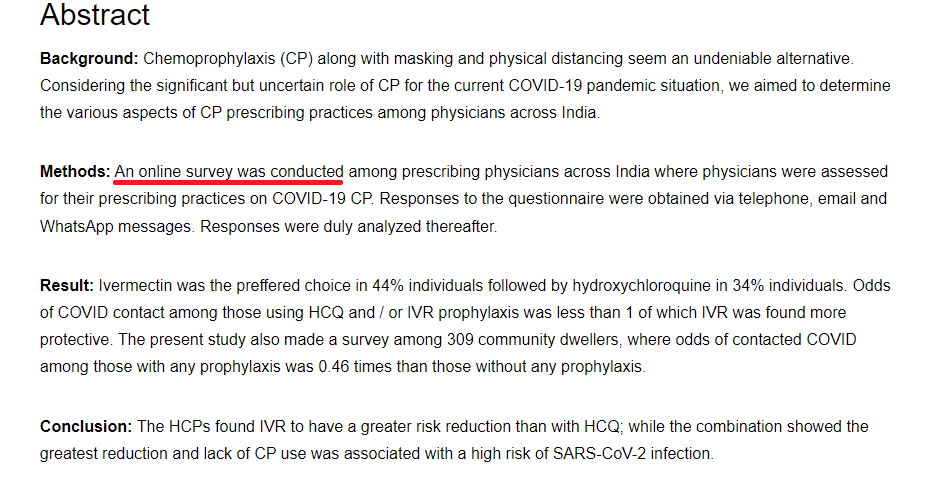

29/n Finally, the newest study, Samajdar, where a group of Indian researchers ran an online survey about which medications healthcare workers liked

Just...what? This is not evidence about ivermectin in any sense. Exclude

japi.org/x2a464b4/iverm…

30/n Overall, that leaves us with this picture. One study among 15 that isn't potentially fake, extremely bad, or just useless as evidence

31/n There's not a whole lot you can take home from Seet, to be honest. The potential benefits are mostly in reported symptoms which may be biased due to the lack of blinding. The more objective lab tests found very little, if any, benefit for ivermectin

32/n So what do you say to the question of whether ivermectin works as a prophylactic?

NO ONE KNOWS

The evidence is shit, and the one decent-ish study is inconclusive. A big old shrug 🤷‍♂️🤷‍♂️

33/n Anyway, the simple fact is that the prophylaxis literature is, if anything, WORSE than the treatment studies. This is evidence so woeful that you could not possibly draw a reasonable conclusion about whether ivermectin does or doesn't work
