Have you been seeing artwork like this on your timeline and wondered how it was created?

Let's learn about one of the most popular algorithms for AI-generated art! ⬇ ⬇ ⬇

The technique is known as "VQGAN+CLIP" and is a combination of two deep learning models, both released earlier this year. 2/11

VQGAN - Vector-Quantized Generative Adversarial Network

VQGAN is a type of GAN, which is a class of *generative* neural networks that have been used for #deepfakes and other AI-generated art techniques

You pass a vector/code and VQGAN generates an image. 3/11

VQGAN, like many of the cutting-edge GANs, has a continuous, traversable latent space, which means that codes with similar values will generate similar images, and following a smooth path from one code to another will lead to a smooth interpolation from one image to another 4/11

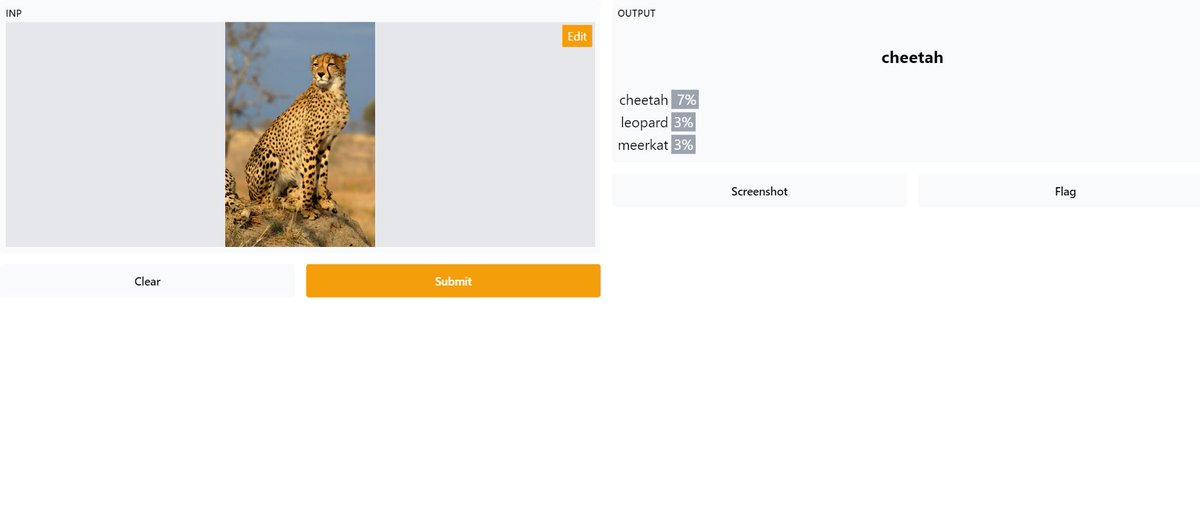
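To make the "smooth path between codes" idea concrete, here is a minimal sketch of linear interpolation between two latent codes. The 4-dimensional codes and the helper names `lerp` and `interpolation_path` are made up for illustration; real VQGAN latents are far larger, and each interpolated code would be fed to the generator to render one frame.

```python
def lerp(code_a, code_b, t):
    """Linearly interpolate between two codes: t=0 gives code_a, t=1 gives code_b."""
    return [(1 - t) * a + t * b for a, b in zip(code_a, code_b)]


def interpolation_path(code_a, code_b, steps):
    """Return `steps` evenly spaced codes from code_a to code_b (steps >= 2)."""
    return [lerp(code_a, code_b, i / (steps - 1)) for i in range(steps)]


# Two hypothetical 4-dimensional latent codes (toy values, not real VQGAN codes).
code_a = [0.0, 1.0, -2.0, 0.5]
code_b = [1.0, -1.0, 2.0, 0.5]

path = interpolation_path(code_a, code_b, steps=5)
for code in path:
    # In a real pipeline, each code would be decoded into an image here.
    print(code)
```

Because neighbouring codes on the path differ only slightly, a generator with a smooth latent space would turn this list into a gradual morph from one image to the other.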

CLIP - Contrastive Language-Image Pretraining

CLIP is a model released by @OpenAI (the same company that developed GPT-3!).

It can be used to measure the similarity between an input image and text. 5/11
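As a rough sketch of what "similarity" means here: CLIP encodes the image and the text into vectors in a shared space and compares them with cosine similarity. The three-number embeddings below are invented stand-ins (real CLIP embeddings have hundreds of dimensions and come from its image and text encoders, which are not shown).

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1 = same direction, -1 = opposite."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical embeddings, chosen by hand for illustration only.
image_embedding = [0.9, 0.1, 0.3]
matching_text = [0.8, 0.2, 0.4]     # pretend this caption describes the image
unrelated_text = [-0.5, 0.9, -0.1]  # pretend this caption does not

sim_match = cosine_similarity(image_embedding, matching_text)
sim_unrelated = cosine_similarity(image_embedding, unrelated_text)
print(f"matching caption:  {sim_match:.3f}")
print(f"unrelated caption: {sim_unrelated:.3f}")
```

A higher score means the text is a better description of the image, which is exactly the signal the VQGAN+CLIP loop tries to push up.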

VQGAN+CLIP:

1. Start w/ an init. image generated by VQGAN w/ a random code & input text provided by user.

2. CLIP provides a similarity measure for the image and text.

3. Through optimization (gradient ascent), iteratively adjust the image to maximize the CLIP similarity. 6/11
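The three steps above can be sketched as a toy loop. This is not the real pipeline: `generate` is a tiny linear map standing in for VQGAN, `similarity` is a plain cosine similarity standing in for CLIP, and gradients are estimated with finite differences instead of backpropagation. All names, sizes, and hyperparameters are assumptions for illustration.

```python
import math
import random


def generate(code):
    """Toy stand-in for VQGAN: maps a 2-D code to a 3-D 'image'."""
    x, y = code
    return [x + y, x - y, 0.5 * x]


def similarity(image, text_embedding):
    """Toy stand-in for CLIP: cosine similarity of 'image' and text embedding."""
    dot = sum(a * b for a, b in zip(image, text_embedding))
    norm_image = math.sqrt(sum(a * a for a in image))
    norm_text = math.sqrt(sum(b * b for b in text_embedding))
    return dot / (norm_image * norm_text)


def score(code, text_embedding):
    """Similarity between the image generated from `code` and the text."""
    return similarity(generate(code), text_embedding)


def numerical_gradient(code, text_embedding, eps=1e-5):
    """Central finite-difference estimate of d(score)/d(code)."""
    grad = []
    for i in range(len(code)):
        up, down = list(code), list(code)
        up[i] += eps
        down[i] -= eps
        diff = score(up, text_embedding) - score(down, text_embedding)
        grad.append(diff / (2 * eps))
    return grad


def optimize(text_embedding, steps=200, lr=0.1, seed=0):
    rng = random.Random(seed)
    code = [rng.uniform(-1, 1) for _ in range(2)]    # step 1: random code
    history = []
    for _ in range(steps):
        history.append(score(code, text_embedding))  # step 2: measure similarity
        grad = numerical_gradient(code, text_embedding)
        code = [c + lr * g for c, g in zip(code, grad)]  # step 3: gradient ascent
    history.append(score(code, text_embedding))
    return code, history


# Pretend the prompt's embedding matches the image produced by code [1.0, -2.0].
text_embedding = generate([1.0, -2.0])
best_code, history = optimize(text_embedding)
print(f"similarity: {history[0]:.3f} -> {history[-1]:.3f}")
```

In real VQGAN+CLIP tools the gradient flows back through both networks automatically, but the shape of the loop is the same: generate, score against the text, nudge the code uphill, repeat.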

That's all there is to it!

Essentially, CLIP guides a search through the latent space of VQGAN to find the vectors that map to images which fit a given sequence of words. 7/11

VQGAN+CLIP sometimes has unexpected behaviors based on different statistical properties learned during training. This includes the infamous "unreal engine" trick. 8/11

https://twitter.com/arankomatsuzaki/status/1399471244760649729

It's fascinating to see how rewording your prompt or including additional terms (known as prompt engineering) can lead to a wide diversity of results! 9/11

While @WOMBO doesn't specify the algorithm used, and it might not be exactly the same as existing VQGAN+CLIP tools, the underlying models & principles remain the same, though maybe tweaked a bit in terms of the prompt (especially for the style selection) & the optimization process. 10/11

Hope this short thread helps!

I recommend reading @sea_snell's blog post if you want to dive deeper:

ml.berkeley.edu/blog/posts/cli…

If you like this thread, please share!

Consider following me for AI/ML-related content! 🙂

Oh and adding a note that @advadnoun and @RiversHaveWings were the original pioneers of the VQGAN+CLIP technique (described in more detail in the above blog post)... Check out their work and give them a follow!
