Attending @NeurIPSConf #NeurIPS2021 today?

Interested in algorithmic reasoning, implicit planning, knowledge transfer or bioinformatics?

We have 3 posters (1 spotlight!) in the poster session (4:30--6pm UK time) you might find interesting; consider stopping by! Details below: 🧵

Interested in algorithmic reasoning, implicit planning, knowledge transfer or bioinformatics?

We have 3 posters (1 spotlight!) in the poster session (4:30--6pm UK time) you might find interesting; consider stopping by! Details below: 🧵

(1) "Neural Algorithmic Reasoners are Implicit Planners" (Spotlight); with @andreeadeac22, Ognjen Milinković, @pierrelux, @tangjianpku & Mladen Nikolić.

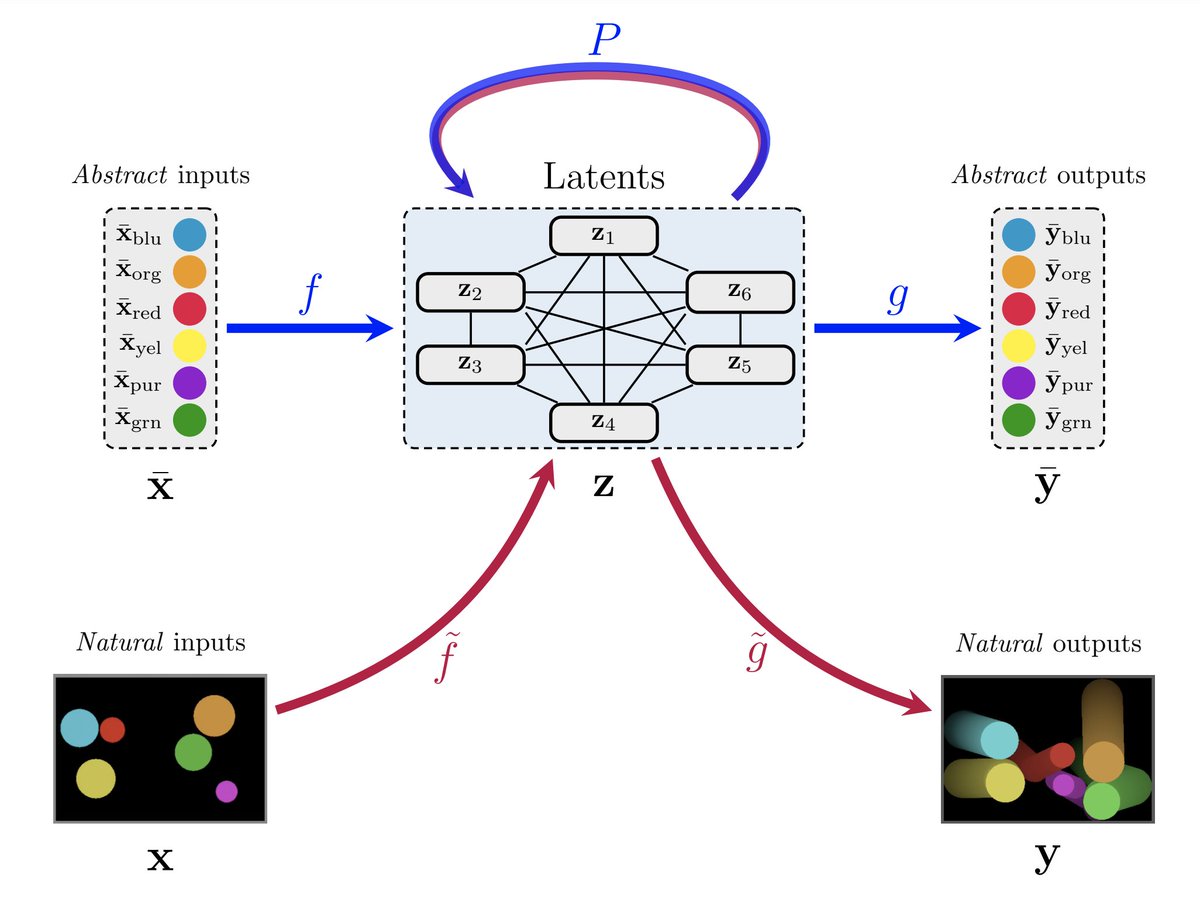

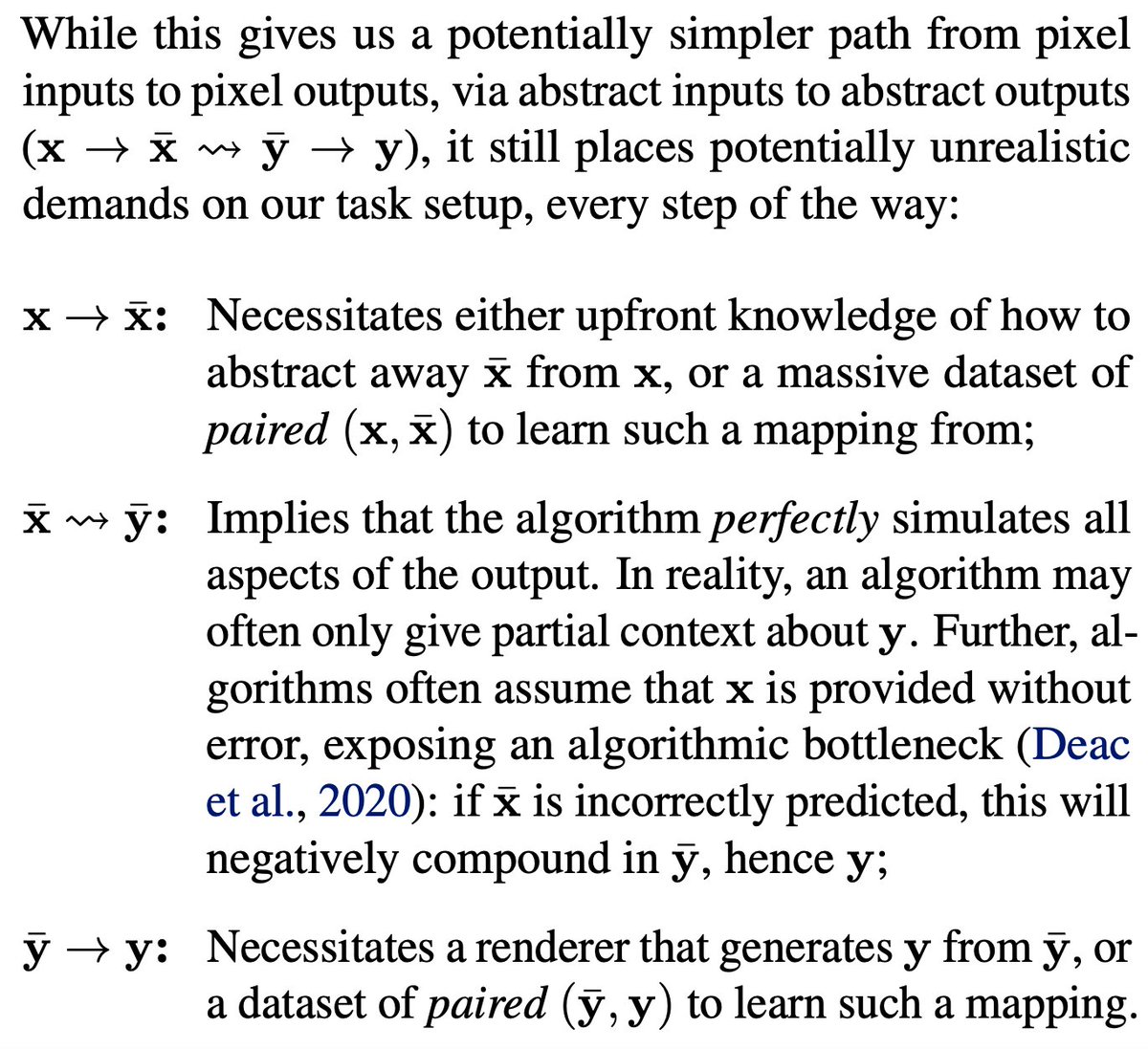

Value Iteration-based implicit planner (XLVIN), which successfully breaks the algorithmic bottleneck & yields low-data gains.

Value Iteration-based implicit planner (XLVIN), which successfully breaks the algorithmic bottleneck & yields low-data gains.

(2) "How to transfer algorithmic reasoning knowledge to learn new algorithms?"; with Louis-Pascal Xhonneux, @andreeadeac22 & @tangjianpku.

Studying how to successfully transfer algorithmic reasoning knowledge when intermediate supervision traces are missing.

Studying how to successfully transfer algorithmic reasoning knowledge when intermediate supervision traces are missing.

(3) "Neural Distance Embeddings for Biological Sequences"; with @GabriCorso, @RexYing0923, @Mpmisko, @jure & @pl219_Cambridge.

Framework to embed biological sequences in a hyperbolic space, and derive useful relations between them (e.g. alignment, similarity, clustering).

Framework to embed biological sequences in a hyperbolic space, and derive useful relations between them (e.g. alignment, similarity, clustering).

• • •

Missing some Tweet in this thread? You can try to

force a refresh