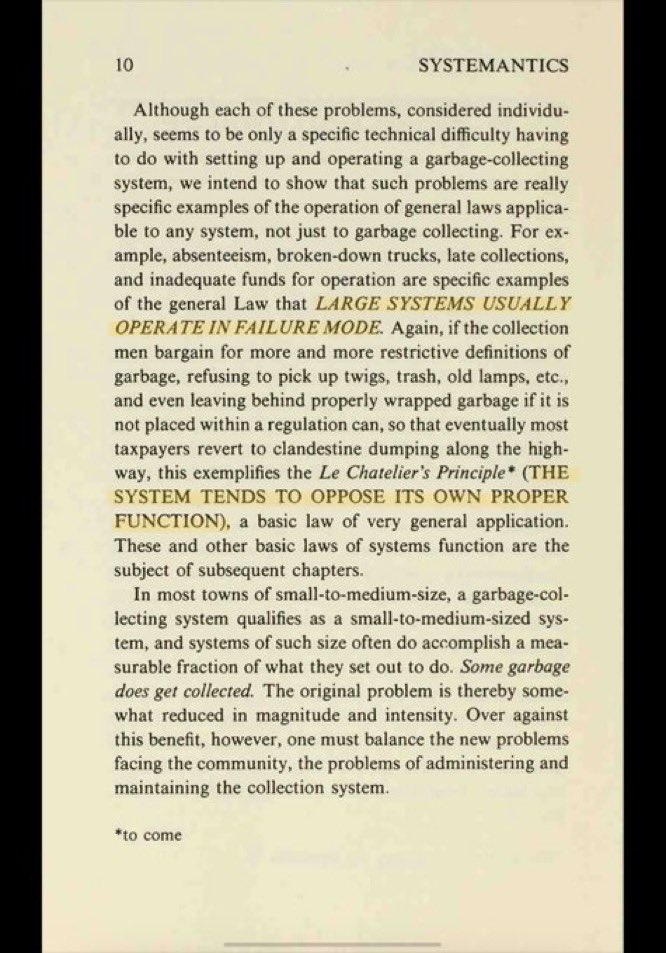

LARGE SYSTEMS USUALLY OPERATE IN FAILURE MODE, via @dangolant

Or like I used to say, your distributed system exists in a continuous state of partial degradation. There are bugs and flakes and failures all the way down, and hardly any of them ever matter. Until they do.

This is why observability matters. SLOs make large multitenant systems tractable from the top down, but observability makes them comprehensible from the bottom up.

Maybe only 0.001% of all software system behaviors and bugs ever need to be closely inspected and understood, but that tiny percentage defines the success of your business and the happiness of your users.

And you CANNOT predict what will matter in advance.

Remember, it's not just about "is this broken?" It is equally about, "how does this work, and what is my user experiencing?"

The more equipped you are to answer the latter, and the more actively you seek those answers out, the less you will experience them as breakage.

Issues get *exponentially* more expensive to track down and fix the longer they go unnoticed. You CANNOT rely on monitoring to find problems at the intersection of your code, your infra, and your users.

If you try, you will doom yourself to a life of reactivity and toil.

We have to learn to be more proactive about examining that intersection. You instrument as you go, merge smaller diffs more often, autodeploy and keep a lid on delivery times.
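
To make "instrument as you go" concrete, here is a minimal sketch of one way to do it: build a single wide, structured event per request and emit it when the work finishes. Everything named here (`request_event`, `EMITTED`, the field names, the build id) is hypothetical and only for illustration. It is not the API of any particular tool.

```python
import json
import time
from contextlib import contextmanager

EMITTED = []  # hypothetical stand-in for a real event pipeline


@contextmanager
def request_event(endpoint, user_id, build_id, sink=EMITTED.append):
    """Collect one wide event for a unit of work and emit it exactly once."""
    event = {"endpoint": endpoint, "user_id": user_id, "build_id": build_id}
    start = time.perf_counter()
    try:
        yield event  # the handler adds whatever context it learns along the way
        event.setdefault("status", 200)
    except Exception as exc:
        event["status"] = 500
        event["error"] = type(exc).__name__
        raise
    finally:
        # Runs on success and on failure, so every request leaves a record.
        event["duration_ms"] = round((time.perf_counter() - start) * 1000, 3)
        sink(json.dumps(event, sort_keys=True))


# Usage: add fields while you write the feature, not after the outage.
with request_event("/search", user_id="u-42", build_id="abc123") as ev:
    ev["normalized_query"] = "SELECT * FROM t WHERE id = ?"
    ev["rows_returned"] = 3
```

The point of the shape is that the fields you will want later (user, build, query) ride along with the timing and status, so you can slice by any of them in production.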

Your job isn't done until you have closed the loop by checking your instrumentation in production.

And not just like, "is it up? is it down?" but rather,

* what is the distribution of response times by normalized query? raw query?

* what is the breakdown of response codes for a particular user for this endpoint?

* are 504s dominated by any userstring, browser, header, etc?
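
Questions like the ones above are just group-bys over structured events. A rough sketch, assuming each request was recorded as a dict with the (hypothetical) fields shown below; the sample data is made up, and a real system would run the same questions against its own telemetry store:

```python
from collections import Counter, defaultdict
from statistics import median

# Made-up sample events: (user_id, status, duration_ms, normalized_query, user_agent)
_ROWS = [
    ("u1", 200, 12.0, "SELECT ? FROM a", "Firefox"),
    ("u1", 200, 18.0, "SELECT ? FROM a", "Firefox"),
    ("u1", 504, 3000.0, "SELECT ? FROM b", "OldBot"),
    ("u2", 504, 3100.0, "SELECT ? FROM b", "OldBot"),
    ("u2", 200, 20.0, "SELECT ? FROM a", "Chrome"),
    ("u3", 504, 2900.0, "SELECT ? FROM b", "Chrome"),
]
EVENTS = [
    {"endpoint": "/search", "user_id": u, "status": s, "duration_ms": d,
     "normalized_query": q, "user_agent": ua}
    for u, s, d, q, ua in _ROWS
]


def latency_by(events, key):
    """Response-time summary (count, median, max) grouped by any field."""
    groups = defaultdict(list)
    for e in events:
        groups[e.get(key)].append(e["duration_ms"])
    return {k: {"count": len(v), "p50": median(v), "max": max(v)}
            for k, v in groups.items()}


def status_breakdown(events, user_id, endpoint):
    """Response codes for one user on one endpoint."""
    return Counter(e["status"] for e in events
                   if e["user_id"] == user_id and e["endpoint"] == endpoint)


def dominant_values(events, status, key):
    """Share of each value of `key` among events with `status`, largest first."""
    hits = Counter(e.get(key) for e in events if e["status"] == status)
    total = sum(hits.values())
    return [(value, count / total) for value, count in hits.most_common()]


print(latency_by(EVENTS, "normalized_query"))
print(status_breakdown(EVENTS, "u1", "/search"))
print(dominant_values(EVENTS, 504, "user_agent"))
```

Swap `key` for a header, a browser, or a raw query and you are asking the next question without shipping new code.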

Only you know what changes you are hoping and expecting to see in the system after your changes roll out. Look for them specifically.
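
One way to "look for them specifically" is to write the expectation down as a check you can run against production events once the deploy is out. A small sketch, assuming events carry a `build_id` field; the field names, build ids, numbers, and the 20% threshold are all invented for the example:

```python
from statistics import median


def compare_builds(events, field, old_build, new_build):
    """Median of `field` per build, plus the relative change from old to new."""
    def med(build):
        values = [e[field] for e in events
                  if e["build_id"] == build and field in e]
        return median(values) if values else None

    old, new = med(old_build), med(new_build)
    # No change is reported if either build has no data or the old median is 0.
    change = (new - old) / old if old and new is not None else None
    return {"old": old, "new": new, "relative_change": change}


# Made-up events from before and after a change meant to speed up /search.
DEPLOY_EVENTS = [
    {"build_id": "old", "duration_ms": 100.0},
    {"build_id": "old", "duration_ms": 120.0},
    {"build_id": "old", "duration_ms": 110.0},
    {"build_id": "new", "duration_ms": 80.0},
    {"build_id": "new", "duration_ms": 90.0},
    {"build_id": "new", "duration_ms": 70.0},
]

result = compare_builds(DEPLOY_EVENTS, "duration_ms", "old", "new")
# The expectation, stated up front: median latency drops by at least 20%.
met_expectation = (result["relative_change"] is not None
                   and result["relative_change"] <= -0.20)
print(result, met_expectation)
```

If the check comes back false, that is not a failed test so much as a prompt to go look at what your change actually did.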

You also need to develop a sixth sense for "something is weird". Which you can only do by checking up on your code in prod daily, habitually.
