Very detailed and wide-ranging decision of the Belgian DPA regarding cookie tracking in relation to (from inference, it's badly anonymised...) the @EDAATweets, the service that runs Your Online Choices (ht @PrivacyMatters) autoriteprotectiondonnees.be/publications/d…

So, the EDAA runs a site called "Your Online Choices", an incredibly little-used, awkward & archaic self-regulatory initiative of the ad industry to try and claim that people have online choices in the absence of them. This website is linked to by ads, and itself places cookies.

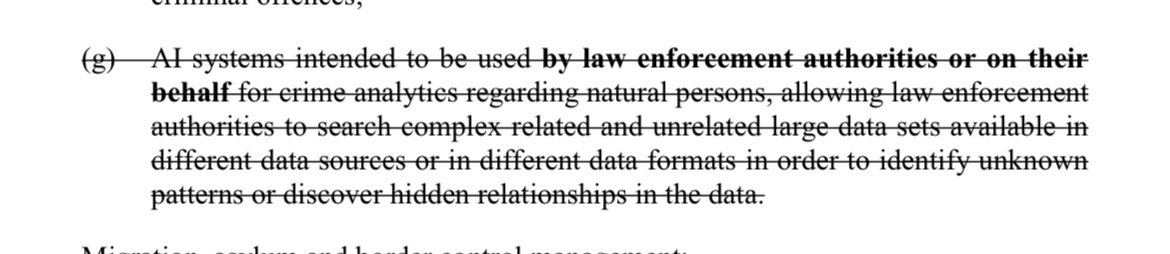

Here are the headline findings. Violation of arts 12-13 GDPR for laying a cookie to test the water for acceptability of cookies before the consent banner was shown.

Violation of art 13 GDPR for just putting a link to a privacy policy rather than displaying concrete and specific information about the cookies used.

No clear violation, just a strong recommendation (?) followed by an order (??), that the countries data is being transferred to need to be included. Likely because the register is "based on a European regulator's model" so the Belgian DPA doesn't want to start a fight...?

No breach of a cookie wall ban as you could browse the website with strictly necessary cookies only, but the Belgian DPA makes it clear that it will take a dim view of cookie walls for purposes that are not strictly necessary.

Ultimately the latter was seemingly predictable because the website wasn't contingent on using non-necessary cookies (unless of course you consider its structural role in enabling them throughout the whole adtech industry, but DP doesn't do structural roles well...)

It seems this decision mostly fiddles with the information requirements a bit. A real question would be whether or not a cookie banner could ever contain too many actors, or too much info, to ever consent to. There are indications in this decision that the Belgian DPA may think it could.

Oh, also worth noting that this is a cross-border case: it was passed over from a data subject in Germany, and the EDAA is headquartered in Brussels.
