Significant news for the AI Act from the Commission as it proposes its new Standardisation Strategy, which involves amending the 2012 Standardisation Regulation (Regulation (EU) No 1025/2012). Remember: private standard-setting bodies (CEN/CENELEC/ETSI) are the key entities in the AI Act that determine the final rules. 🧵

Firstly, the Commission acknowledges that standards increasingly touch not only on technical issues but on European fundamental rights (although it doesn't highlight the AI Act here). This has long been the elephant in the room: the accusation that the EC delegates rule-making to private bodies.

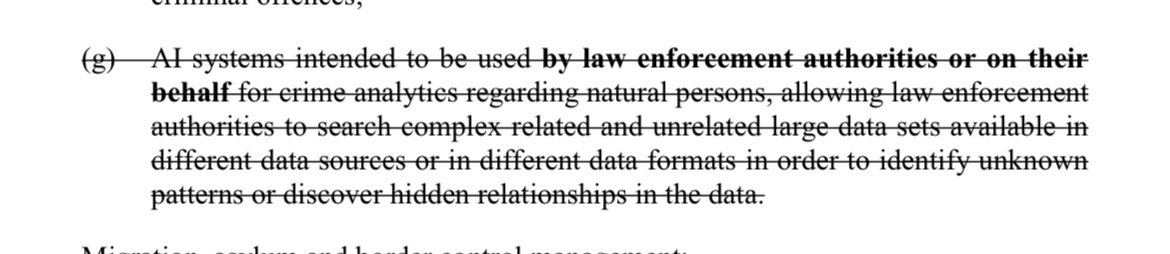

They point to the CJEU's James Elliot judgment in this respect (see 🖼), in which the Court brought the interpretation of harmonised standards (created by private bodies!) within the scope of preliminary references. They could also have mentioned Fra.Bo and Commission v Germany.

The EC notes that governance in these European Standardisation Bodies is outrageous. They point out that in ETSI, which deals with telecoms and more, industry capture is built in: civil society votes are "barely countable" and Member States have "circa 2%" of the vote. The rest is industry.

The Commission has not officially confirmed who will be mandated to make the standards for the AI Act (in slides I've seen, they lean more towards CEN/CENELEC), but ETSI certainly want a role in standardising the technology.

Next, there's a big geopolitical change. In a short revision to the Regulation, the Commission proposes an amendment that would exclude non-EU/EEA standards bodies from voting on any standards relating to the AI Act. Sorry, @BSI_UK: no more formal influence post-Brexit.

This also pushes out the limited formal influence of European civil society organisations, even the three that the Commission mandates and pays to take part in standardisation, representing consumer, social/trade union and environmental interests: @anectweet, @ETUI_org and ECOS.

For societal interests to have a say on the AI Act, the Commission thus now relies on *Member State standardisation bodies* being sufficiently representative of those interests, and on those interests in turn being sufficiently attuned to highly technical, European-level policy processes. This seems a stretch.

The EC does hang a Sword of Damocles over European standards bodies: get your house in order and fix your wonky, broken governance, or we'll regulate you more directly. But I doubt this will have a significant effect, and this isn't the first time they've made this threat.

In New Legislative Framework regulations like the AI Act (where standards can be used to demonstrate compliance), the EC can usually replace standards with Common Specifications, adopted as implementing acts, but rarely does so.

While EC executive action wouldn't automatically be democratic, and a serious co-regulatory process would be needed, the common specification process seems better suited to the fundamental-rights-charged areas of the AI Act than delegating to industry-captured standards bodies.

The EC proposes to develop a framework for deciding when to use common specifications. This seems an opportunity for the Parliament to push for an inclusive process for drafting them where standards touch on fundamental rights and freedoms, rather than delegating to the European Standardisation Organisations (ESOs).

Link to package: ec.europa.eu/commission/pre…
