The FUZZING'22 Workshop is organized by:

* Baishakhi Ray (@Columbia)

* Cristian Cadar (@ImperialCollege)

* László Szekeres (@Google)

* Marcel Böhme (@MPI_SP)

Artifact Evaluation Committee Chair (Stage 2)

* Yannic Noller (@NUSComputing)

Baishakhi (@baishakhir) is well known for her work on AI4Fuzzing & Fuzzing4AI. More broadly, she aims to improve software reliability & developer productivity. Her research has been recognized with an NSF CAREER Award, several Best Paper awards, and industry awards.

Cristian Cadar (@c_cadar) is the world's leading researcher in symbolic execution and is best known for KLEE (@KLEEsymex). Cristian holds an ERC Consolidator Grant, is an ACM Distinguished Member, and has received many awards, most recently the IEEE CS TCSE New Directions Award.

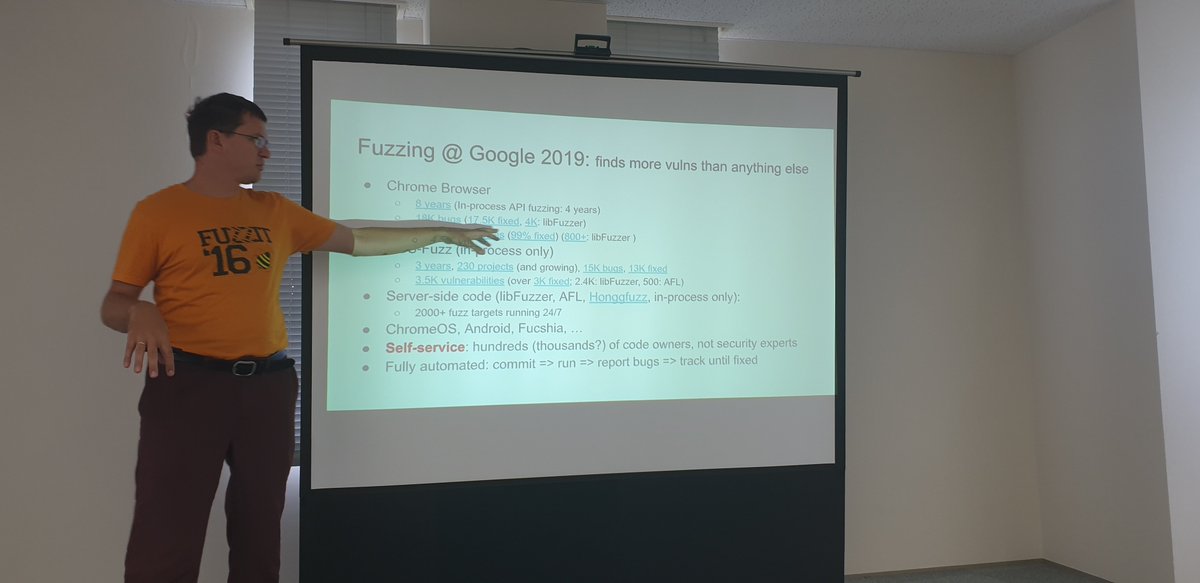

László Szekeres (@lszekeres) is passionate about software security, where he wages the "Eternal War in Memory" (SoK). He develops tools & infrastructure that protect against security bugs, such as AFL's LLVM mode and the FuzzBench fuzzer evaluation platform.

Yannic Noller (@yannicnoller) works on fuzzing and automated program repair and is interested in software reliability, trustworthiness, and security. Yannic was named a Distinguished Artifact Reviewer at ISSTA'21 and will organize the artifact evaluation for our Stage 2.