Good Morning!

I tried to use text-to-image models to combine historical architecture with other locations around the world.

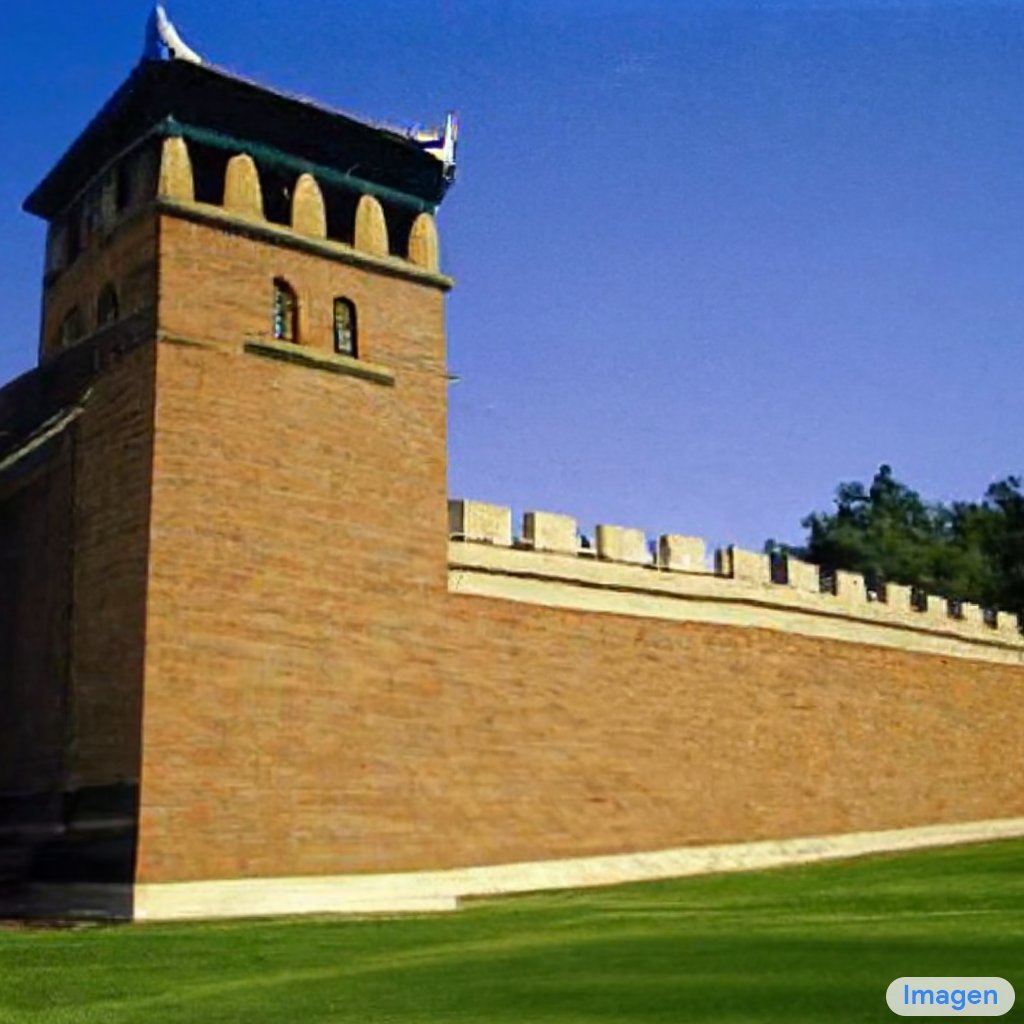

Here is “The Great Wall of San Francisco” by #Imagen

🧵Thread👇🏽

“The Great Wall of Africa” by #Imagen

“The Great Wall of Germany” by #Imagen

Looks like Germany. It also added European-style houses and put roofs on the towers. 🇩🇪

“The Great Wall of Dubai” by #Imagen

To be fair, the original Great Wall also had sections that ran through sandy deserts. But here it sometimes worked local elements into the design of the wall:

This blending also kind of works for concepts (like “Money”) rather than geographical locations!

Here’s “The Great Wall of Money” by #Imagen to give you some motivation as you look at the value of your stonks and crypto portfolios (cc @wallstreetbets):

Stepping back into the real world, here’s “The Great Wall of London” by #Imagen 🇬🇧

Finally, here’s “The Great Wall of Hong Kong” to end this thread. 🧵

#Imagen decided to replace all of the beautiful hiking trails in #HongKong’s country parks with the Great Wall.

I have mixed feelings about it… 🙃

Where’s the Great Wall in the Desert?

Other prompts worked though:

“The Great Wall of Ghana” by #Imagen
