Took a face made in #stablediffusion and animated it with the Thin-Plate Spline Motion Model, driven by a video of a #metahuman in #UnrealEngine5, then used GFPGAN to fix and upscale the face. Breakdown follows: 1/9 #aiart #ai #aiArtist #MachineLearning #deeplearning #aiartcommunity #aivideo #aifilm

First, here's the original video in #UE5. The base model was actually from @daz3d #daz and I used Unreal's #meshtometahuman tool to make a #metahuman version. 2/9 #aiartprocess

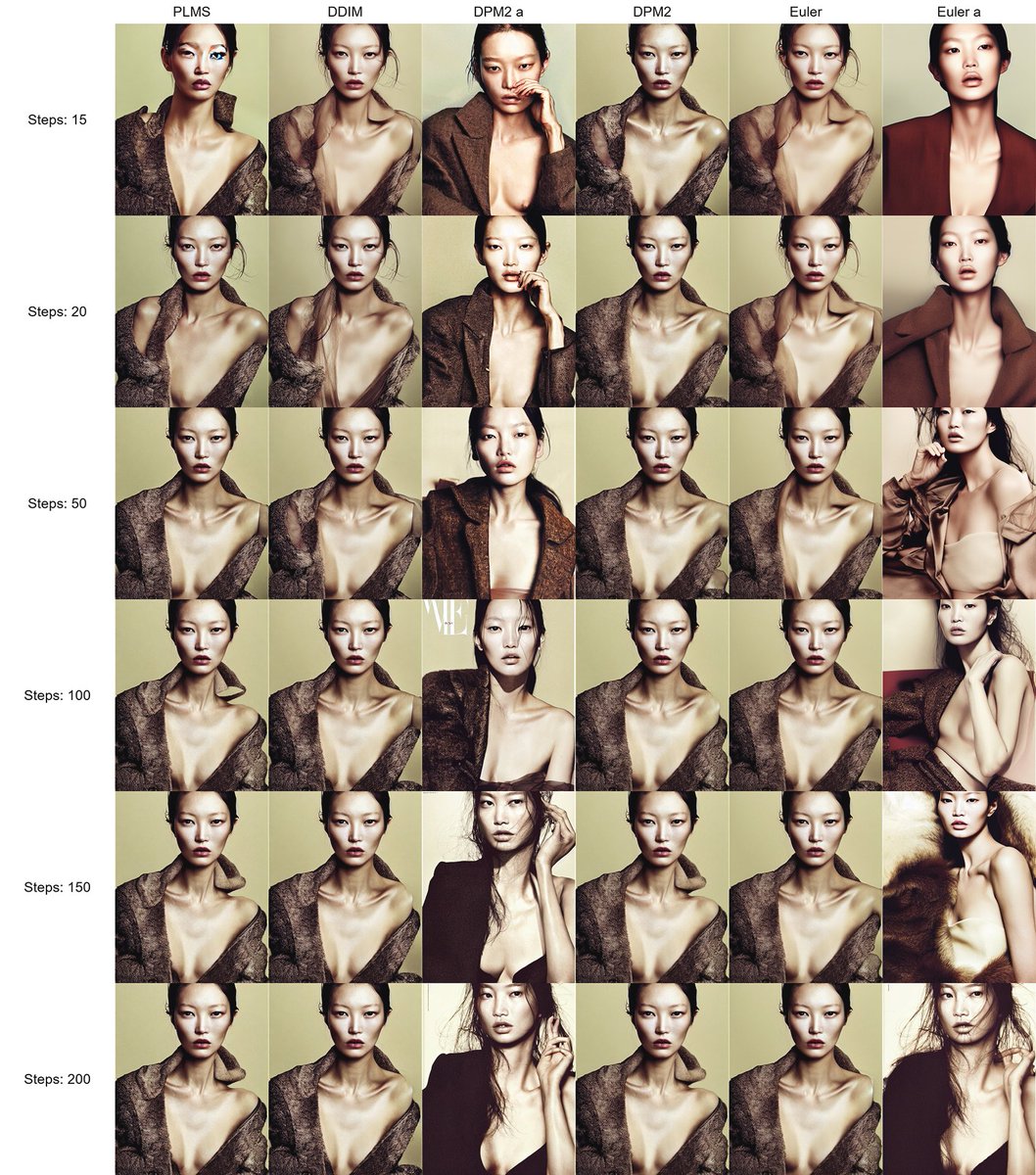

Then I took a single still frame from that video and ran it through #img2img in a local instance of #stablediffusion WebUI. After generating a few options I ended up with this image. 3/9 #aiartprocess
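
The thread did this step by hand in the WebUI. As a hedged sketch only, the same two steps (grab one still, then run img2img) can be scripted. It assumes ffmpeg is on the PATH and that the WebUI is AUTOMATIC1111's, launched with its `--api` flag so it serves `/sdapi/v1/img2img` on the default port 7860. The file names, prompt, and parameter values are hypothetical placeholders, not the settings used here.

```python
import base64
import json
import urllib.request


def extract_frame_cmd(video_path: str, timestamp: str, out_png: str) -> list[str]:
    """Build an ffmpeg command that saves the single frame at `timestamp`."""
    return ["ffmpeg", "-ss", timestamp, "-i", video_path, "-frames:v", "1", out_png]


def img2img_payload(png_bytes: bytes, prompt: str, denoising_strength: float = 0.5,
                    steps: int = 30, seed: int = -1) -> dict:
    """Build a JSON payload for the (assumed) AUTOMATIC1111 img2img endpoint."""
    return {
        "init_images": [base64.b64encode(png_bytes).decode("ascii")],
        "prompt": prompt,
        "denoising_strength": denoising_strength,  # lower keeps more of the source frame
        "steps": steps,
        "seed": seed,  # -1 lets the WebUI pick a random seed for each option
    }


def run_img2img(payload: dict,
                url: str = "http://127.0.0.1:7860/sdapi/v1/img2img") -> list[bytes]:
    """POST the payload and decode the returned images (needs a running WebUI)."""
    req = urllib.request.Request(url, data=json.dumps(payload).encode("utf-8"),
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return [base64.b64decode(img) for img in body["images"]]
```

Calling `run_img2img` a few times with `seed=-1` mirrors "generating a few options" before picking one.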

Next, I used the Thin-Plate Spline Motion Model to animate the img2img result from #stablediffusion with the driving video from #UnrealEngine5. 4/9 #aiartprocess

github.com/yoyo-nb/Thin-P…
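
A minimal sketch of this step, assuming the repository's `demo.py` script with the `--config`, `--checkpoint`, `--source_image` and `--driving_video` options and the face-model files `config/vox-256.yaml` / `checkpoints/vox.pth.tar` (check `python demo.py --help` in your checkout). The vox-256 model works at 256 x 256, which matches the output size in the next tweet. The repository's README also recommends cropping the source image and driving video to the face first.

```python
import subprocess
import sys
from pathlib import Path


def tpsmm_demo_cmd(source_image: str, driving_video: str,
                   config: str = "config/vox-256.yaml",
                   checkpoint: str = "checkpoints/vox.pth.tar") -> list[str]:
    """Build a demo.py call; config/checkpoint paths are relative to the repo root."""
    return [
        sys.executable, "demo.py",
        "--config", config,
        "--checkpoint", checkpoint,
        # Absolute paths so the inputs resolve even though we run inside the repo.
        "--source_image", str(Path(source_image).resolve()),    # the img2img still
        "--driving_video", str(Path(driving_video).resolve()),  # the UE5 MetaHuman render
    ]


def run_tpsmm(cmd: list[str], repo_dir: str) -> None:
    # Run from the cloned repo root; raises CalledProcessError if demo.py fails.
    subprocess.run(cmd, cwd=repo_dir, check=True)
```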

The result is 256 x 256 pixels. 5/9 #aiartprocess

Here's a split screen highlighting the consistency and coherence between the driving video and the results. I upscaled the video a bit using #TopazVideoEnhance. Notice the quality of the blinking and the coherence of the "birthmark" under her left eye. 6/9 #aiartprocess
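
The thread doesn't say how the split screen was assembled. One way, sketched here as an assumption, is ffmpeg's `hstack` filter. `hstack` needs equal heights, so both inputs are first scaled to a shared (hypothetical) height. This is plain resampling and does not reproduce the Topaz upscale.

```python
def split_screen_cmd(left: str, right: str, out: str, height: int = 512) -> list[str]:
    """Build an ffmpeg command placing two videos side by side at one height."""
    if height % 2:
        raise ValueError("height must be even for yuv420p output")
    # scale=-2:H keeps the aspect ratio and rounds the width to an even number.
    filters = (f"[0:v]scale=-2:{height}[a];"
               f"[1:v]scale=-2:{height}[b];"
               f"[a][b]hstack=inputs=2[v]")
    return ["ffmpeg", "-i", left, "-i", right, "-filter_complex", filters,
            "-map", "[v]", "-c:v", "libx264", "-pix_fmt", "yuv420p", out]
```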

Good as this was, there were some artifacts in the eyes I wanted to reduce, so I converted the video to an image sequence and used a local instance of GFPGAN to batch-process all the frames. This improved the eyes and sharpened the image while enlarging it 4x (1st video). 7/9 #aiartprocess
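
A hedged sketch of the frames → GFPGAN → video round trip. It assumes ffmpeg on the PATH and GFPGAN's `inference_gfpgan.py` with `-i`/`-o` (input/output folders), `-v` (model version) and `-s` (final upscale factor). Folder names and the frame rate are hypothetical. GFPGAN should write the full restored frames to a `restored_imgs` subfolder of the output folder, but verify this in your version. At `-s 4`, the 256 x 256 frames come out at 1024 x 1024.

```python
import sys
from pathlib import Path


def to_frames_cmd(video: str, frame_dir: str) -> list[str]:
    # Zero-padded names keep the frames in order for the reassembly step.
    return ["ffmpeg", "-i", video, str(Path(frame_dir) / "%05d.png")]


def gfpgan_cmd(frame_dir: str, out_dir: str, upscale: int = 4,
               version: str = "1.3") -> list[str]:
    # Run from the GFPGAN repo root; pass absolute folders if they live elsewhere.
    return [sys.executable, "inference_gfpgan.py", "-i", frame_dir, "-o", out_dir,
            "-v", version, "-s", str(upscale)]


def to_video_cmd(frame_dir: str, fps: int, out: str) -> list[str]:
    # fps must match the source video, or the motion plays back at the wrong speed.
    return ["ffmpeg", "-framerate", str(fps), "-i", str(Path(frame_dir) / "%05d.png"),
            "-c:v", "libx264", "-pix_fmt", "yuv420p", out]


def upscaled_size(width: int, height: int, factor: int) -> tuple[int, int]:
    return width * factor, height * factor
```

For example, `upscaled_size(256, 256, 4)` returns `(1024, 1024)`.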

GFPGAN slightly changed the alignment of some features, for reasons I haven't pinned down, and shifted the color. It also faded the birthmark a bit. I'm not yet sure how to control the strength of the effect locally. GFPGAN link below. 8/9 #aiartprocess

github.com/TencentARC/GFP…
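
On controlling strength: one generic workaround, not a GFPGAN feature, is to blend each restored frame back over a copy of the pre-GFPGAN frame resized to the same 4x size, and to pull each color channel's mean and spread back toward that original. Lower strength should keep more of the original birthmark. This does not undo the alignment shifts. To stay dependency-free, frames here are hypothetical flat lists of RGB tuples (0-255); in practice you would load and resize them with an image library.

```python
from statistics import fmean, pstdev

Pixel = tuple[float, float, float]


def match_color(restored: list[Pixel], reference: list[Pixel]) -> list[Pixel]:
    """Per-channel mean/std transfer of `reference` statistics onto `restored`."""
    channels = []
    for c in range(3):
        src = [p[c] for p in restored]
        ref = [p[c] for p in reference]
        s_mean, s_std = fmean(src), pstdev(src)
        r_mean, r_std = fmean(ref), pstdev(ref)
        scale = r_std / s_std if s_std > 0 else 1.0  # flat channel: shift only
        channels.append([(v - s_mean) * scale + r_mean for v in src])
    # Reassemble pixels and clamp to the valid 0-255 range.
    return [tuple(min(255.0, max(0.0, ch[i])) for ch in channels)
            for i in range(len(restored))]


def blend(original: list[Pixel], restored: list[Pixel], strength: float) -> list[Pixel]:
    """strength=0 keeps the original frame; strength=1 keeps the GFPGAN output."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    if len(original) != len(restored):
        raise ValueError("frames must be the same size; resize the original first")
    return [tuple(o + strength * (r - o) for o, r in zip(po, pr))
            for po, pr in zip(original, restored)]
```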

There are still some artifacts here and there, but it's pretty good considering the look comes from a single keyframe. I'll keep experimenting to improve on this initial workflow. Hope this thread gives you some ideas! 9/9 #aiartprocess