How to get a post's URL in the X (Twitter) app

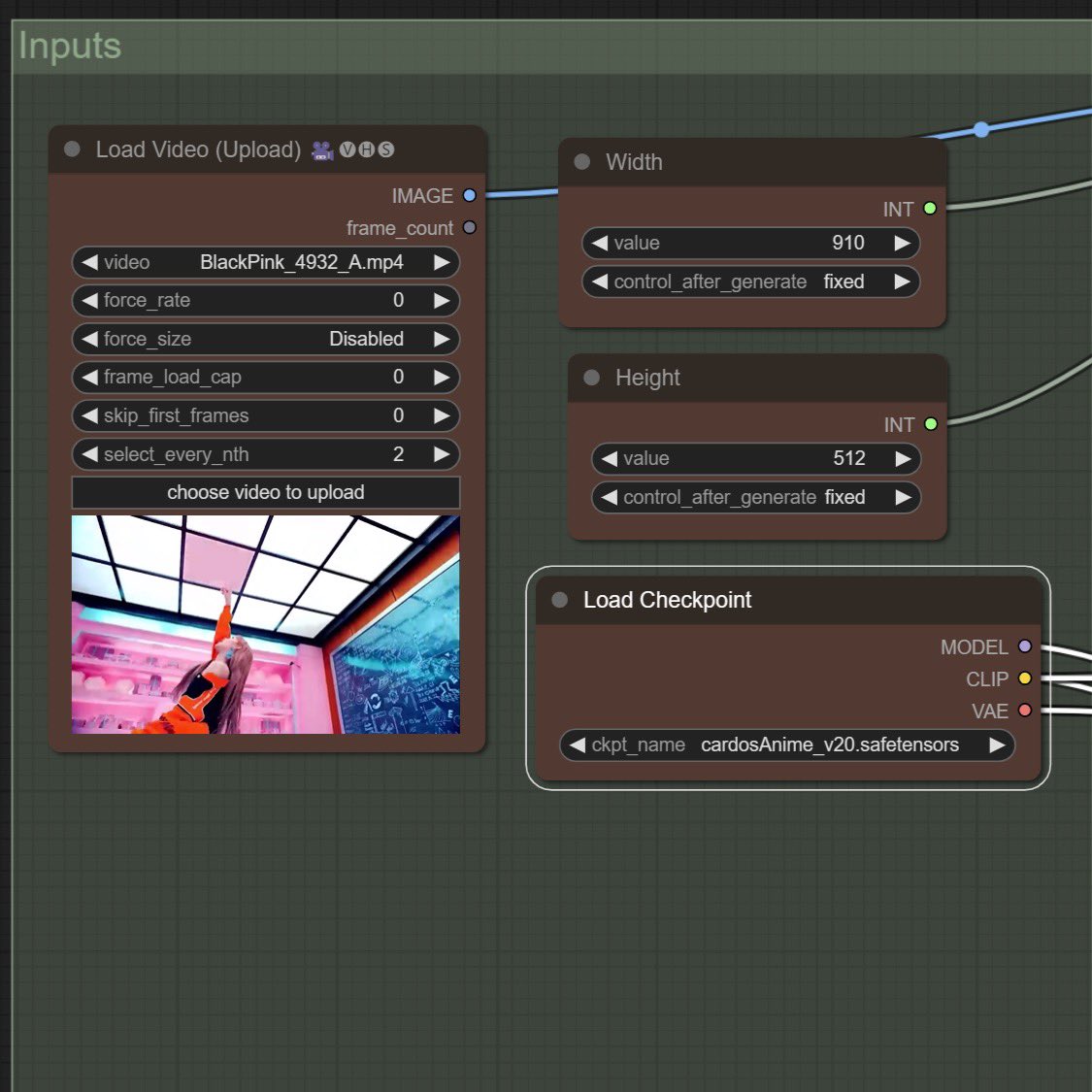

I imagine if you're coming to AI art from a different set of creative fields and client expectations, you'd approach the latent space from a different collection of frameworks and processes. Very curious how different a UI/UX catering to painters could look. 2/7 #aiartprocess
