Remember who was scared of covid early, correct about its risks and correct about what we should have done about it (shutting down all flights into Europe on that day in Jan 2020 would likely have saved hundreds of thousands of lives)

https://twitter.com/RokoMijic/status/1221566557320634369

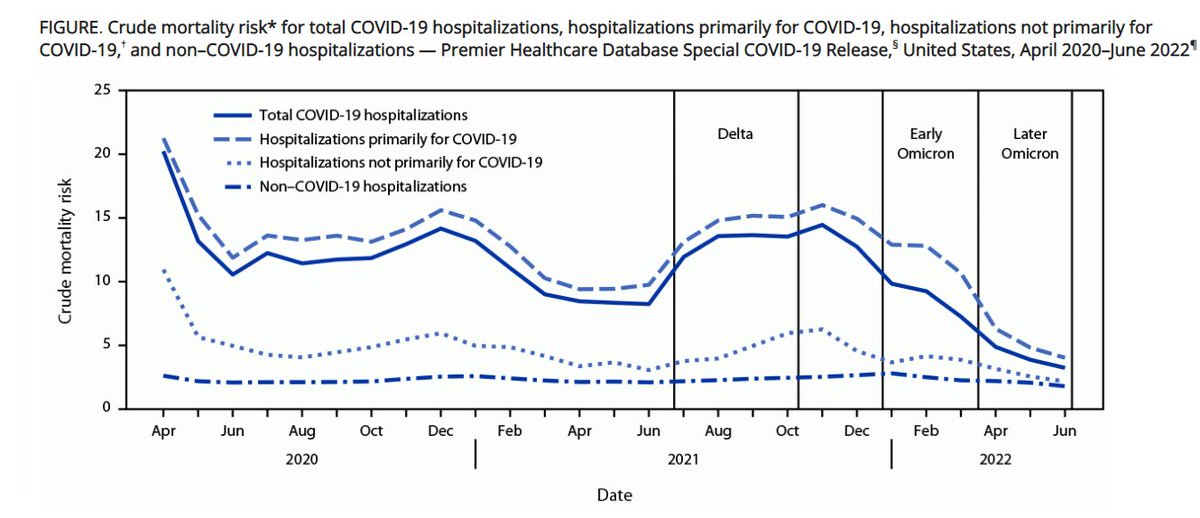

We know that #covid19 mortality was only high for a limited time: once we got to the omicron variant it dropped a lot, plus we developed vaccines and drugs like Paxlovid.

We actually only needed to hold out for 2 years, which was doable by shutting down travel.

There's absolutely no sense in accelerating into a crisis and doing more of the thing that's harmful. In Jan 2020, that thing was importing covid viral particles into The West on planes.

Within 12 months of the start, we had vaccines and Paxlovid. And those vaccines could have been deployed to older people earlier. Scaling up vaccine production could have happened fast enough to protect the most important groups at some point in 2020.

Another thing that I think people are missing is the uncertainty aspect.

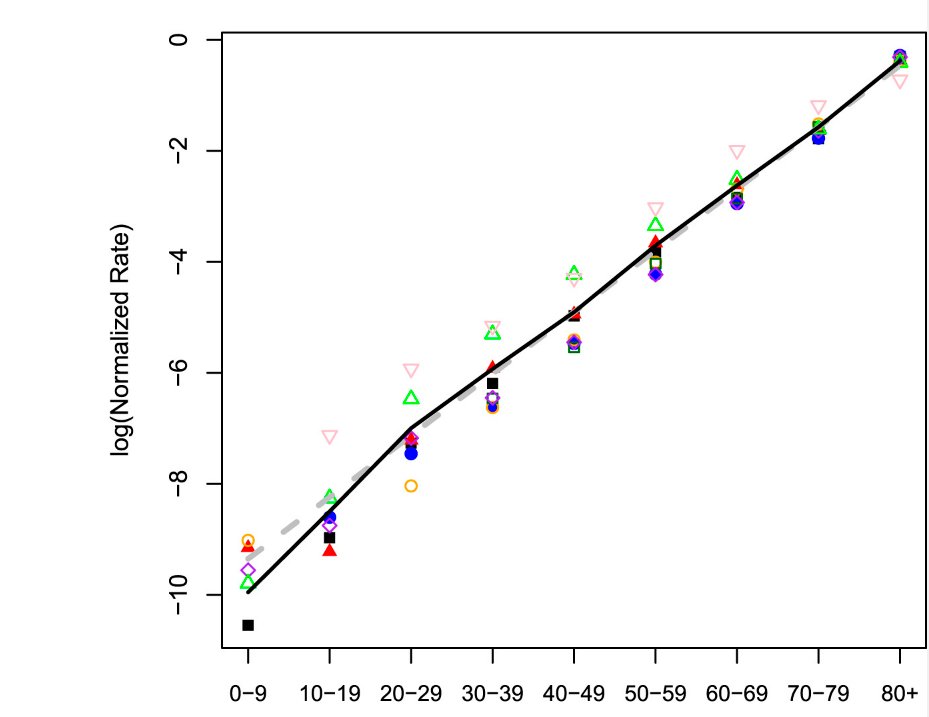

In early Jan 2020 when we got the first inklings of covid, we did not know how deadly it would be, and we also didn't know that it would basically only affect the old.

If you ban travel in Jan 2020, you can get to May 2020 and then decide that on balance it isn't that bad and you can deliberately infect the population. You could even do rolling vaccinations and infections so that no region gets infected before vaccinations.

By not acting, we gave up all those options and just had to deal with the virus as it was, which, as I predicted, was not the end of the world.

https://twitter.com/RokoMijic/status/1221566912842342401

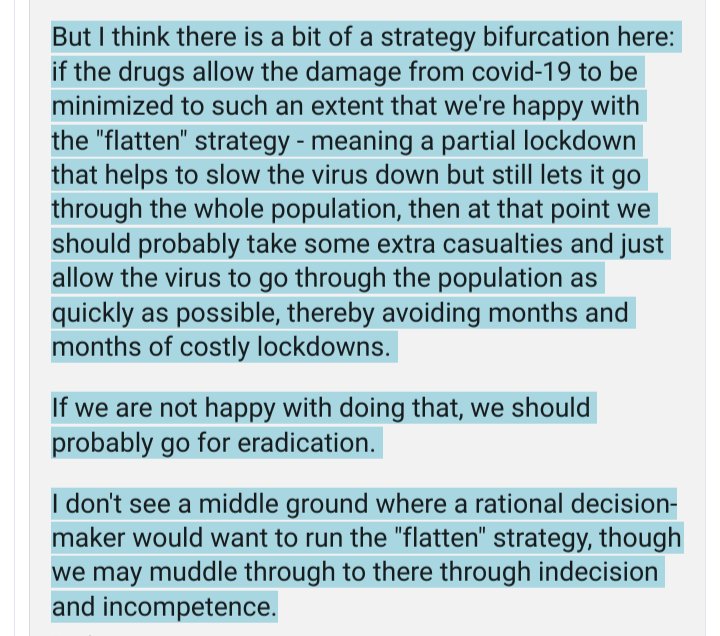

Also, since some people are having reading comprehension problems: I am and was against "lockdowns" i.e. local daily life bans. Here's an article in March 2020 saying that:

lesswrong.com/posts/Ddgry4k6…

"we should probably take some extra casualties and just allow the virus to go through the population as quickly as possible, thereby avoiding months and months of costly lockdowns."

It's right there, people. ☝️
