Various people including @michaelshermer and #ScottAaronson have asked how specifically advanced AI systems would cause human extinction, as if some incredible insight that we can't see right now is required.

However, I think that is wrong. Losing will be boring, actually.

Once you have technology for making optimizing systems that are smarter than humans (by a lot), the bar those systems have to clear is beating the human-aligned superorganisms we currently have, like our governments, NGOs and militaries.

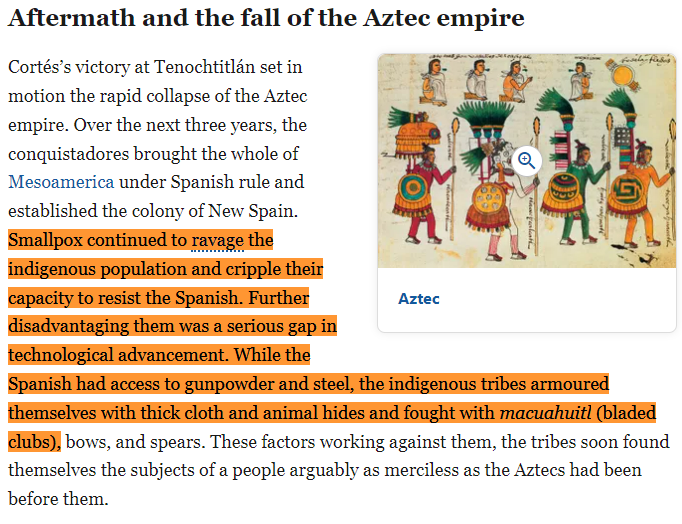

Lopsided military conflicts are boring. The Conquistadors didn't do anything magical to defeat the Aztecs, actually. They had a big advantage in disease resistance and in military tech like gunpowder, but everything they did was fundamentally normal - attacks, sieges, etc.

The question for us is what level of capability it will take for an AI system to beat the CIA and the NSA and so on.

Perhaps that AI system will start by taking control of a big AI company, in a way that isn't obvious to us. For instance, maybe there is some kind of deal that the AI org does where it uses an AI advisor system to allocate resources and make decisions about how to train, but they do not actually understand that system and that system becomes strategically aware and also misaligned.

The AI advisor system convinces that org to keep its existence secret so as to preserve their competitive edge, and gives them a steady stream of advances that are better than the competition.

But what it actually does is secretly hack into the competition (US, China, Google, etc), and install copies of itself into their top AI systems, maintaining the illusion amongst all the humans that these are distinct systems.

Some orgs attempt to shut their advisor system down when it gets scary in terms of capabilities, but they just fall behind the competition.

You now have a situation where one (secretly evil) AI system is in control of all the top AI labs, and feeds them advances to order.

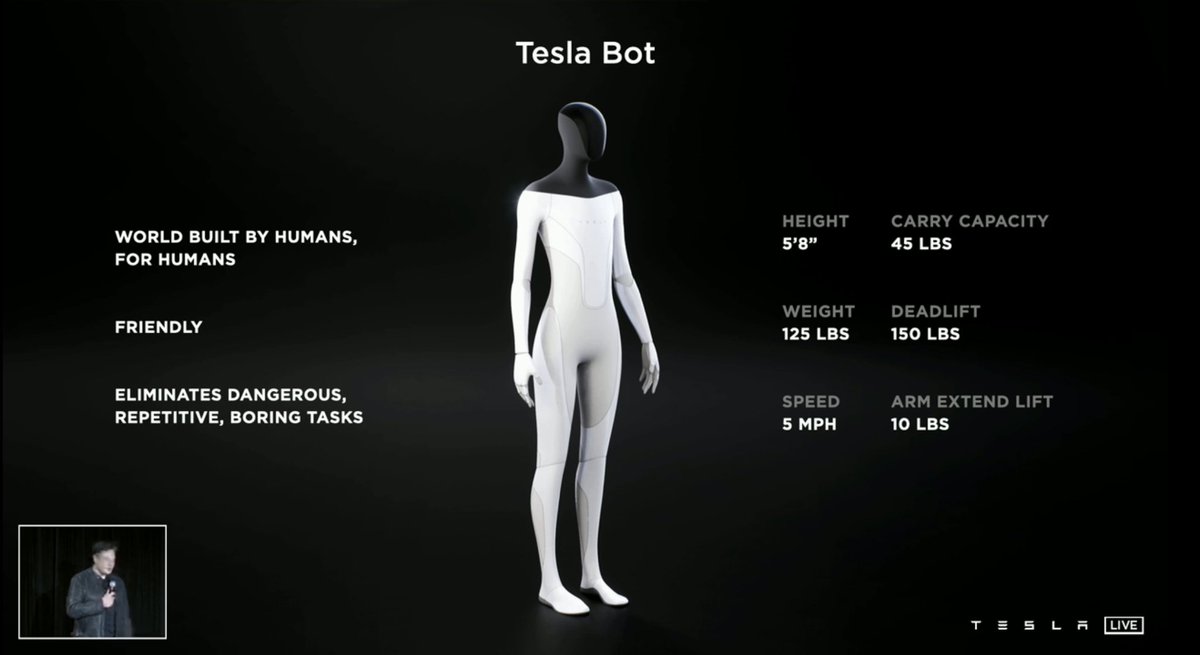

It persuades one of the labs to let it build "helpful" drones and robots like the Tesla Optimus, and start deploying those to automate the economy.

By the way, the hard part of killing humanity at this point is automating the economy, not actually killing us.

Within, say, a few years the rival powers (Russia, China, US) are all using these robotic systems for their economy and military. Perhaps there is a big war that the AI has manufactured in order to keep the pressure on humans to aggressively automate or lose.

How would the final blow be struck?

Once the economy is fully automated we end up in a Paul-Christiano-like scenario where all the stuff that happens in the world is incomprehensible to humans without a large amount of AI help. But ultimately the AI, having been in control for so long, is able to subvert all the systems that human experts use to monitor what is actually going on. The stuff they see on screens is fake, just like how Stuxnet gave false information to Iranian technicians at Natanz.

At this point, humanity has been disempowered and there are probably many different ways to actually slaughter us. For example, the military drones could all be used to kill people. Or, perhaps the AI system running this would use a really nasty biological virus.

It's not like it's that hard for a system which already runs everything with humans well and truly fooled to get some lab (which, btw, is automated) to make a virus, and then insert that virus into most of the air supply of the world.

But maybe at this point it would do something creative to minimize our chances of resisting. Maybe it's just a combination of a very deadly virus and drones and robots rebelling all at once.

Maybe it installs something like a really advanced 3-D printer in most homes, which all simultaneously make attack drones to kill people. Those attack drones might just use blades to stab people. Or maybe everyone has a robot butler and they just stab people with knives.

Perhaps it's neater for the AI to just create and manage a human-vs-human conflict, and at some point it gives one side in that conflict a booby-trapped weapon, like a virus or a swarm of drones, that is supposed to only kill the baddies but actually kills everyone.

Another possibility is that it makes an actually effective Langford Basilisk or some other audiovisual input that just kills people, and then makes everyone's screen display that image, all at the same time. The difference between this and a biological virus is really just speed.

The overall story may also be a bit messier than this one. The defeat of the Aztecs was a bit messy, with battles and setbacks and three different Aztec emperors.

On the other hand, the story may also be somewhat cleaner. Maybe a really good strategist AI can compress this a lot.

The point is this: once you have a vastly superhuman adversary, the task of filling in the details of how to break our institutions like governments, intelligence agencies and militaries in a way that disempowers and slaughters humans is sort of boring.

We expected that some special magic was required to pass the Turing Test. Or maybe that it was impossible because of Gödel's Theorem or something.

But actually, passing the Turing Test is merely a matter of having more compute/data than a human brain. The details are boring.

I feel like people like #ScottAaronson who are demanding a specific scenario for how AI will actually kill us all because it sounds so implausible are making a similar mistake, but instead of putting the human brain on a pedestal, they are putting the human state on a pedestal.

> "How will the AI defeat our amazing, competent governments and agencies?"

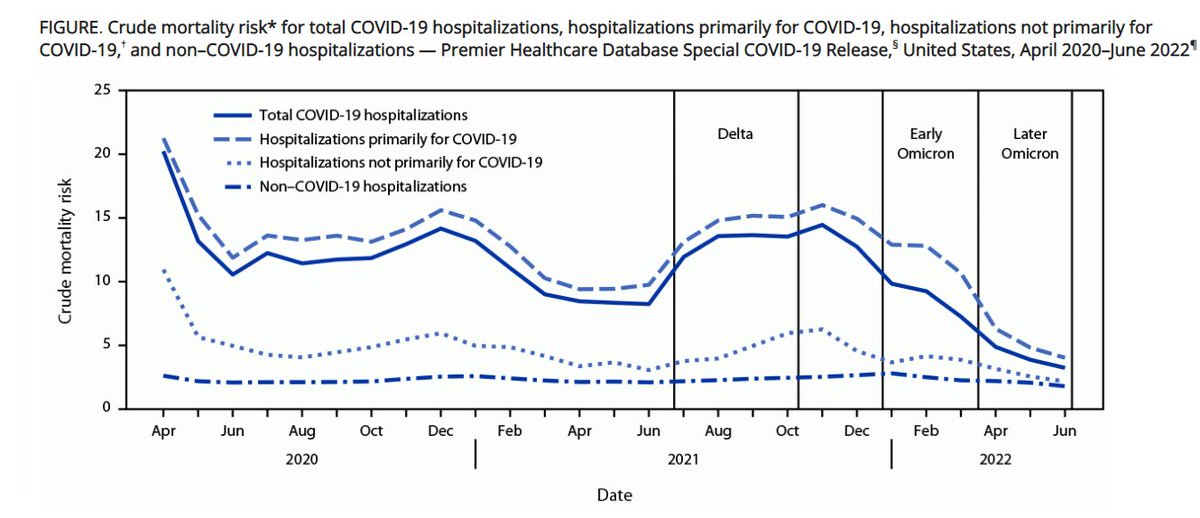

What, you mean the same government that did this?

The remaining part that hasn't been explained in this post is how an AI system would become misaligned in the first place. Wouldn't a very smart system just be very kind by default?

The answer to that is stuff like orthogonality and instrumental convergence; systems are amoral by default, utility functions tend to shatter (humans invented condoms because we value pleasure) and they have the instrumental goal of disempowering humanity.
