1/ 🌐 Web Scraping and Text Mining in R: Unlocking Insights 🔍 Learn advanced web scraping techniques and text mining tools to extract valuable insights from online data. #rstats #AdvancedR #TextMining #DataScience

2/ 🕸️ Web Scraping: Extract data from websites using powerful R tools:

•rvest for HTML scraping and parsing

•httr for managing HTTP requests

•xml2 for handling XML and XPath queries

•RSelenium for scraping dynamic web content

#rstats #datascience #AdvancedR
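
A minimal sketch of the rvest step, assuming a tiny inline page in place of a real site (the HTML, the `li.post` selector, and the `posts` name are made up for illustration):

```r
library(rvest)

# Inline HTML stands in for a downloaded page (hypothetical content).
page <- minimal_html('
  <ul>
    <li class="post"><a href="/a">First post</a></li>
    <li class="post"><a href="/b">Second post</a></li>
  </ul>')

# Select every link inside a list item with class "post".
links <- html_elements(page, "li.post a")

# Collect the link text and the href attribute into a data frame.
posts <- data.frame(
  title = html_text2(links),
  url   = html_attr(links, "href")
)
print(posts)
```

For a live site you would likely swap `minimal_html()` for `read_html()` on a URL; the selectors depend entirely on that site's markup.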

3/ 🧪 Advanced Web Scraping Techniques: Go beyond basic scraping with:

•Setting up custom headers and cookies with httr

•Handling pagination and infinite scrolling

•Throttling requests to avoid getting blocked

•Using proxy servers to bypass restrictions

#rstats #AdvancedR
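
One way the header, cookie, and throttling ideas could fit together, as a sketch only. `fetch_pages()` is a hypothetical helper, and the user agent string, cookie value, and two-second delay are placeholder assumptions, not recommendations from the thread:

```r
library(httr)

# Hypothetical helper: request each URL in turn, pausing between requests.
# `fetch` is a parameter so the request logic can be swapped or tested offline.
fetch_pages <- function(urls, delay = 2, fetch = function(u) {
  GET(
    u,
    user_agent("my-scraper (contact@example.com)"),  # placeholder identity
    add_headers(Accept = "text/html"),               # custom header
    set_cookies(session = "abc123")                  # placeholder cookie
  )
}) {
  results <- vector("list", length(urls))
  for (i in seq_along(urls)) {
    results[[i]] <- fetch(urls[[i]])
    # Throttle: wait before the next request, but not after the last one.
    if (i < length(urls)) Sys.sleep(delay)
  }
  results
}
```

Simple pagination then amounts to building the URL vector first, for example `fetch_pages(paste0("https://example.com/posts?page=", 1:3))`, where the URL pattern is again an assumption about the target site.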

4/ 📚 Text Processing: Clean and preprocess text data using:

•stringr for string manipulation

•tidyr for reshaping and cleaning text data

•tm (Text Mining) package for managing text corpora

•quanteda for advanced text processing

#rstats #AdvancedR #datascience
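
A small cleaning pass with stringr might look like this. The sample strings and the exact rules (strip tags, lowercase, keep only letters and hyphens) are assumptions chosen for illustration:

```r
library(stringr)

# Made-up scraped snippets with stray markup, punctuation, and spacing.
raw <- c("  Hello,   World!! ", "R is <b>GREAT</b> for text-mining...")

clean <- str_replace_all(raw, "<[^>]+>", " ")          # drop HTML tags
clean <- str_to_lower(clean)                           # normalise case
clean <- str_replace_all(clean, "[^a-z\\s\\-]", " ")   # keep letters, spaces, hyphens
clean <- str_squish(clean)                             # collapse and trim whitespace

print(clean)
# "hello world" "r is great for text-mining"
```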

5/ 🤖 Natural Language Processing: Analyze text data with powerful NLP techniques using:

•tidytext for text analysis in the tidyverse

•sentimentr for sentiment analysis

•spacyr for part-of-speech tagging and dependency parsing

•topicmodels for topic modeling

#rstats
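
As a rough sketch of the tidytext workflow: split text into one word per row, drop stop words, and count what is left. The two sample sentences and the column names are invented for the example:

```r
library(dplyr)
library(tidytext)

# Two made-up documents.
docs <- data.frame(
  id   = 1:2,
  text = c("Scraping the web is fun",
           "Text mining turns the web into data"),
  stringsAsFactors = FALSE
)

word_counts <- docs %>%
  unnest_tokens(word, text) %>%           # one lowercase token per row
  anti_join(stop_words, by = "word") %>%  # remove common stop words
  count(word, sort = TRUE)                # word frequencies, highest first

print(word_counts)
```

The same one-token-per-row table is a common starting point for the sentiment and topic-modeling packages listed above.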

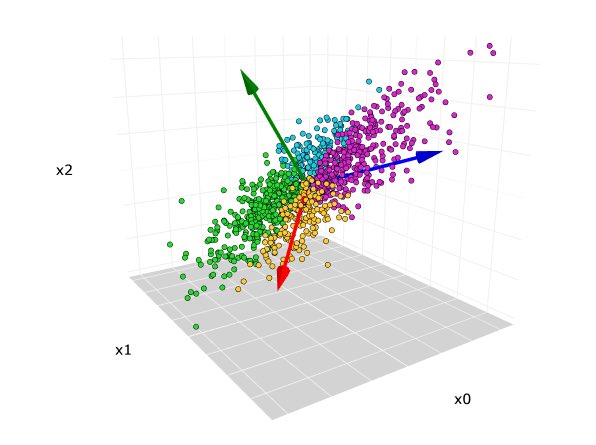

6/ 💡 Advanced Text Mining Techniques: Explore sophisticated text mining methods like:

•Word embeddings with word2vec or GloVe (GloVe is available in R via text2vec)

•Text classification with RTextTools or caret

•Network analysis with igraph or tidygraph

#rstats #AdvancedR #datascience
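
For the network angle, here is a minimal igraph sketch that treats word co-occurrences as weighted edges. The word pairs and counts are hypothetical, not derived from real data:

```r
library(igraph)

# Hypothetical co-occurrence counts between word pairs.
edges <- data.frame(
  from   = c("web", "web", "text", "data"),
  to     = c("scraping", "data", "mining", "mining"),
  weight = c(5, 3, 4, 2)
)

# Build an undirected graph; extra columns such as `weight` become edge attributes.
g <- graph_from_data_frame(edges, directed = FALSE)

# Degree = number of distinct co-occurrence links per word.
deg <- sort(degree(g), decreasing = TRUE)
print(deg)
```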

7/ 📊 Visualizing Text Data: Create insightful visualizations with:

•ggplot2 for word frequency plots and bar charts

•wordcloud or wordcloud2 for visually appealing word clouds

•ggraph for network visualizations

#rstats #AdvancedR #datascience
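
A word-frequency bar chart in ggplot2 could be sketched as follows, assuming a small table of counts (the `freq` data frame here is made up):

```r
library(ggplot2)

# Made-up word counts; in practice these would come from your token table.
freq <- data.frame(
  word = c("web", "text", "data"),
  n    = c(12, 9, 7)
)

p <- ggplot(freq, aes(x = reorder(word, n), y = n)) +
  geom_col() +                       # one bar per word
  coord_flip() +                     # horizontal bars are easier to read
  labs(x = NULL, y = "Frequency", title = "Most frequent words")

print(p)
```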

8/ 🚀 Case Studies: Apply web scraping and text mining techniques to real-world problems, such as:

•Social media sentiment analysis

•Web content summarization

•Trend and keyword analysis

•Recommender systems

#rstats #AdvancedR #datascience

9/ 📚 Resources: Learn more about advanced web scraping and text mining in R with these books:

•"R Web Scraping Quick Start Guide" by Olgun Aydin

•"Text Mining with R" by Julia Silge and David Robinson

#rstats #AdvancedR #datascience

10/ 🎉 In conclusion, mastering advanced web scraping and text mining techniques in R can help you unlock valuable insights from online data. Keep exploring these methods to elevate your R skills and data analysis capabilities! #rstats #AdvancedR #TextMining #DataScience
