Why the #EUAIAct might be bad for #AI #Research in the #EU and why we should care. A long 🧵 1/15

Disclaimer:

*I’m not a legal expert, but I work with #AI models

*I’m happy to discuss if you have some insights that I have missed

*I am part of #LAION

*All opinions are my own

https://twitter.com/djleufer/status/1668954719744655360

Here is the draft: 2/15

europarl.europa.eu/meetdocs/2014_…

Problem: The #EUAIAct regulates providers of foundation models (or general-purpose AI systems), defined as models

“... trained on broad data at scale, is designed for generality of output, and can be adapted to a wide range of distinctive tasks” t.ly/D-ibR

3/15

To my understanding, a provider is anyone who puts such a model on a website, on GitHub, or anywhere else out there.

🚨 Big problem 🚨 Every model can be a foundation model!

4/15

We, as researchers, publish models all the time. For each paper, we try to provide code and as many models as possible to allow other people to reproduce our results and improve upon them.

With this definition, almost every model we provide becomes a foundation model bc ...

5/15

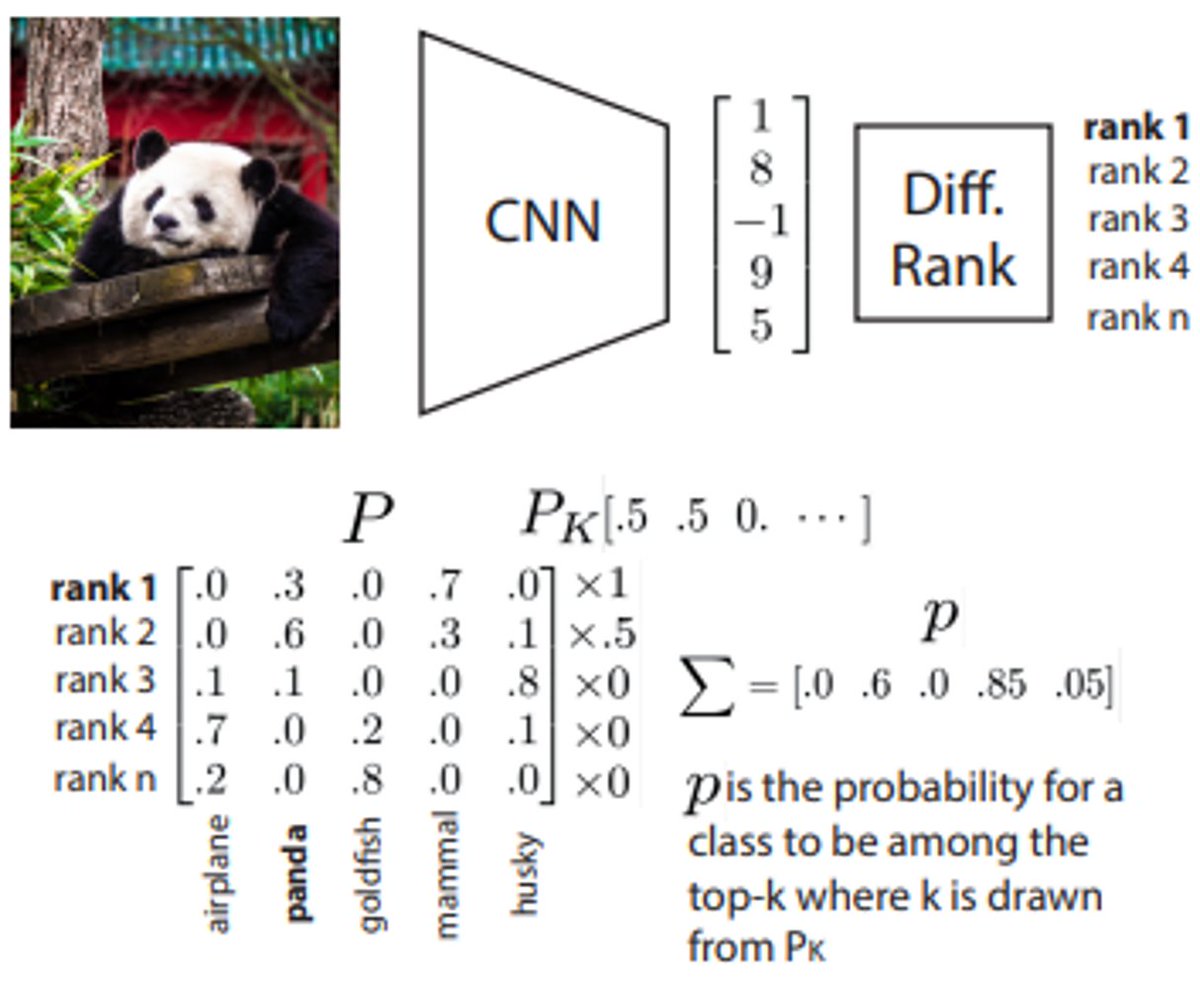

1) Most models nowadays are tested on multiple downstream tasks.

2) There is no specification of what "data at scale" means. Is it 1M, 100M, or 1B images? Does it matter whether I train with labels or without?

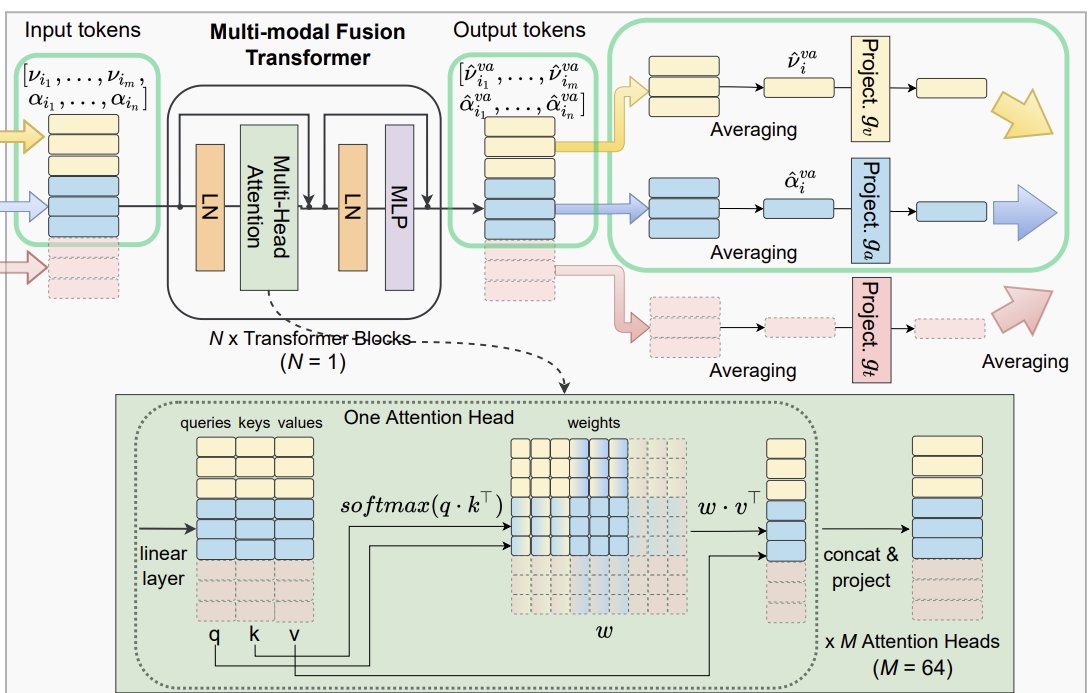

3) We recently found that ImageNet (INet) pretraining helps audio classification. Does this turn the backbone into a foundation model (FM)?

6/15

Most importantly: by making our models easily available, we give up control over them. So even if we release something that only works on one task, we cannot control whether someone adapts it for something new.

This is not a flaw; it is what allows our field to grow and flourish.

7/15

It also helps ensure safety, as others can use those models to check for errors and biases or to understand how the models learned what they did. This is a vital part of research; we cannot do without it. But it also means that I would need to treat every model as an FM.

8/15

Public universities and government-funded research cannot provide the infrastructure currently needed to fulfill the obligations that would come with this definition (and it is doubtful that governments would want to, or have the money to, pay for it).

9/15

Even for companies, open-sourcing models might become unreasonable bc of the legal risk and the loss of control described above.

As a result, the EU AI Act might effectively put a big lid on any open-source models published in Europe.

10/15

This would be super bad, bc

1) #researchers (@timnitGebru, @mmitchell_ai, @TaliaRinger) need accessible models to investigate them, find out where they fail, and publish those findings. This is not optional; it is vital to guarantee and improve the safety of those models.

11/15

2) Open-source models might become safer than industry-internal models bc they have been publicly tested, so we might want startups etc. to use those models rather than some closed-source alternative.

12/15

3) In the future, many companies will no longer publish their models, for obvious reasons. So access will be limited... and open-source, government-funded research might become a major source of such models.

13/15

I’m not a legal expert, but this might be a case of good intentions with unexpected side effects that, in the long term, could make the whole situation worse and actually decrease safety in AI. Germans call that #Verschlimmbesserung (roughly: an "improvement" that makes things worse; we have a word for everything).

14/15

Thanks for staying with me for so long! Happy to discuss if I got something wrong here.

15/15
