Justice Thomas jumped into the #Section230 debate to embrace GOP arguments for narrowing protections for content moderation. He might think differently in a case where the issues he raised were actually briefed by both sides—unlike this very narrow case

techfreedom.org/justice-thomas…

Thomas often issues such statements when SCOTUS decides not to take a case—to vent his frustrations about the state of the law

But this is the first time SCOTUS has ever considered taking a case involving #Section230. The briefs here did not even address the issues Thomas raises

Justice Thomas is free to call for fuller briefing on Section 230’s meaning in, as he says, “an appropriate case,” but this is not that case. Justice Thomas had no need to express his own views, in extensive dicta, without the benefit of the briefing he acknowledges is needed.

The Malwarebytes case was about content filtering tools. The Ninth Circuit imported a "good faith" requirement into #Section230 (c)(2)(B), which protects those who offer content filtering tools to others.

Ironically, Thomas objected to courts’ ‘rel[ying] on purpose and policy’ when interpreting Section 230, yet that is precisely what the Ninth Circuit did; it’s why the Supreme Court should have taken this case. The 9th Circuit read into the statute words that are not there.

It makes sense to require sites to prove ‘good faith’ when claiming (c)(2)(A)’s immunity for their content moderation decisions

It makes no sense when a filtering tool developer claims (c)(2)(B)’s immunity for providing its tool to others to make their own decisions

Letting the Ninth Circuit’s decision stand invites litigation against the makers of anti-malware software, parental controls and other tools that empower users to filter content online. That liability will cause many small developers to exit the market even before they are sued.

The Malwarebytes case involved only the interplay between (c)(2)(A) & (c)(2)(B), not the interplay between (c)(2)(A) & (c)(1) or the meaning of (c)(1)

Thomas argues that (c)(1) protects only decisions to leave content up and only (c)(2)(A) protects decisions to take content down

Justice Thomas’s opinion tracks political talking points advanced by the White House about #Section230. Those theories are framed in textualist terms, but they quickly break down upon close examination—as Thomas himself might ultimately agree if he waited to hear from both sides

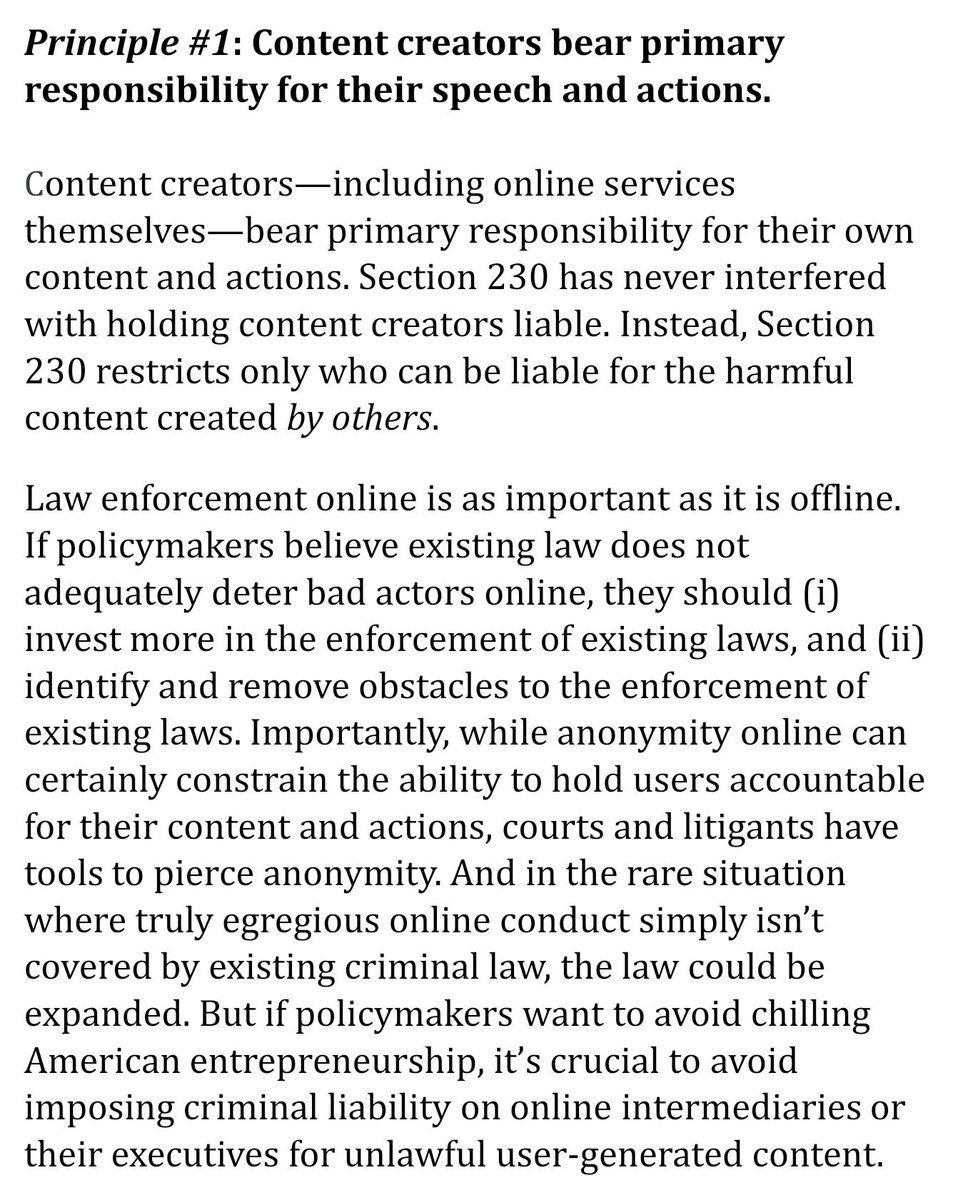

Across the board, #Section230 protects the same thing the First Amendment does: editorial discretion. That’s why Congress said website operators cannot be held liable ‘as publishers’ for content they in no way created.

Our FCC comments explain why: techfreedom.org/ntias-fcc-peti…

Refusing to carry content one finds objectionable is a core function of any publisher. The courts have interpreted the statute correctly — as a way to short-circuit expensive litigation and thus avoid what one appeals court called ‘death by ten thousand duck-bites.’

Instead of letting websites resolve lawsuits over content moderation with a motion to dismiss, Thomas would force them to litigate through discovery, which can account for up to 90% of litigation costs

That, in turn, will only discourage content moderation

But #Section230’s central purpose was to avoid the Moderator’s Dilemma: Congress wanted to ensure that websites weren’t discouraged from trying to clean up harmful or illegal content

If, as Thomas argues, 230(c)(1) doesn’t protect websites from being held liable as distributors for content they knew, or should have known, was illegal, this liability will create a perverse incentive not to monitor user content—another version of the Moderator’s Dilemma

Holding sites liable for content they edit in any way, as Thomas proposes, could, conversely, discourage them from attempting to make hard calls, such as blotting out objectionable words (e.g., racial slurs) while leaving other content up

They may simply take down content entirely

So, in summary...

Thomas's opinion has no legal weight and isn't based on the kind of briefing the Court relies on in actual decisions

He should have limited himself to urging plaintiffs to bring more #Section230 cases so these issues could be fully briefed

We explained why the Court should have taken, and reversed, the Ninth Circuit's decision in Malwarebytes when we filed our amicus brief back in June:

techfreedom.org/scotus-should-…

For a fuller explanation of these issues, read our comments on the NTIA petition asking the FCC to rewrite #Section230: techfreedom.org/ntias-fcc-peti…

And our reply comments: techfreedom.org/ntias-supporte…

Whoops: obviously we meant ANTI-malware software here
