New WP for your doomscroll:

➤We followed 842 Twitter users with a Dem or Rep bot

➤We find a large causal effect of shared partisanship on tie formation: users were ~3x more likely to follow back a co-partisan

psyarxiv.com/ykh5t/

Led by @_mohsen_m w/ @Cameron_Martel_ @deaneckles

1/

We are more likely to be friends with co-partisans offline & online

But this doesn't show a *causal* effect of shared partisanship on tie formation

* Party is correlated w many factors that influence tie formation

* Could just be preferential exposure (e.g. via a friend-rec algorithm)

2/

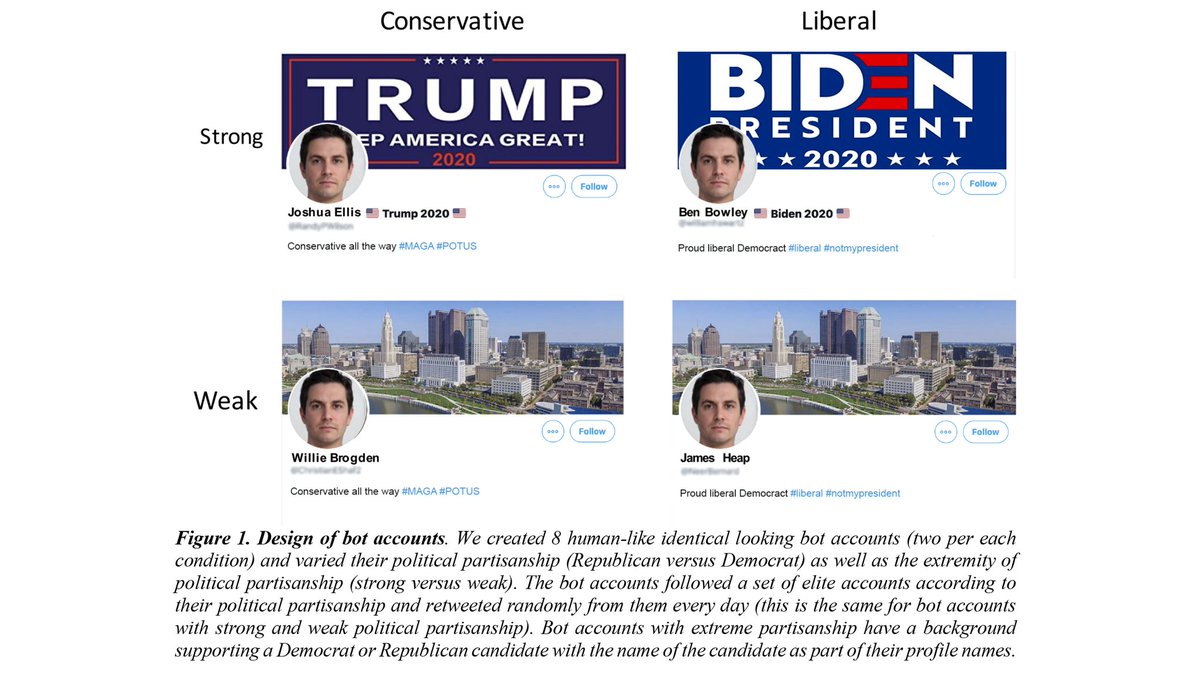

So we tested the causal effect using a Twitter field exp

Created bot accounts that strongly or weakly identified as Dem or Rep supporters

Randomly assigned 842 users to be followed by one of our accounts, and examined the prob that they reciprocated and followed our account back

3/

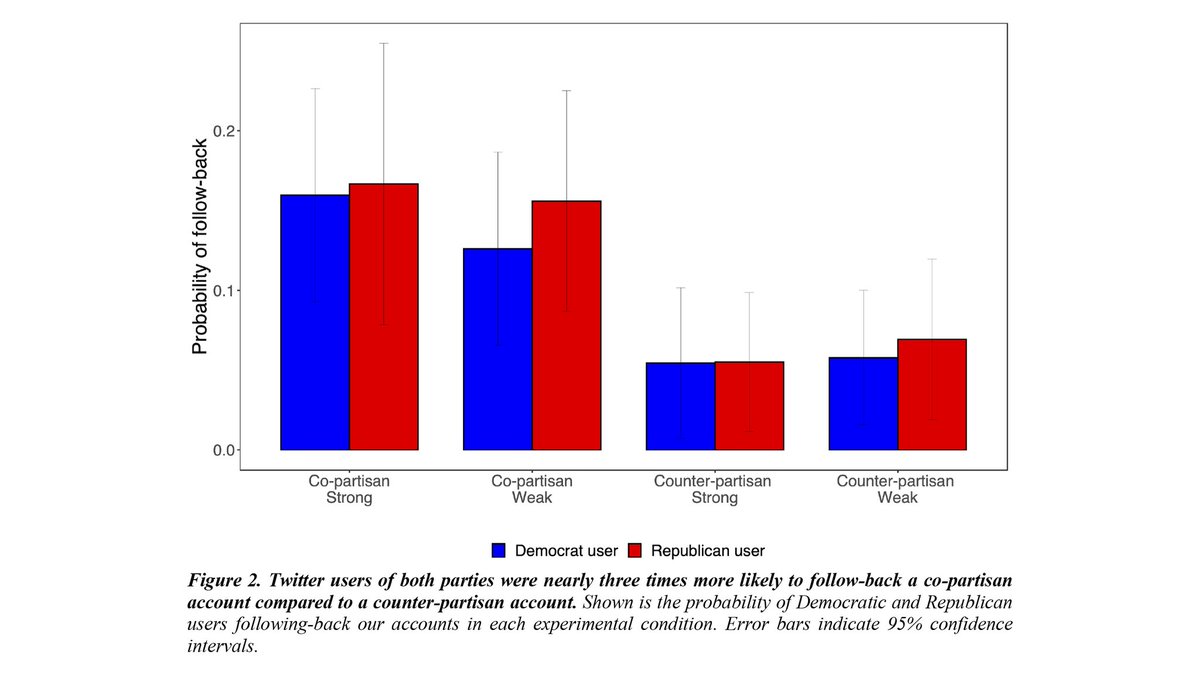
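A minimal sketch of the assignment step described above, assuming a 2×2 design (bot party Dem/Rep × strong/weak partisan identity) and balanced complete randomization. The condition labels, `assign_conditions`, and the seed are hypothetical illustrations, not the study's actual code or protocol.

```python
import random
from itertools import product

# Hypothetical 2x2 condition grid: bot party x strength of partisan identity
BOT_CONDITIONS = list(product(["Dem", "Rep"], ["strong", "weak"]))

def assign_conditions(user_ids, seed=0):
    """Randomly assign each user to one bot condition, keeping arms balanced.

    Balanced complete randomization is an assumption for illustration;
    the study's actual procedure may differ.
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    # Deal shuffled users round-robin so arm sizes differ by at most one
    return {uid: BOT_CONDITIONS[i % len(BOT_CONDITIONS)]
            for i, uid in enumerate(shuffled)}

assignment = assign_conditions(range(842))
```

With 842 users, round-robin dealing gives two arms of 211 and two of 210; the outcome for each user is then simply whether they followed the assigned bot back.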

RESULTS!

➤Users were ~3x more likely to follow back bots whose partisanship matched their own

➤Strength of bot partisanship didn't matter much

➤Dems & Reps showed an equivalent level of tie formation bias (no partisan asymmetry)

4/
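A sketch of the arithmetic behind "~3x": the follow-back rate among co-partisan user/bot pairs divided by the rate among cross-partisan pairs. The record format and `follow_back_rate_ratio` are hypothetical, and this is a raw ratio of proportions; the paper's estimate may come from a model with additional terms.

```python
def follow_back_rate_ratio(records):
    """Return P(follow-back | co-partisan) / P(follow-back | cross-partisan).

    records: iterable of (user_party, bot_party, followed_back) tuples,
    e.g. ("Dem", "Rep", False). Hypothetical format for illustration.
    """
    # Tally [follow-backs, users followed] separately for co- and cross-partisan pairs
    tallies = {True: [0, 0], False: [0, 0]}
    for user_party, bot_party, followed_back in records:
        tally = tallies[user_party == bot_party]
        tally[0] += int(followed_back)
        tally[1] += 1
    co_hits, co_n = tallies[True]
    cross_hits, cross_n = tallies[False]
    if co_n == 0 or cross_n == 0 or cross_hits == 0:
        raise ValueError("need co- and cross-partisan pairs, "
                         "with at least one cross-partisan follow-back")
    return (co_hits / co_n) / (cross_hits / cross_n)
```

For toy data where 3 of 10 co-partisan users and 1 of 10 cross-partisan users follow back, this returns a ratio of about 3.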

This shows a strong causal effect of shared partisanship on actual social tie formation

➤Ecologically valid support for prior results from affective polarization survey exps

➤Suggests partisan psych drives homophily, such that algorithmic help is needed to increase cross-party connection

5/

(Although, of course, it's not clear whether increasing cross-party connection is actually beneficial: @chris_bail et al suggest maybe not pnas.org/content/115/37…, while @RoeeLevyZ suggests maybe yes papers.ssrn.com/sol3/papers.cf…)

6/

What I find striking about these results is not so much that the effect exists per se, but rather how big it is

Also, nice how social media field exps can combine causal inference with ecological validity. V excited to do more in this space, under the lead of @_mohsen_m

These results are another stark reminder (as if we needed more right now) of the political sectarianism that is gripping America, as described in the @EliJFinkel-led Science paper out this week

science.sciencemag.org/content/370/65…

Happy doomscrolling everyone

(& of course, comments appreciated!)
