Excellent paper from Google discussing the robustness of Deep Learning models when deployed in real domains. arxiv.org/abs/2011.03395

The issue is described as 'underspecification'. The analogy they make is linear equations with more unknowns than the number of equations. The excess freedom leads to differing behavior across networks trained on the same dataset.
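
To make the linear-equations analogy concrete, here is a minimal sketch of my own (not from the paper). The numbers and the names `X_train`, `w_a`, `w_b`, and `x_new` are made up for illustration. Two weight vectors fit the same two "training" equations exactly, yet disagree on an input the training data never constrained.

```python
import numpy as np

# Two equations, three unknowns: the "training data" cannot pin down w.
# All values here are illustrative assumptions.
X_train = np.array([[1.0, 0.0, 1.0],
                    [0.0, 1.0, 1.0]])
y_train = np.array([1.0, 2.0])

# Solution A: the minimum-norm solution from the pseudoinverse.
w_a = np.linalg.pinv(X_train) @ y_train

# Solution B: add a direction the training data cannot see.
# null_dir satisfies X_train @ null_dir == 0, so the fit is unchanged.
null_dir = np.array([1.0, 1.0, -1.0])
w_b = w_a + 2.0 * null_dir

# Both solutions reproduce the training targets.
train_err_a = np.abs(X_train @ w_a - y_train).max()
train_err_b = np.abs(X_train @ w_b - y_train).max()

# A new input that lies outside the span of the training rows.
x_new = np.array([1.0, 1.0, -1.0])
pred_a = x_new @ w_a
pred_b = x_new @ w_b

print("training error A, B:", train_err_a, train_err_b)
print("predictions on x_new:", pred_a, pred_b)
```

Both training errors are zero up to rounding, but the two predictions on `x_new` differ, which is the excess freedom the analogy points at.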

This is one of the rare papers that has practical significance to the production deployment of deep learning. I've alluded to this problem previously with respect to physical simulations. medium.com/intuitionmachi…

I wrote, "The main argument against DL models is that they don’t represent any physics, although they seem to generate simulations that do look realistically like physics."

Conventional computational models are constrained to reflect the actual physics. Proper DL methods therefore also have to be constrained similarly in their dynamics.

Although we have seen some impressive progress in this area, it's difficult to do well in domains like NLP and EHR (electronic health records).

https://twitter.com/IntuitMachine/status/1316506946896359433

This is because our models of these domains are also underspecified. We do not know the constraints that should restrict our models. So for edge cases that are absent from our training set, we are unaware of the models' emergent behavior.

This problem, however, is not unique to DL models. It also exists in conventional computational models. That is why ensembles of models are routinely used to predict weather patterns. The Church-Turing hypothesis is simply unavoidable for complex systems.
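
A rough sketch of why an ensemble helps, under toy assumptions of my own: each ensemble member fits the same underdetermined linear system but lands on a different solution, standing in for different random seeds. The names `members` and `ensemble_spread` are hypothetical. The spread of predictions is near zero on an input the data constrains and large on one it does not.

```python
import numpy as np

rng = np.random.default_rng(0)

# Same toy setup for every member: two equations, three unknowns.
X_train = np.array([[1.0, 0.0, 1.0],
                    [0.0, 1.0, 1.0]])
y_train = np.array([1.0, 2.0])

w_min = np.linalg.pinv(X_train) @ y_train
null_dir = np.array([1.0, 1.0, -1.0])  # X_train @ null_dir == 0

# Each member fits the training data exactly but picks up a different
# unconstrained component (a stand-in for seed-to-seed variation).
members = [w_min + rng.normal() * null_dir for _ in range(20)]


def ensemble_spread(x):
    """Standard deviation of the members' predictions on input x."""
    preds = np.array([x @ w for w in members])
    return preds.std()


spread_seen = ensemble_spread(X_train[0])                 # constrained by data
spread_edge = ensemble_spread(np.array([1.0, 1.0, -1.0]))  # edge case

print("spread on a training input:", spread_seen)
print("spread on an edge case:", spread_edge)
```

Disagreement among members does not fix the underspecification, but it does flag the inputs where the training data left the answer open.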

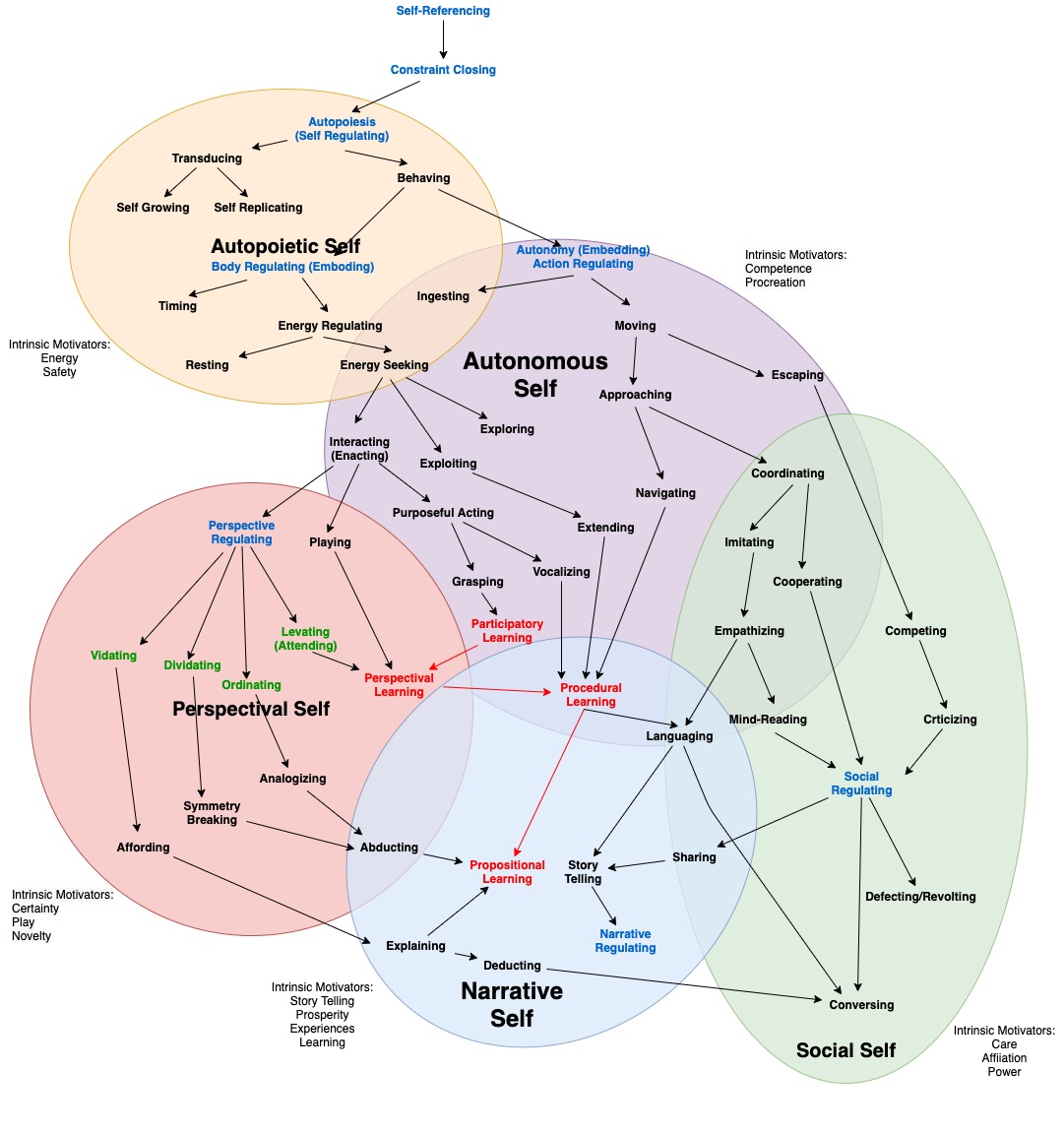

The path forward for robust AI will always depend on good explanatory models that capture the relevant causality of the system being predicted. Advanced AI should not be driven naively by curve fitting, but rather by relevance realization.

That is, true intelligence is a multi-scale phenomenon and the issue of judgement is critical to its effective deployment.

Wrote this up here: medium.com/intuitionmachi…
