Tonight 12/10 9pm PT, Aviral Kumar will present Model Inversion Networks (MINs) at @NeurIPSConf. Offline model-based optimization (MBO) that uses data to optimize images, controllers and even protein sequences!

paper: tinyurl.com/mins-paper

pres: neurips.cc/virtual/2020/p…

more->

The problem setting: given samples (x,y) where x represents some input (e.g., protein sequence, image of a face, controller parameters) and y is some metric (e.g., how well x does at some task), find a new x* with the best y *without access to the true function*.

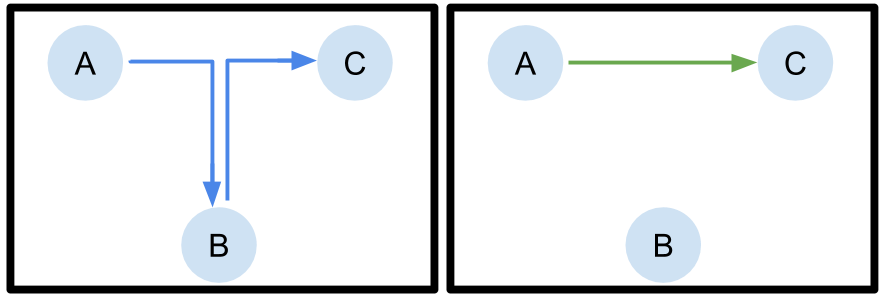

Classically, model-based optimization methods would learn some proxy (surrogate) model fhat(x) ≈ y, and then solve x* = argmax_x fhat(x). But when x is very high dimensional, this optimization tends to land on out-of-distribution (OOD) inputs, where fhat(x) is erroneously high and says little about the true y.
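
To make the OOD failure concrete, here is a toy sketch (not from the paper). Assumptions: a hypothetical quadratic `true_objective` that the optimizer never calls, a linear least-squares proxy standing in for "some learned model", and plain gradient ascent as the argmax. Nothing here is the MINs code.

```python
import numpy as np

def true_objective(x):
    """Hypothetical ground-truth f(x); only used to score results, never optimized directly."""
    return -np.sum((x - 0.5) ** 2, axis=-1)

rng = np.random.default_rng(0)
d, n = 20, 2000
X = rng.normal(size=(n, d))   # offline designs
Y = true_objective(X)         # their recorded scores

# Fit a linear proxy fhat(x) = w.x + b by least squares (stand-in for any learned model).
A = np.hstack([X, np.ones((n, 1))])
coef, *_ = np.linalg.lstsq(A, Y, rcond=None)
w, b = coef[:-1], coef[-1]

def proxy(x):
    return x @ w + b

# Naive x* = argmax fhat(x): gradient ascent starting from the best design in the data.
x_star = X[np.argmax(Y)].copy()
for _ in range(200):
    x_star += 0.1 * w         # the gradient of a linear proxy is w everywhere

print("best y in data:   ", Y.max())
print("proxy score at x*:", proxy(x_star))
print("true score at x*: ", true_objective(x_star))
```

On this toy, the proxy rates x* above every recorded design, while its true score is worse than every recorded design: the optimizer has walked far outside the data, where the proxy is unconstrained.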

This can be mitigated by active sampling (i.e., collecting more data), but this is often not possible in practice (e.g., it requires running costly experiments). It can also be mitigated with Bayesian models like Gaussian processes (GPs), but these are difficult to scale to high dimensions.

MINs address this issue with a simple approach: instead of learning f(x) = y, learn f^{-1}(y) = x -- here, the input y is very low dimensional (1D!), making it much easier to handle OOD inputs. This ends up working very well in practice.
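
A minimal sketch of the inversion idea on the same kind of toy problem (hypothetical `true_objective`, synthetic data). This is not the MINs implementation: since many x can share one y, a practical inverse model would need to be stochastic and nonlinear (a conditional generative model). The deterministic linear regression x ≈ g(y) used here is a simplification that happens to work on this symmetric objective.

```python
import numpy as np

def true_objective(x):
    """Hypothetical ground-truth f(x); only used to score the result."""
    return -np.sum((x - 0.5) ** 2, axis=-1)

rng = np.random.default_rng(0)
d, n = 20, 2000
X = rng.normal(size=(n, d))   # offline designs
Y = true_objective(X)         # their recorded scores

# Fit an inverse model g(y) = a*y + b, one slope and intercept per coordinate of x.
# The model's input is 1-D (the score); its output is a d-dimensional design.
B = np.stack([Y, np.ones(n)], axis=1)          # shape (n, 2)
coef, *_ = np.linalg.lstsq(B, X, rcond=None)   # shape (2, d)

def inverse_model(y):
    return np.array([y, 1.0]) @ coef

# Query the inverse model at the score we want: here, the best score seen in the data.
y_target = Y.max()
x_star = inverse_model(y_target)

print("best y in data:  ", Y.max())
print("true score at x*:", true_objective(x_star))
```

Because the inverse model is only queried at a y inside the observed range, it stays close to its training distribution. Pushing `y_target` far above `Y.max()` would reintroduce extrapolation, just in a 1-D input instead of a d-dimensional one.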

Why does this problem matter? In many cases we *already* have offline data (e.g., previously synthesized drugs and their efficacies, previously tested aircraft wings and their performance, prior microchips and their speed), so offline MBO uses this data to produce new designs.

In contrast, many alternative methods rely on actively sampling new data -- when we are talking about real-world data (e.g., biology experiments, aircraft designs, etc.), each new datapoint can be expensive and time-consuming to collect, while offline MBO can reuse the same data.
