My favorite part of @NeurIPSConf is the workshops, a chance to see new ideas and late-breaking work. Our lab will present a number of papers & talks at the workshops:

thread below ->

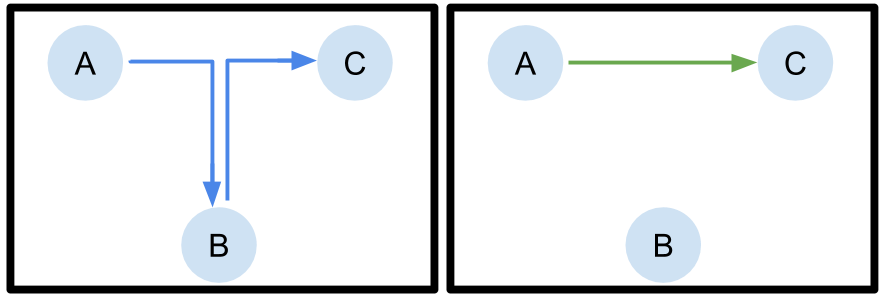

meanwhile here is a teaser image :)

At the robot learning workshop, @katie_kang_ will present the best-paper-winning (congrats!!) “Multi-Robot Deep Reinforcement Learning via Hierarchically Integrated Models”: how to share modules between multiple real robots. Recording here: (4:45pm PT, 12/11)

At the deep RL workshop, Ben Eysenbach will talk about how MaxEnt RL is provably robust to certain types of perturbations. Contributed talk at 2:00pm PT, 12/11.

Paper: drive.google.com/file/d/1fENhHp…

Talk: slideslive.com/38941344/maxen…

Ben will also present C-Learning: a new algorithm for goal-conditioned learning that combines RL with principled training of predictive models. Deep RL poster session, 12:30 pm PT.

Paper: arxiv.org/abs/2011.08909

Website: ben-eysenbach.github.io/c_learning/

Talk: slideslive.com/38941367/clear…

Also at the deep RL WS posters, Jensen Gao & @sidgreddy will present “XT2: Training an X-to-Text Typing Interface”: how deep RL can help users type via gaze and other interfaces, especially people with disabilities.

Paper: drive.google.com/file/d/12f2P2b…

Talk: slideslive.com/38941310/xt2-t…

Also at the deep RL WS poster session, Ashvin Nair will present AWAC: offline RL with online fine-tuning.

pres: slideslive.com/38941335/accel…

paper: arxiv.org/abs/2006.09359

blog: bair.berkeley.edu/blog/2020/09/1…

Also at the deep RL WS posters, @timrudner & @vitchyr will present “Outcome-Driven Reinforcement Learning,” describing how goal-conditioned RL can be derived in a principled way via variational inference.

Paper: timrudner.com/papers/Outcome…

Talk: slideslive.com/38941289/outco…

Also at deep RL WS, Aviral Kumar will present “Implicit Under-Parameterization” – our work on how TD learning can result in excessive aliasing due to rank collapse.

Paper: arxiv.org/abs/2010.14498

Video:

Also at deep RL WS, @snasiriany and co-authors will present “DisCo RL”: RL conditioned on distributions, which provides much more expressivity than conditioning on goals.

Paper: snasiriany.me/files/disco_rl…

Presentation: slideslive.com/38941375/distr…

Poster: snasiriany.me/files/disco_rl…

At the deep RL WS, @mmmbchang will present “Modularity in Reinforcement Learning: An Algorithmic Causality Perspective on Credit Assignment”: how causal models help us understand transfer in RL!

Poster: bit.ly/2LjSelT

Paper: bit.ly/2KanyTK

Vid: bit.ly/3gxeMLp

At the deep RL WS, and as a long oral presentation at the offline RL WS, @avisingh599 will present COG: how offline RL can chain skills and acquire a kind of “common sense.”

Vid:

Web: sites.google.com/view/cog-rl

Blog: bair.berkeley.edu/blog/2020/12/0…

Offline RL talk 12/12 9:50am

At robot learning WS (8:45am PT poster) and real-world RL WS (12/12 11:20am poster), @avisingh599 will present PARROT: pre-training models that explore for diverse robotic skills.

Arxiv: arxiv.org/abs/2011.10024

Video:

Website: sites.google.com/view/parrot-rl

At the meta-learning workshop, Marvin Zhang will present Adaptive Risk Minimization: how models can learn to adapt to distribution shift at test time via meta-learning.

Paper: arxiv.org/abs/2007.02931

Pres: slideslive.com/38941545/adapt…

Enjoy all the NeurIPS workshops!!

At the deep RL WS, Abhishek Gupta & @its_dibya will present GCSL (goal-conditioned supervised learning), a simple, principled method for using supervised learning for RL!

Room B, B5, 12:30-1:30pm & 6-7pm PT

Paper: arxiv.org/abs/1912.06088

Pres: slideslive.com/38941275/learn…

Blog: bair.berkeley.edu/blog/2020/10/1…

Also at deep RL WS, Abhishek Gupta & Kevin Lin will present BayCLR -- normalized maximum likelihood (NML) + meta-learning for setting goals!

Room D, C7, Deep RL Workshop, 12:30-1:30 and 6-7 PST

Paper: drive.google.com/file/d/1sd7nWn…

Slideslive: slideslive.com/38941398/reinf…

At the deep RL WS and robot learning WS, @_oleh & @chuning_zhu will present collocation-based planning for image-based model-based RL! By relaxing the dynamics constraints, the robot imagines the object "flying" to the goal before figuring out how to move it.

Video: slideslive.com/38943304/latco…

Paper: drive.google.com/file/d/1zG9NxH…

At ML4Molecules (ml4molecules.github.io), Justin Fu will present Offline Model-Based Optimization via Normalized Maximum Likelihood (NEMO), for optimizing designs from data w/ NML! 8:30am ML4Molecules poster session

Paper: drive.google.com/file/d/1u-SC7O…

Poster: drive.google.com/file/d/133R0Aa…

At the workshop on the challenges of real-world RL, B. Eysenbach will present DARC, domain adaptation for RL: how to train RL agents in one domain but have them pretend they are in another.

Pres (3:20pm PT, Sat 12/12): drive.google.com/file/d/1YwfWOv…

Paper: arxiv.org/abs/2006.13916

Blog: blog.ml.cmu.edu/2020/07/31/mai…