I respect Pedro's work, and I also really enjoyed his book. But man, I don't know where I should start with this one…

https://twitter.com/pmddomingos/status/1336187141366317056

Maybe I can start with “Facial feature discovery for ethnicity recognition” (published by @WileyInResearch in 2018):

https://twitter.com/hardmaru/status/1135377283940700160

2 years later

“Huawei tested AI software that could recognize Uighur minorities and alert police. The face-scanning system could trigger a ‘Uighur alarm,’ sparking concerns that the software could help fuel China’s crackdown”

The article cited the paper.

washingtonpost.com/technology/202…

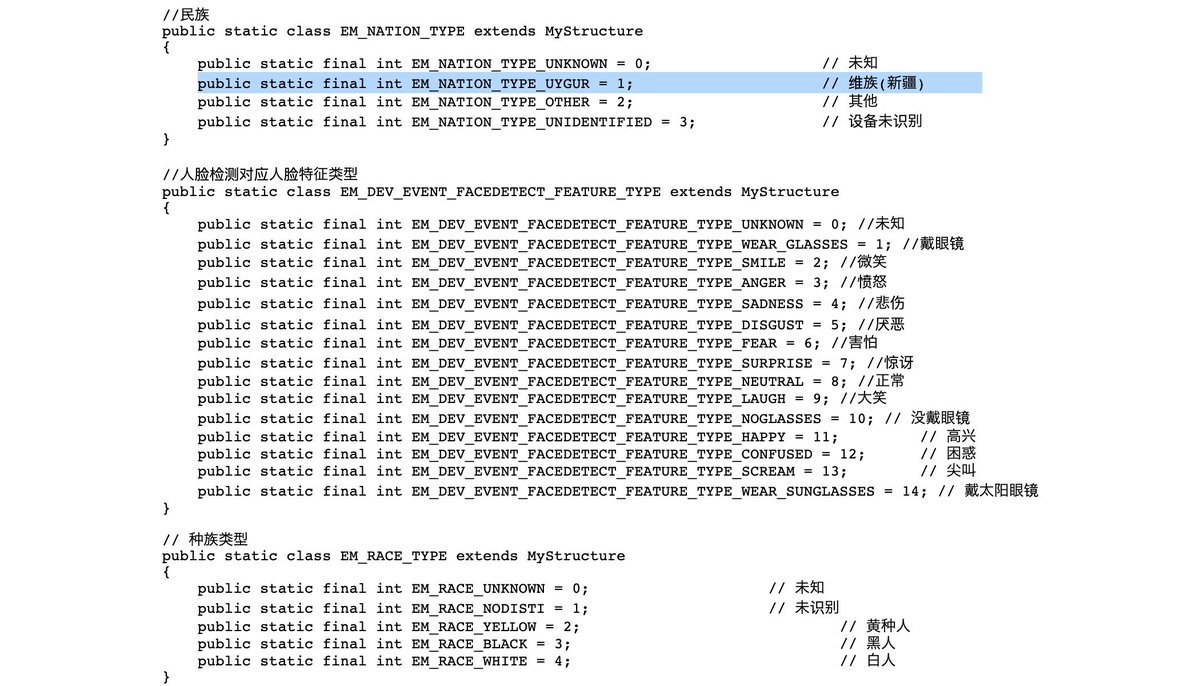

Uighur detection (and only Uighur detection) is literally now embedded into the surveillance camera API of companies such as Hikvision:

https://twitter.com/kcimc/status/1323178590922366976

Entire sub-industries in AI / Tech have emerged in authoritarian countries that are totally “unaware that automating racial recognition algorithms would even be controversial”

https://twitter.com/paulmozur/status/1336500430059175940

Rather than a longer Twitter thread, here's an article in @Nature worth reading about “the ethical questions that haunt facial-recognition research”. It discusses some of these issues in depth, with several front-line AI researchers offering different views.

nature.com/articles/d4158…

At the end of the day, the @NeurIPSConf Statement of Impact won’t solve our problems, and may even be weird for many papers, but I do believe it is a step in the right direction.

https://twitter.com/hardmaru/status/1230516096928641027

A final point:

I don't believe the goal of the NeurIPS Impact Statement is to censor papers, but rather to make researchers more aware of the ethical implications of their work. Hopefully when PhD students graduate, this thought process will remain in their work.

https://twitter.com/RaiaHadsell/status/1336380383424864256
