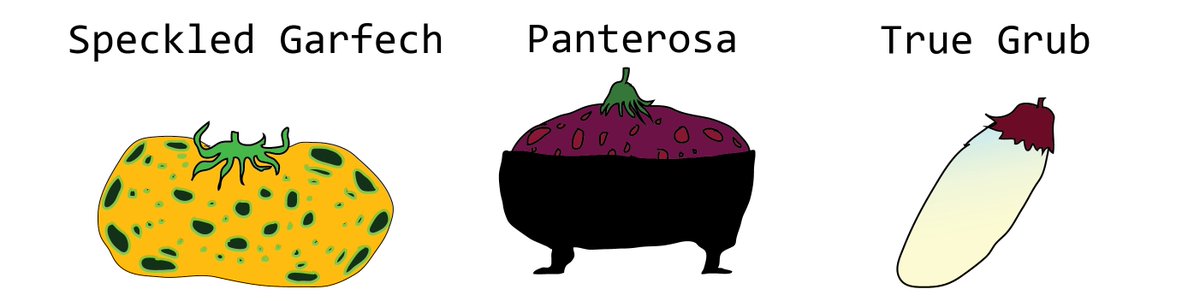

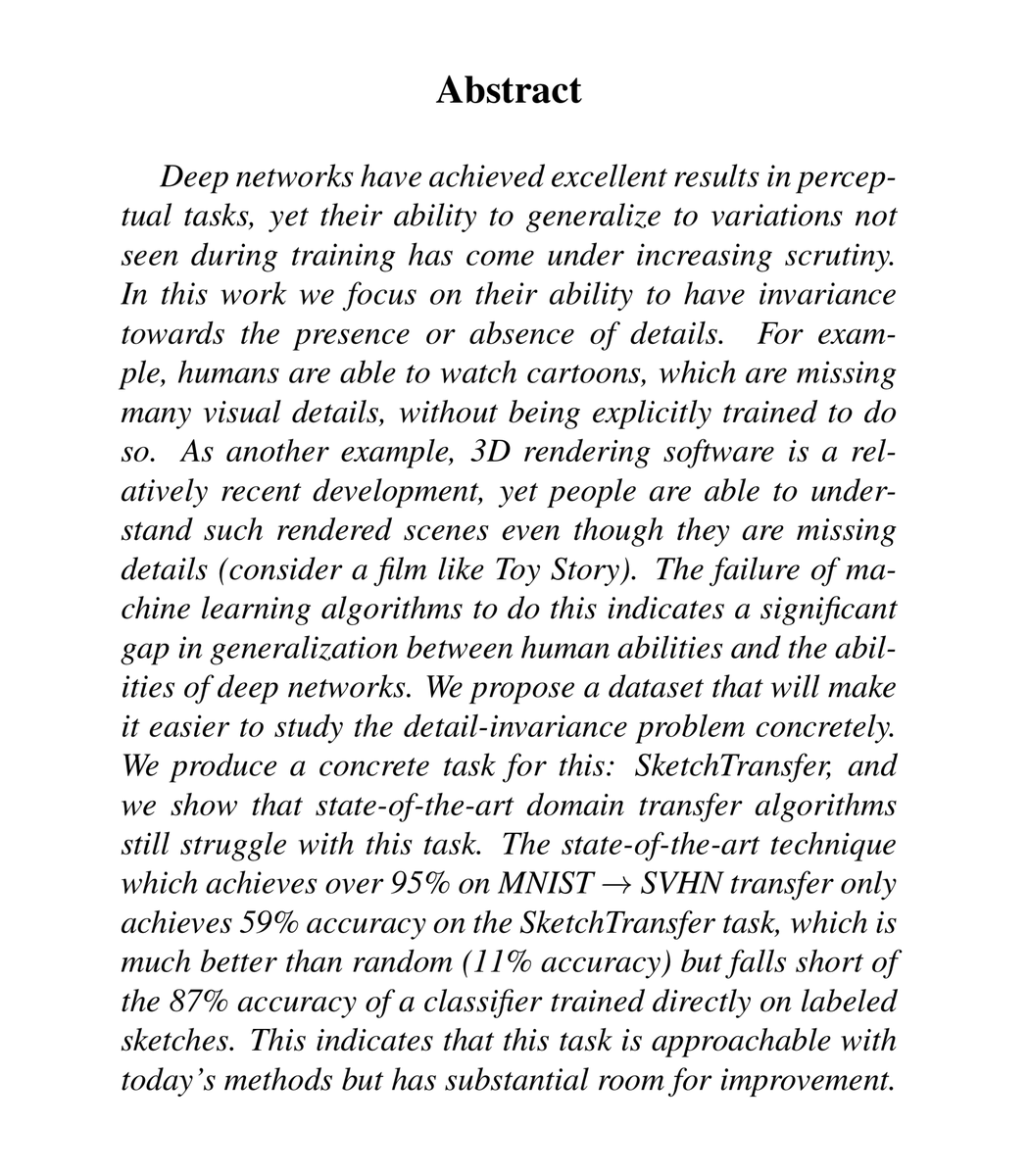

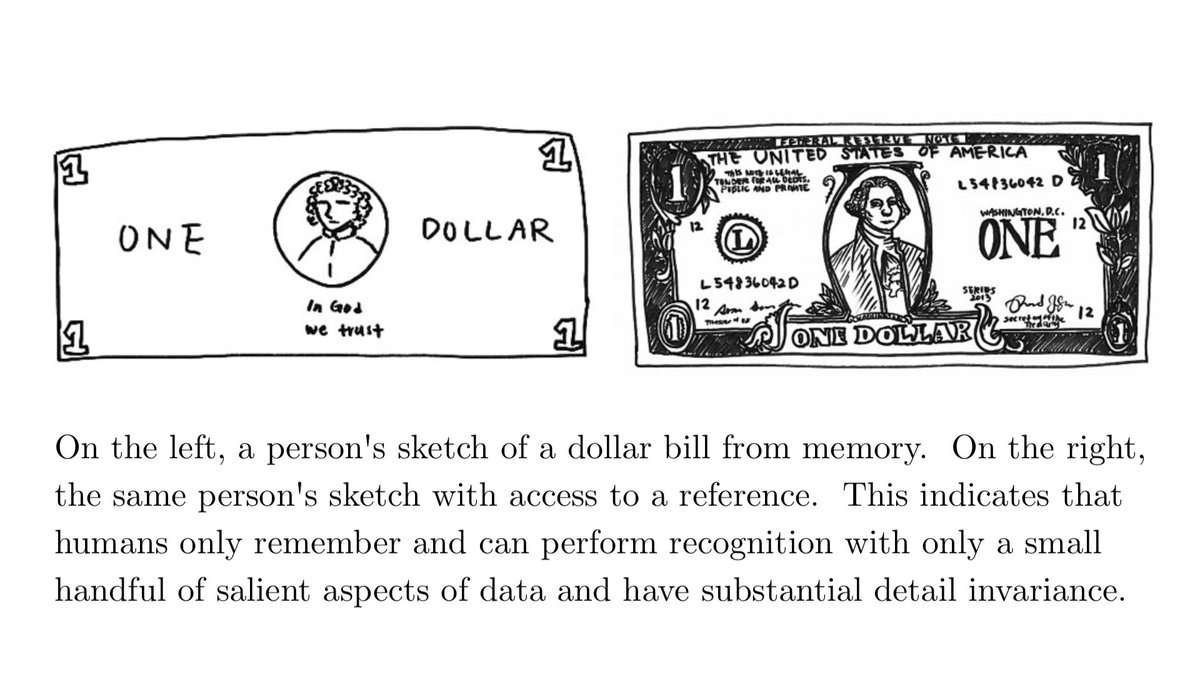

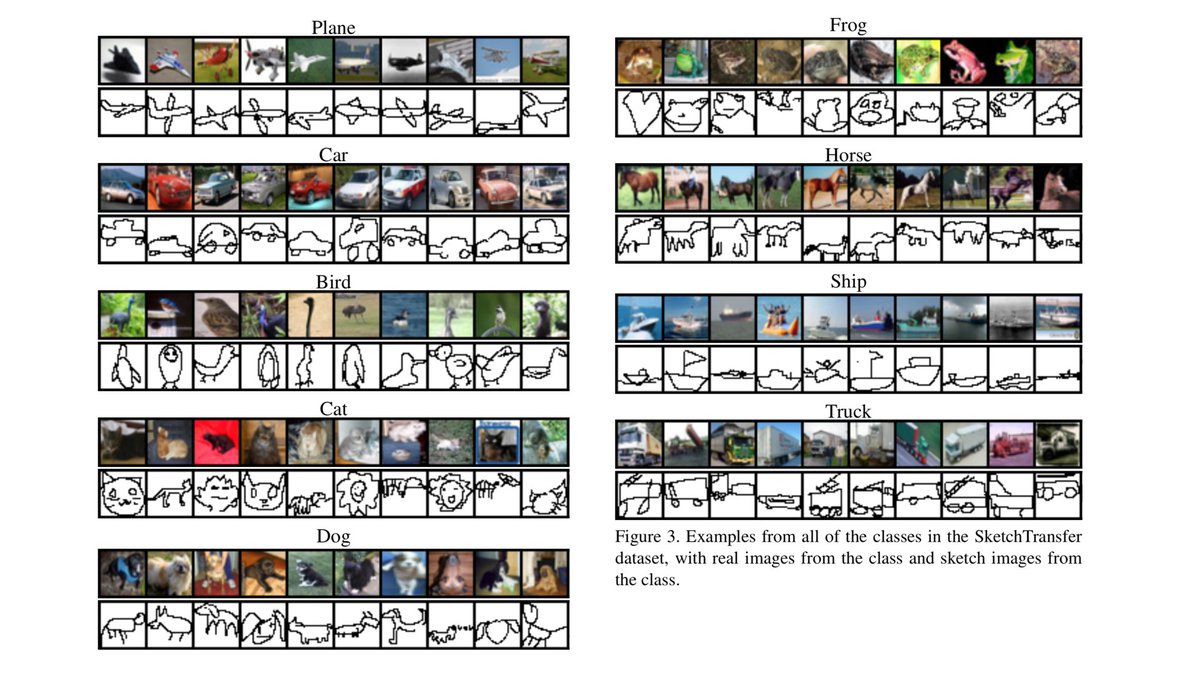

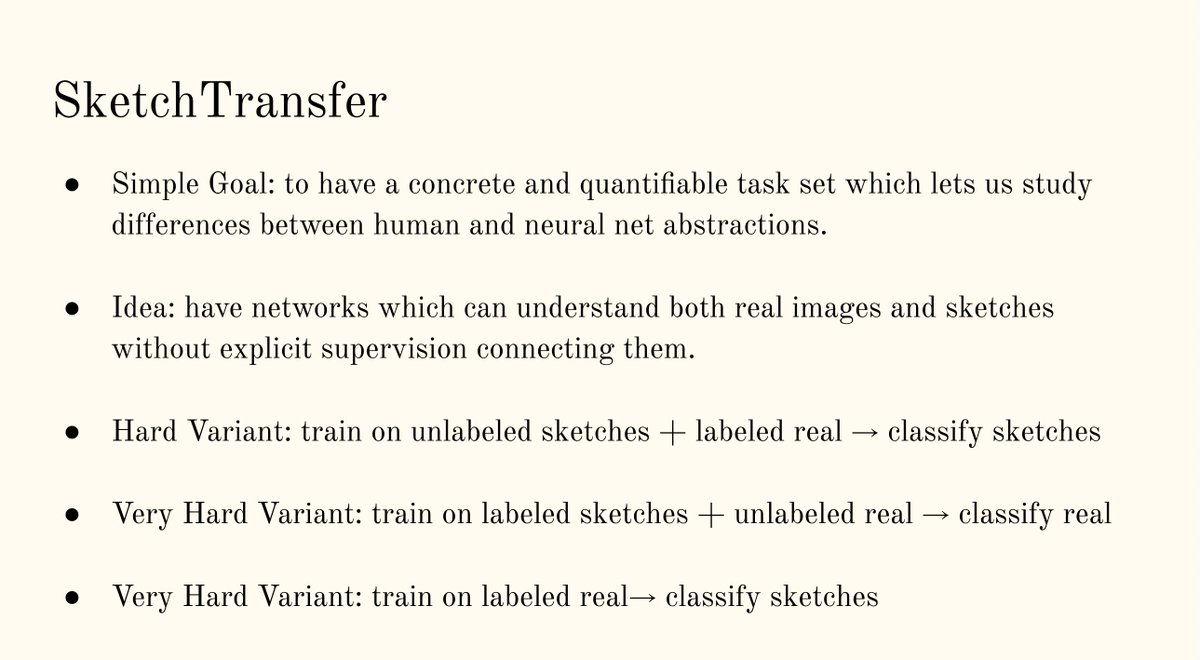

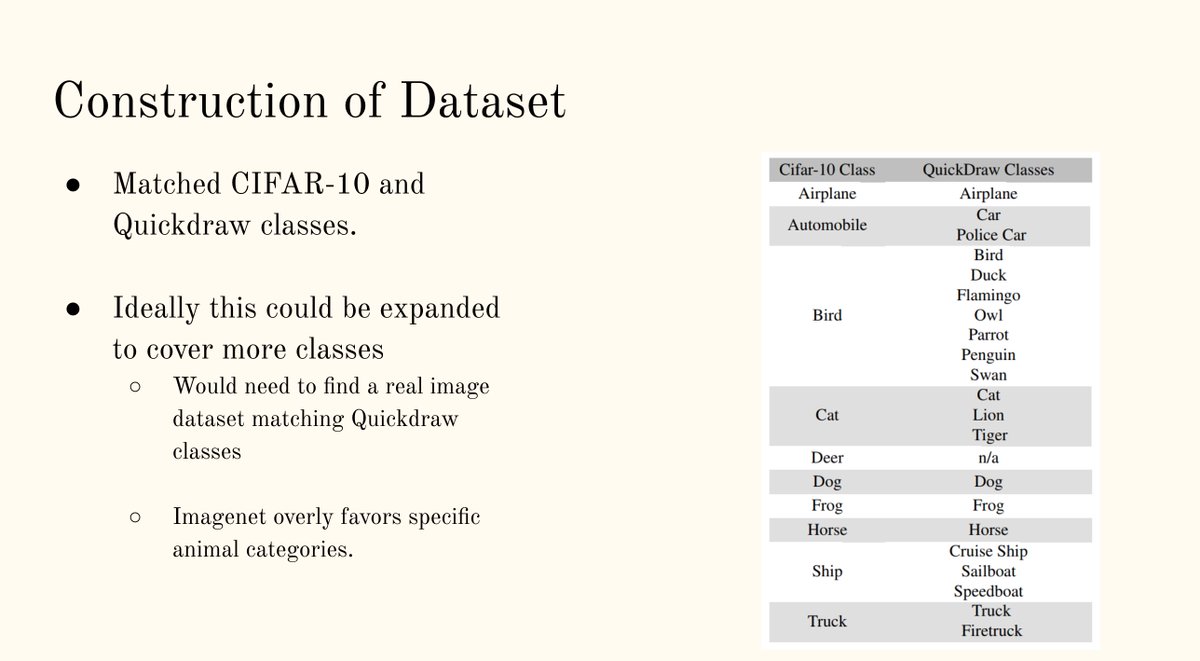

If we train a neural net to classify CIFAR10 photos but also give it unlabelled QuickDraw doodles, how well can it classify these doodles? arxiv.org/abs/1912.11570

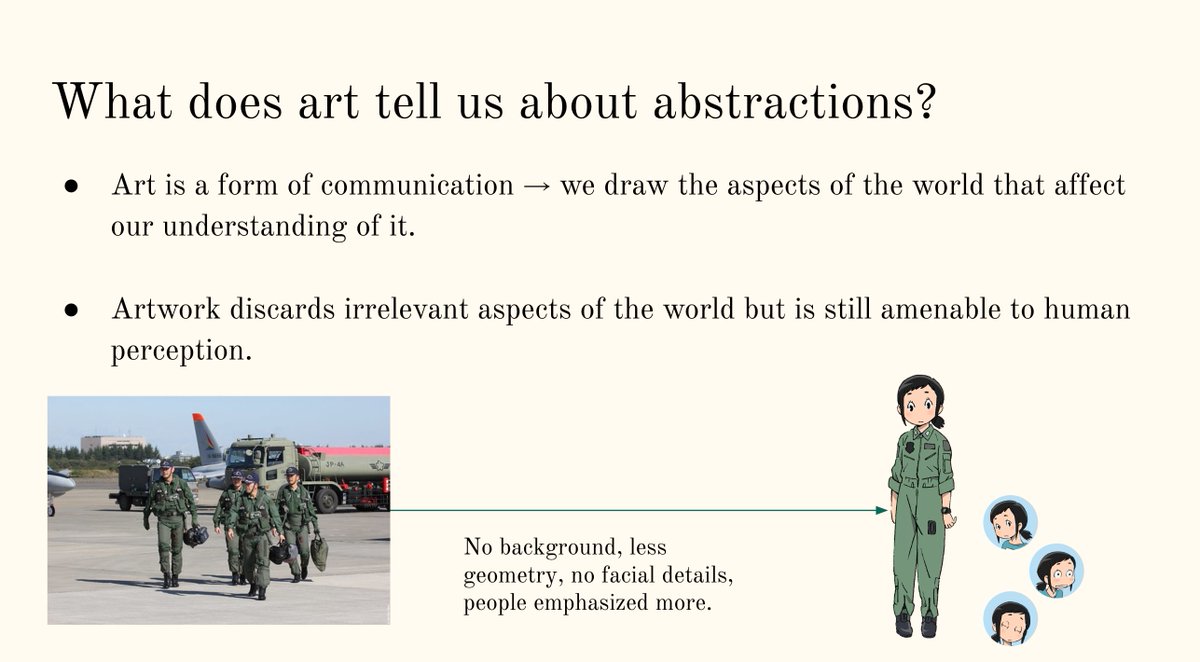

Our recent paper (Alex Lamb, @sherjilozair, Vikas Verma + me) is motivated by the abstractions learned by humans and machines.

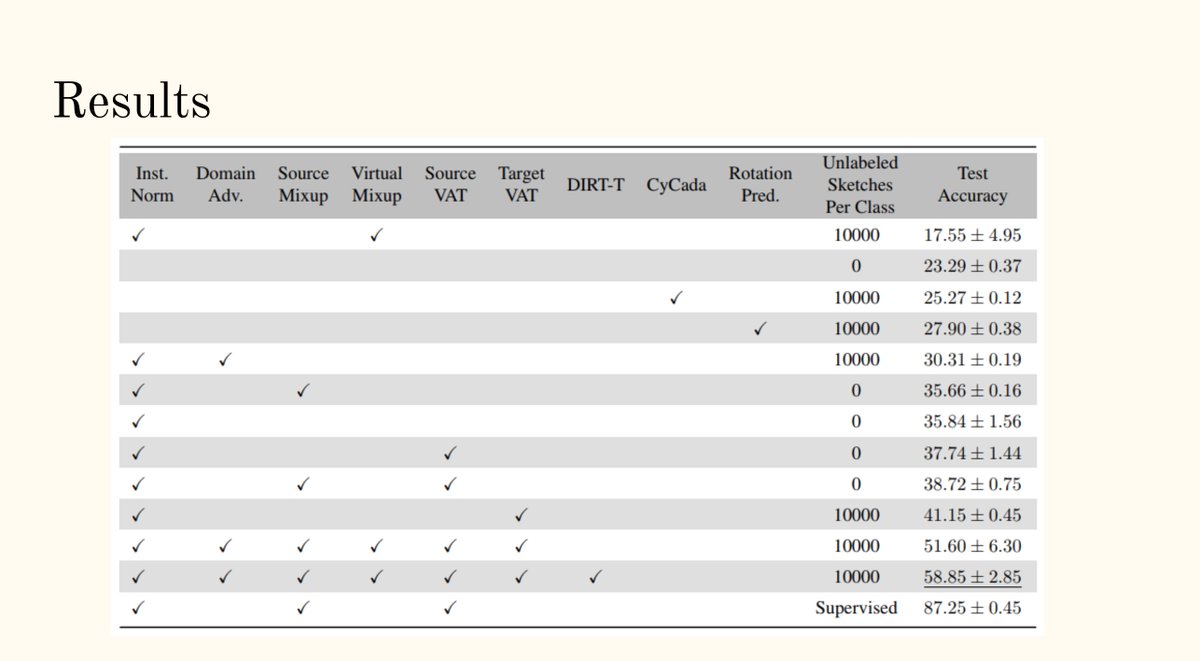

Alex trained SOTA domain transfer methods on labelled CIFAR10 data + unlabelled QuickDraw doodles & reported his findings:

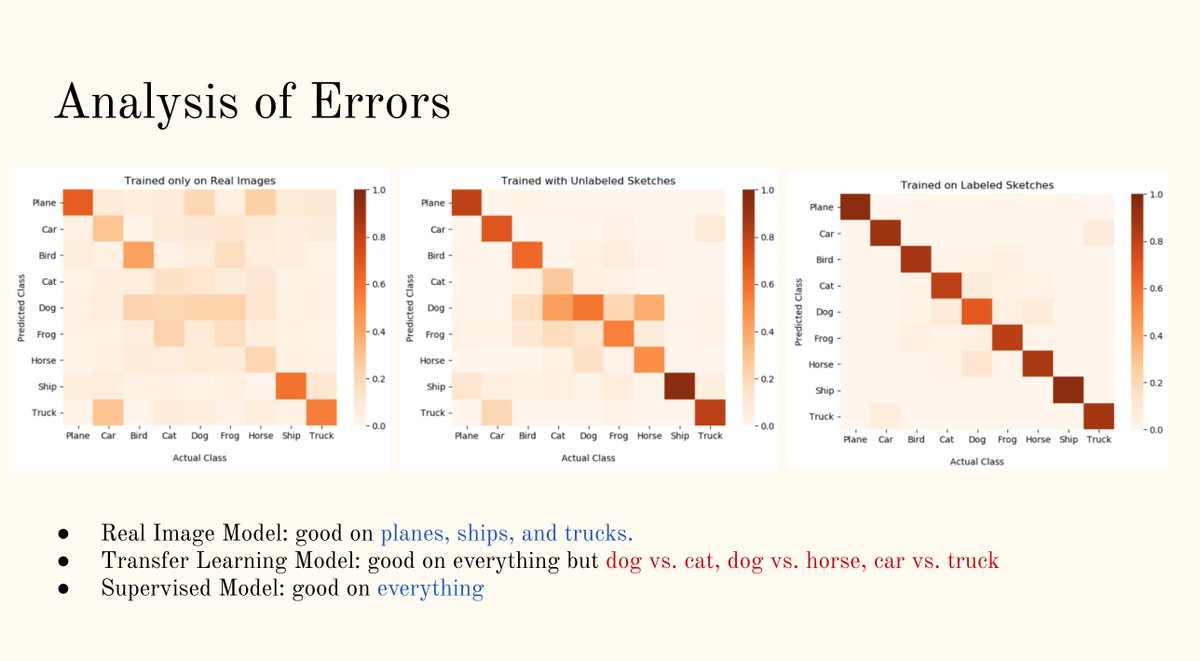

Surprisingly, a model trained only on CIFAR10 still does quite well on doodles of ships, planes & trucks!
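
One way to check a claim like this is to break accuracy on the doodles down by class. A minimal sketch, assuming you already have true and predicted labels for the QuickDraw images; the function name `per_class_accuracy` and the toy labels are made up for illustration and are not from the paper:

```python
from collections import defaultdict


def per_class_accuracy(true_labels, predicted_labels):
    """Return {class_name: accuracy}, computed over the examples of each true class."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, guess in zip(true_labels, predicted_labels):
        total[truth] += 1
        if truth == guess:
            correct[truth] += 1
    return {name: correct[name] / total[name] for name in total}


# Toy labels for illustration only, NOT results from the paper.
truth = ["ship", "ship", "plane", "cat", "cat", "truck"]
preds = ["ship", "ship", "plane", "dog", "cat", "truck"]
print(per_class_accuracy(truth, preds))
```

Reporting one number per class, rather than a single overall accuracy, is what makes it visible when a few classes (here ships, planes & trucks) transfer much better than the rest.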