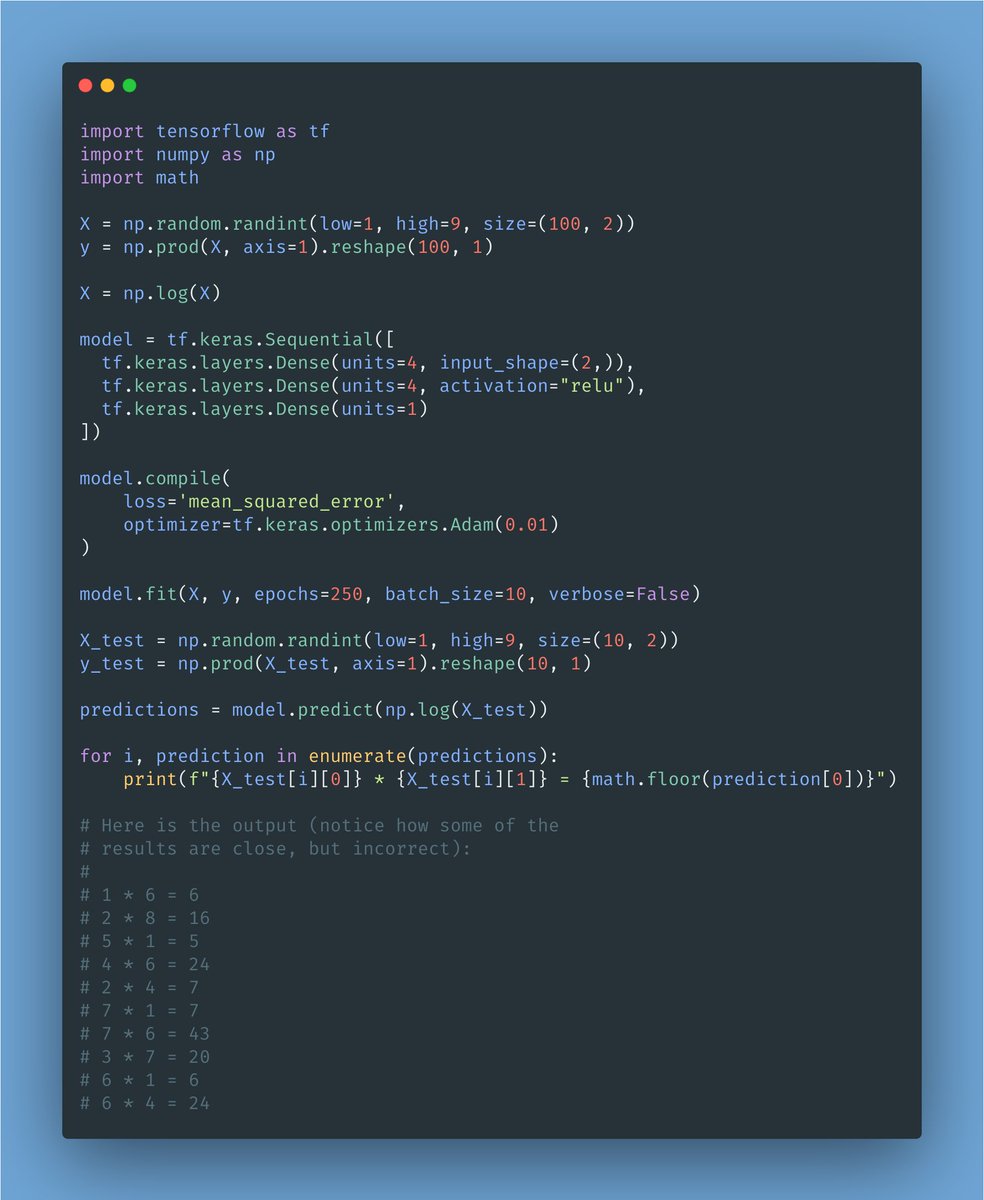

Here is a full Python 🐍 implementation of a neural network from scratch in less than 20 lines of code!

It shows how the network can learn 5 logic functions. (But it's powerful enough to learn much more.)

An excellent exercise in learning how feedforward and backpropagation work!

A quick rundown of the code:

▫️ X → input

▫️ layer → hidden layer

▫️ output → output layer

▫️ W1 → set of weights between X and layer

▫️ W2 → set of weights between layer and output

▫️ error → how far off our prediction is after every epoch

I'm using a sigmoid as the activation function. You will recognize it through this formula:

sigmoid(x) = 1 / (1 + exp(-x))

It would have been nicer to extract it as a separate function, but then the code wouldn't be as compact 😉
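For reference, here is a minimal sketch of what the extracted version could look like. It assumes NumPy; the function name `sigmoid` is my own choice for illustration and isn't necessarily what the code in the image uses.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid: squashes any real number into the open interval (0, 1)."""
    # The parentheses around the denominator matter: 1 / 1 + np.exp(-x)
    # would be evaluated as (1 / 1) + np.exp(-x).
    return 1 / (1 + np.exp(-x))

print(sigmoid(0))                           # 0.5
print(sigmoid(np.array([-2.0, 0.0, 2.0])))  # works element-wise on arrays too
```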

sigmoid(x) = 1 / 1 + exp(-x)

It would have been nicer to extract it as a separate function, but then the code wouldn't be as compact 😉

Within the loop, we first update the value of both layers. This is "forward propagation."

Then we compute the error.

Then we update the value of the weights (starting with the last set). This is "backpropagation."
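The three steps of the loop can be sketched like this. It's a rough reconstruction under my own assumptions, not a copy of the code in the image: the `train` function, the learning rate, the hidden layer size, the seed, and the constant-1 bias column appended to X are all illustrative choices. The names X, layer, output, W1, W2, and error follow the rundown above.

```python
import numpy as np

def train(X, y, epochs=5000, learning_rate=0.5, hidden=4, seed=0):
    """Train a 1-hidden-layer network; hyperparameters are illustrative assumptions."""
    rng = np.random.default_rng(seed)
    W1 = rng.uniform(-1, 1, size=(X.shape[1], hidden))  # weights between X and layer
    W2 = rng.uniform(-1, 1, size=(hidden, 1))           # weights between layer and output

    for epoch in range(epochs):
        # Forward propagation: update the value of both layers (inline sigmoid).
        layer = 1 / (1 + np.exp(-(X @ W1)))
        output = 1 / (1 + np.exp(-(layer @ W2)))

        # How far off the prediction is.
        error = y - output

        # Backpropagation: error signals, using sigmoid'(z) = s * (1 - s).
        output_delta = error * output * (1 - output)
        layer_delta = (output_delta @ W2.T) * layer * (1 - layer)

        # Update the weights, starting with the last set.
        W2 += learning_rate * layer.T @ output_delta
        W1 += learning_rate * X.T @ layer_delta

    return output

# Assumed inputs: every combination of two bits, plus a constant 1 acting as a bias.
X = np.array([[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]], dtype=float)
y_or = np.array([[0], [1], [1], [1]], dtype=float)  # targets for OR

print(np.round(train(X, y_or)).flatten())
```

Swapping `y_or` for another truth table (AND, NAND, ...) trains the same network on a different function.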

There are a couple of reasons you want to use sigmoid on the last layer:

1. You need to keep the weights from growing too much. The sigmoid will do just that.

2. You want your output to be between 0 and 1.

Try to remove it and print the output.
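To get a feel for the second point, here is a small sketch comparing the last layer with and without the sigmoid. The `layer` activations and `W2` weights are made-up numbers for illustration, not values from a trained network.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

# Hypothetical hidden-layer activations (4 samples, 3 hidden units).
layer = np.array([[0.1, 0.9, 0.5],
                  [0.8, 0.2, 0.5],
                  [0.9, 0.9, 0.1],
                  [0.2, 0.1, 0.7]])

# Hypothetical weights between layer and output.
W2 = np.array([[3.0], [-4.0], [2.0]])

raw = layer @ W2         # no activation: values can land anywhere on the real line
squashed = sigmoid(raw)  # with sigmoid: every value is strictly between 0 and 1

print(raw.flatten())
print(squashed.flatten())
```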

https://twitter.com/Alanmatys/status/1356602781193412614?s=20

Here are some (hand-wavy) notes about the backpropagation step.

Hopefully, these explain how W2 and W1 are computed.
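One way to write the updates down, as a sketch under my own assumptions: a squared-error loss E, sigmoid activations on both layers, element-wise products written as ⊙, and a learning rate η (possibly just 1). The symbols δ₁, δ₂, and η are introduced here for illustration and may not match the notation in the original notes.

```latex
\begin{aligned}
\text{layer} &= \sigma(X W_1), \qquad
\text{output} = \sigma(\text{layer}\, W_2), \qquad
\text{error} = y - \text{output} \\
E &= \tfrac{1}{2} \sum \text{error}^2, \qquad
\sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr) \\
\delta_2 &= \text{error} \odot \text{output} \odot (1 - \text{output}) \\
\delta_1 &= \bigl(\delta_2 W_2^{\top}\bigr) \odot \text{layer} \odot (1 - \text{layer}) \\
-\frac{\partial E}{\partial W_2} &= \text{layer}^{\top} \delta_2, \qquad
-\frac{\partial E}{\partial W_1} = X^{\top} \delta_1 \\
W_2 &\leftarrow W_2 + \eta\, \text{layer}^{\top} \delta_2, \qquad
W_1 \leftarrow W_1 + \eta\, X^{\top} \delta_1
\end{aligned}
```

The error signal for W1 has to travel back through W2 first, which is why the last set of weights shows up in the earlier layer's update.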
