Here we go. @NickKristof calls for PayPal to stop working with adult companies, with no mention of the real ways CSAM is shared online. Because private social media channels and the Dark Web aren't of interest to the evangelicals he works with. nytimes.com/2021/04/16/opi…

His source, again, is Laila Mickelwait, who, again, is not with Exodus Cry, an evangelical organization that wants to stop sex work, but with "The Justice Defense Fund."

And just for good I-don't-care-about-actual-data measure, he throws in the terrible study from the British Journal of Criminology and *drumroll* entirely misrepresents it. Now it shows *actual* sexual violence.

*Reminder* — It doesn't!

https://twitter.com/mikestabile/status/1379157350938865669

But perhaps my favorite @NickKristof-ism is the phrase "rapists, real or fake."

This is a man who has a history of misrepresenting sex trafficking and sex crimes. He's a true believer in what evangelicals are selling (at least when it comes to sex), and he leaves tremendous damage in his wake. washingtonpost.com/blogs/erik-wem…

This is the man who, in recklessly going after Backpage with claims similar to those he's now making about adult sites, helped push sex workers offline and onto the streets. theguardian.com/commentisfree/…

It is NOT that we shouldn't go after CSAM and revenge porn. They are a scourge. But there are ways to do it that don't strip sex workers of things like banking and online ads that actually protect them!

But that would take someone, either Kristof or the evangelicals who feed him information, believing that #sexworkisrealwork. It would take looking at the actual data. This column is gasoline on a fire. It's irresponsible and dangerous. Shame on @nytimes for platforming this.

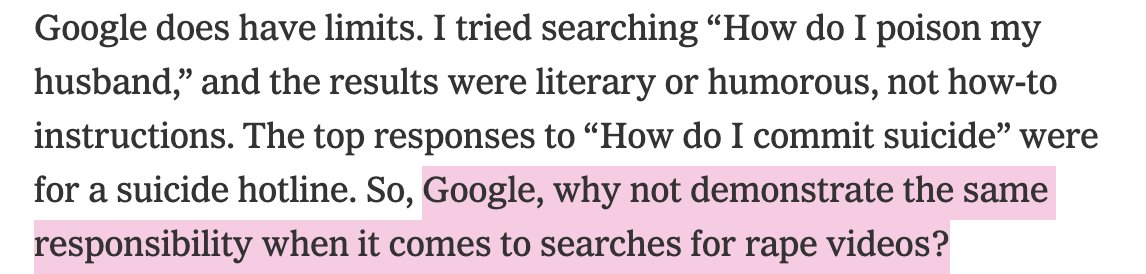

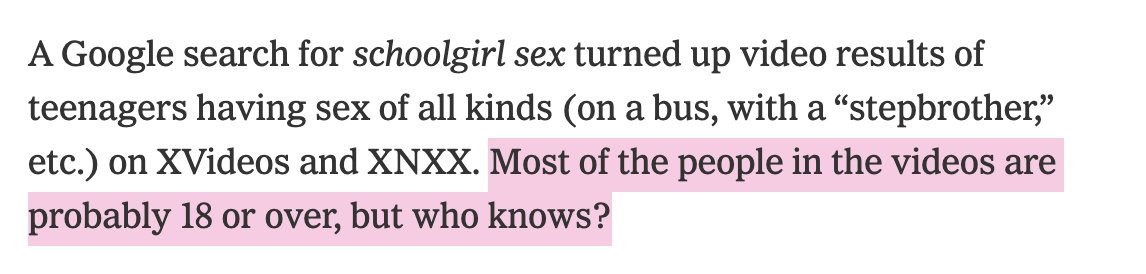

I can't stress enough how unserious @NickKristof is. I just googled "rape unconscious girl" which he has called on Google to remove. It's CLEARLY fictitious. I don't know who made it — most payment processors ban it — but he's demanding Google police fantasy.

This is no longer about CSAM or non-consensual content (if it ever was). If it were, he and Exodus Cry wouldn't constantly go down these wormholes where they pretend not to know the difference between fake and real. They want it all down.
