A friend messaged me:

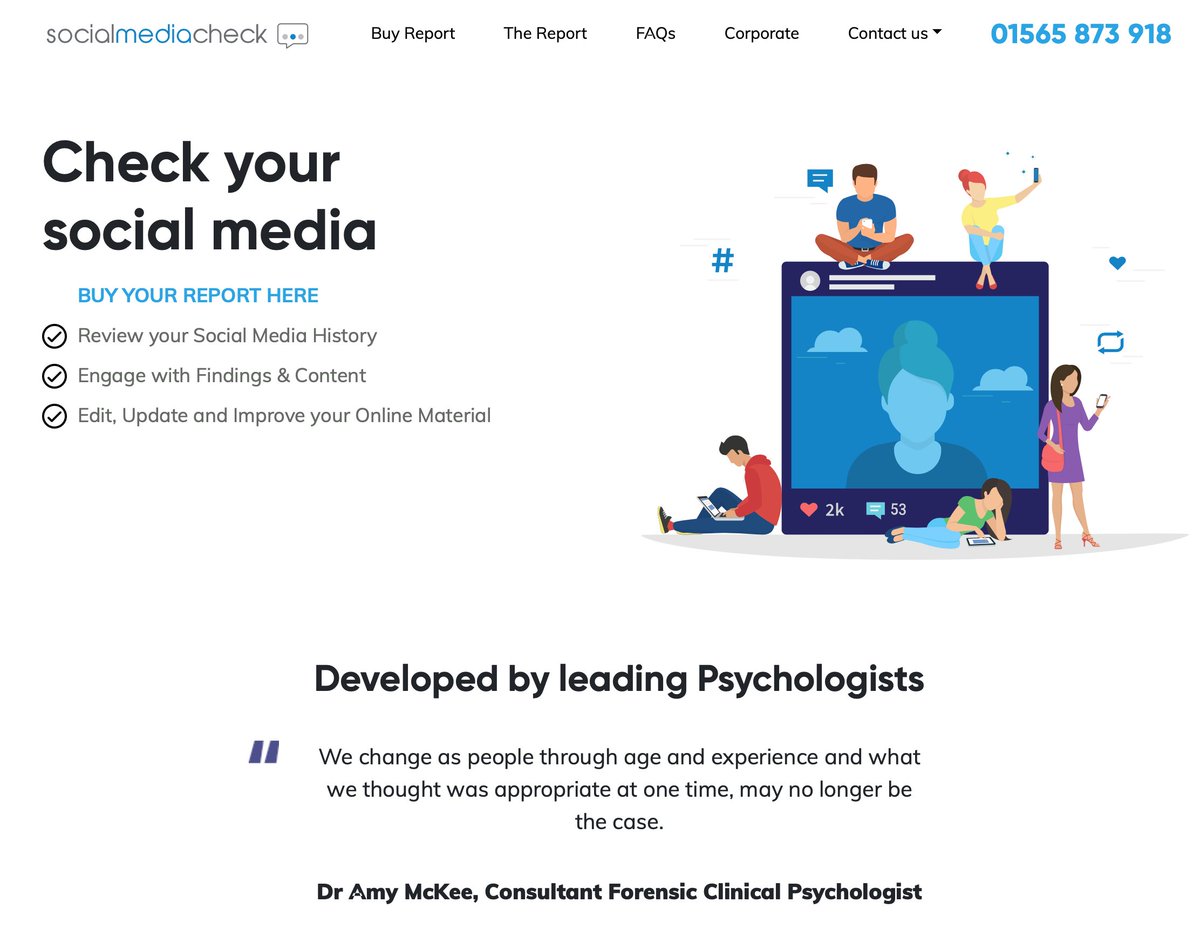

"What do you think about the security issues of typing social media passwords into an app from this company?"

socialmediacheck.com

Reply: "How much profanity am I allowed to use?"

I mean, this all sounds like a service dedicated to checking your own shit, right? Unless, like me, you are entirely shameless, that might be an issue for some:

Oh, wait, what?

"We only conduct a Social Media Check once the person to be checked (subject) has provided consent."

So who is the "your" in "Check your social media", then?

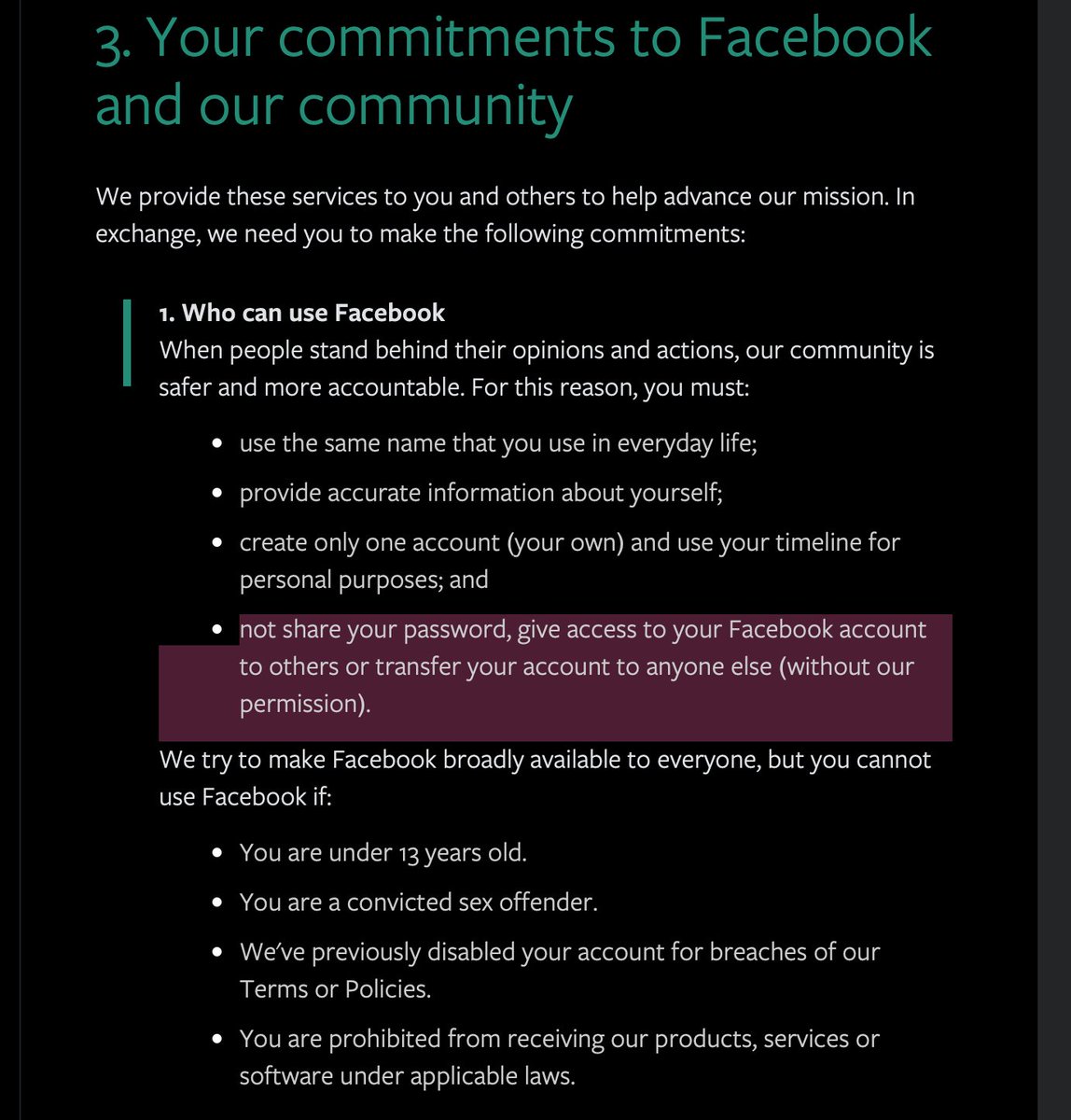

Let's ignore the fact that, for entirely sensible "don't do this, it's a really bad idea" reasons, it's against Facebook's terms of service to give away your access credentials to commercial third parties.

facebook.com/legal/terms

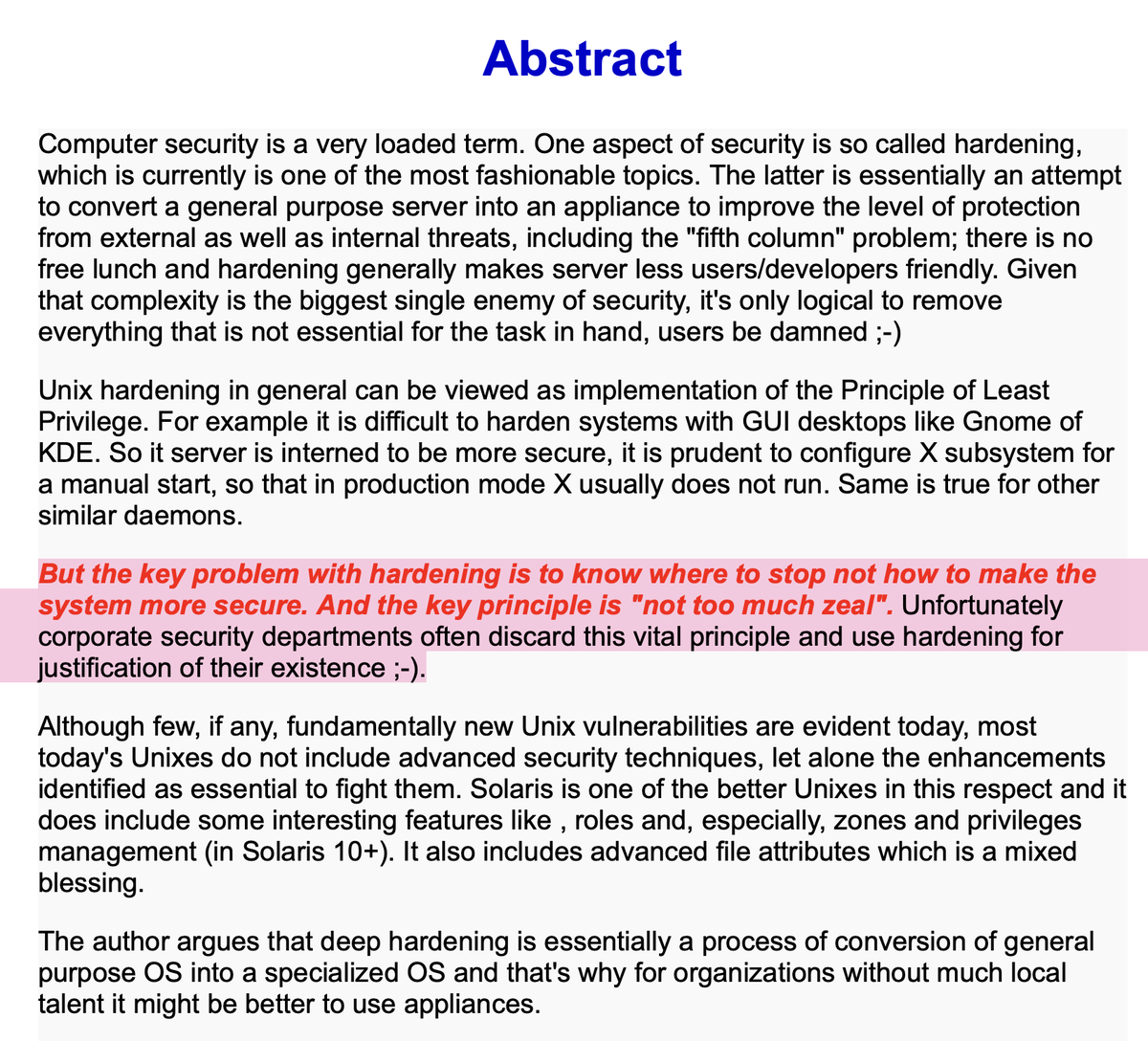

Not to mention that passwords are the WORST way to achieve data sharing with third parties.

But even if they were doing it legitimately and using the PLATFORM API, they'd be forbidden "to make eligibility determinations about people, including for housing, employment, insurance, education opportunities, credit, …benefits, or immigration status"

developers.facebook.com/terms/

And guess what the results of these checks are being used for? I'm not saying, but you can guess.

> 100% private (i.e. no human intervention at any stage of Social Media Check)

Wanna bet that they will have a problem with any account that has enabled 2FA?

How would they address that? Not to mention: addressing CAPTCHA, etc?

FAQs discreetly skirt "Does SocialMediaCheck.COM breach the terms of service of the various platforms?"

developer.twitter.com/en/developer-t…

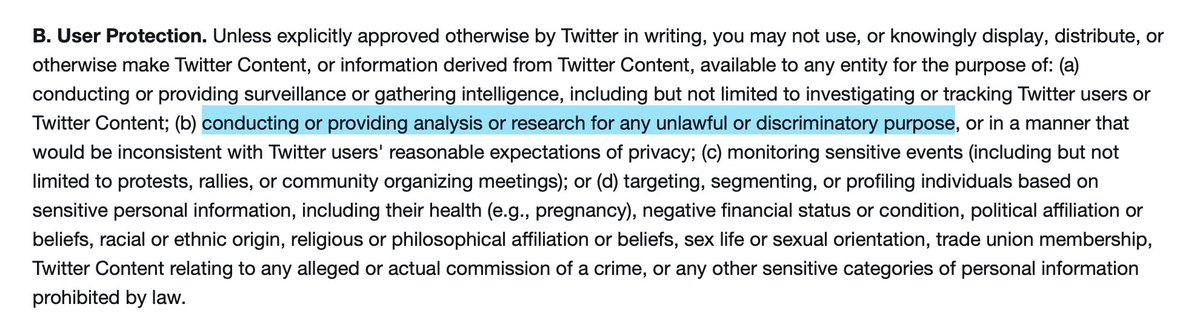

"you may not use…information derived from Twitter Content [for] …conducting or providing analysis or research for any unlawful or discriminatory purpose, or in a manner that would be inconsistent with Twitter users' reasonable expectations of privacy

"you may not use…information derived from Twitter Content [for] …conducting or providing analysis or research for any unlawful or discriminatory purpose, or in a manner that would be inconsistent with Twitter users' reasonable expectations of privacy

I am having a hard time getting clarity on the mechanism here; apparently you are supposed to authorise SocialMediaCheck to do its privacy-invasive thing, which sounds like OAuth; but there is also talk of logging in with your passwords... which sounds... equally or more bad.
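For anyone unsure what the difference looks like in practice, here is a minimal sketch of the first step of a standard OAuth 2.0 authorization-code flow. Everything named in it is an assumption for illustration: the endpoint, the client ID, the redirect URI and the `posts.read` scope are hypothetical and are not taken from SocialMediaCheck or from any real platform. I have no idea whether they do anything like this.

```python
import secrets
from urllib.parse import urlencode, urlparse, parse_qs

# Hypothetical endpoint, for illustration only; not any real platform's URL.
AUTHORIZE_ENDPOINT = "https://platform.example/oauth/authorize"


def build_authorization_url(client_id, redirect_uri, scopes, state=None):
    """Build the URL a third-party app redirects the user to.

    The user then logs in on the platform's own page, so the third party
    never handles the password; it only ever receives a scoped grant.
    """
    # Random anti-CSRF value, echoed back by the platform on the redirect.
    state = state or secrets.token_urlsafe(16)
    params = {
        "response_type": "code",      # ask for an authorization code
        "client_id": client_id,       # identifies the third-party app
        "redirect_uri": redirect_uri, # where the platform sends the user back
        "scope": " ".join(scopes),    # space-separated list of permissions
        "state": state,
    }
    return f"{AUTHORIZE_ENDPOINT}?{urlencode(params)}", state


url, state = build_authorization_url(
    client_id="hypothetical-checker-app",
    redirect_uri="https://checker.example/callback",
    scopes=["posts.read"],
)
print(url)
```

Note what is absent from the request: any password. The user sees which scopes are being asked for and can revoke the grant later, none of which is true once you have typed your password into somebody else's app.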

Question for @EerkeBoiten and @PrivacyMatters : is it possible to meaningfully provide consent for processing which you do not understand the extent of, upon data that you likewise no longer have full awareness of?

https://twitter.com/AlecMuffett/status/1396735465575825410?s=19
