*LocoProp: Enhancing BackProp via Local Loss Optimization*

by @esiamid @_arohan_ & Warmuth

Interesting approach to bridging the gap between first-order, second-order, and "local" optimization methods. 👇

/n

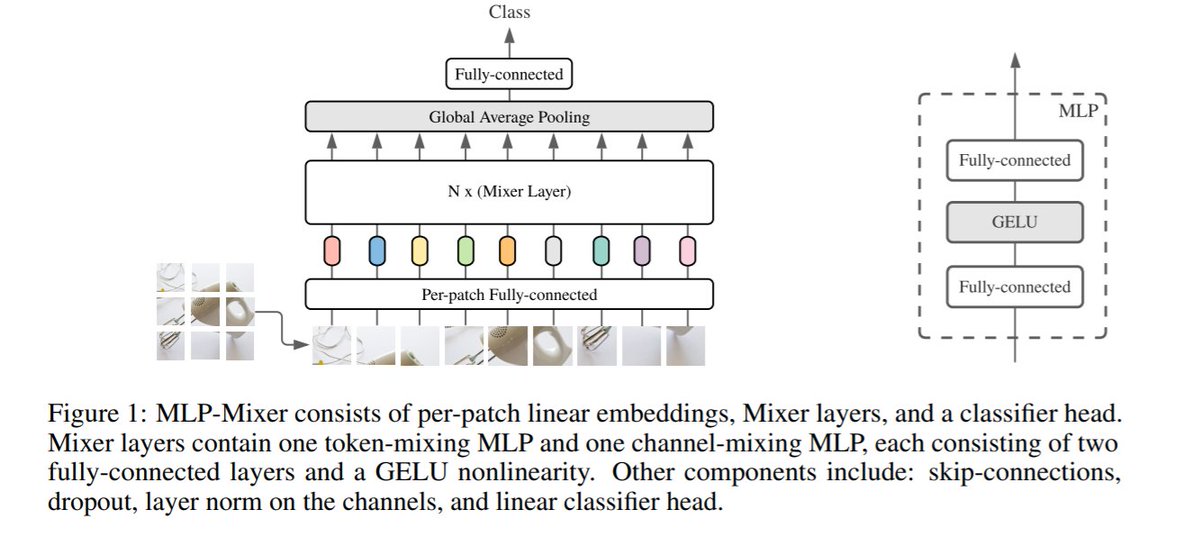

The key idea is to use a single GD step to define auxiliary local targets for each layer, at the level of either pre- or post-activations.

Then, optimization is done by solving local "matching" problems wrt these new variables.

/n
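
To make the idea concrete, here is a minimal NumPy sketch of "targets from one GD step, then local matching". It is not the authors' code (none was available when this thread was written). The two-layer tanh network, the squared matching loss on pre-activations, the step sizes `gamma` and `lr`, and all function names are assumptions made for illustration.

```python
import numpy as np

def forward(params, x):
    """Tiny two-layer MLP; returns pre-activations and the hidden activation."""
    W1, W2 = params
    a1 = W1 @ x        # pre-activation, layer 1
    h1 = np.tanh(a1)   # post-activation, layer 1
    a2 = W2 @ h1       # pre-activation, layer 2 (linear output)
    return a1, h1, a2

def preactivation_grads(params, x, y):
    """Backprop for L = 0.5 * ||a2 - y||^2, returning dL/da for each layer."""
    W1, W2 = params
    a1, h1, a2 = forward(params, x)
    g2 = a2 - y                          # dL/da2
    g1 = (W2.T @ g2) * (1.0 - h1 ** 2)   # dL/da1 through tanh
    return (a1, a2), (g1, g2), (x, h1)

def local_targets(pre_acts, grads, gamma):
    """One GD step on each pre-activation defines that layer's target."""
    return [a - gamma * g for a, g in zip(pre_acts, grads)]

def local_loss(W, layer_input, target):
    """Squared 'matching' loss for one layer (an assumed choice)."""
    return 0.5 * np.sum((W @ layer_input - target) ** 2)

def local_matching_step(W, layer_input, target, lr):
    """One GD step on the local loss; touches only this layer's weights."""
    residual = W @ layer_input - target
    return W - lr * residual @ layer_input.T

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))   # inputs, features x batch
y = rng.normal(size=(2, 8))   # regression targets
params = [0.5 * rng.normal(size=(3, 4)), 0.5 * rng.normal(size=(2, 3))]

# Targets and layer inputs are computed once, then held fixed.
pre_acts, grads, inputs = preactivation_grads(params, x, y)
targets = local_targets(pre_acts, grads, gamma=0.1)

before = [local_loss(W, h, t) for W, h, t in zip(params, inputs, targets)]
new_params = list(params)
for _ in range(10):
    # Each layer is updated independently of the others.
    new_params = [local_matching_step(W, h, t, lr=0.01)
                  for W, h, t in zip(new_params, inputs, targets)]
after = [local_loss(W, h, t) for W, h, t in zip(new_params, inputs, targets)]
```

Once the targets are fixed, the inner loop never needs another backward pass: each layer only sees its own input and its own target.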

What is intriguing is that the framework interpolates between multiple scenarios: the first step of solving the local problem recovers the original GD update, while the closed-form solution (in one case) resembles a preconditioned GD update. Optimization is "local" in the sense that it decouples across layers.

/n
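
A small numerical check of that interpolation, for a single linear layer. This is a sketch under assumptions, not the paper's exact formulation: the local objective here is a squared matching loss plus a proximity penalty `||W - W0||^2 / (2 * eta)`, and the names `gamma`, `eta`, `lr`, and `local_grad` are illustrative. Under these assumptions the first local GD step is a rescaled plain GD step, and the exact minimizer is the gradient right-multiplied by `(h h^T + I / eta)^{-1}`.

```python
import numpy as np

rng = np.random.default_rng(0)
h = rng.normal(size=(4, 16))    # layer input, features x batch
y = rng.normal(size=(3, 16))    # targets for L = 0.5 * ||W h - y||^2
W0 = rng.normal(size=(3, 4))    # current weights

g = W0 @ h - y                  # dL/da at the current weights
grad_W = g @ h.T                # dL/dW, the usual backprop gradient

gamma, eta, lr = 0.1, 1.0, 0.01
target = W0 @ h - gamma * g     # local target from one GD step on a

def local_grad(W):
    """Gradient of 0.5*||W h - target||^2 + ||W - W0||^2 / (2*eta) wrt W."""
    return (W @ h - target) @ h.T + (W - W0) / eta

# Scenario 1: a single local GD step starting from W0.
first_step = W0 - lr * local_grad(W0)
gd_step = W0 - lr * gamma * grad_W      # plain GD with step size lr * gamma

# Scenario 2: exact minimizer of the local problem.
A = h @ h.T + np.eye(h.shape[0]) / eta  # symmetric positive definite
closed_form = W0 - gamma * np.linalg.solve(A, grad_W.T).T

print(np.allclose(first_step, gd_step))
print(np.abs(local_grad(closed_form)).max())
```

The matrix `A` only involves this layer's inputs, which is one way to read the "second-order-like but local" flavor of the closed-form case.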

Paper is here: arxiv.org/abs/2106.06199

Looking forward to the code and to further explorations of this idea.
