This post rips Prophet (a forecasting package I helped create) to shreds, and I agree with most of it🥲 I always suspected the positive feedback was mostly from folks who’d had good results; conveniently, the author has condensed many bad ones into one place. microprediction.com/blog/prophet

It’s really freakin hard to make statistical software that generalizes across many problems. Forecasting (extrapolation) is among the hardest statistical problems. I don’t think anyone who’s seen me present Prophet would think I’ve misrepresented these facts.

The website might not do the best job of explaining all the limitations and it’s honestly been surprising to me how much sophistication people attribute to the underlying model. I hope a little cold water will help! There are often better approaches and packages out there.

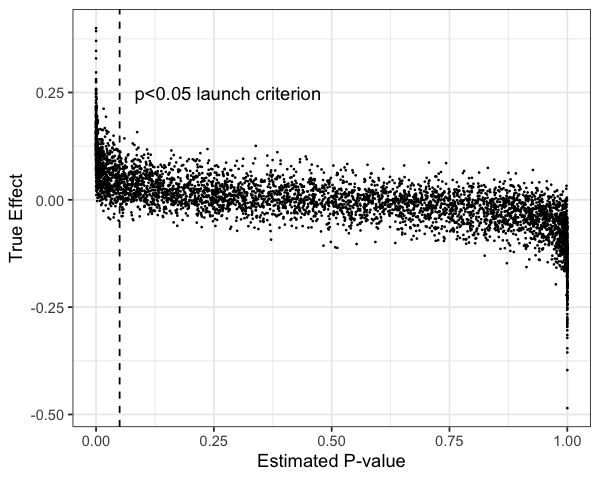

If I could build it again, I’d start with automating the evaluation of forecasts. It’s silly to build models if you’re not willing to commit to an evaluation procedure. I’d also probably remove most of the automation of the modeling. People should explicitly make these choices.

The lesson here is important and underrated: models and packages are just tools. We attribute magical powers to them, as if their creators have somehow anticipated all the idiosyncrasies of your particular problem. It’s unlikely they have, and there’s no substitute for evaluation.
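Here is a minimal sketch of what "committing to an evaluation procedure" could look like. It assumes a simple rolling-origin backtest, which is my choice for illustration and not something the thread prescribes. At each cutoff the forecaster sees only past data, predicts a fixed horizon, and the mean absolute error is averaged across cutoffs. The names `rolling_origin_mae`, `naive_last_value`, and `historical_mean` are hypothetical, and this is plain Python, not Prophet's API.

```python
from statistics import mean
from typing import Callable, List, Sequence

# Hypothetical interface: a forecaster gets the history and a horizon,
# and returns exactly `horizon` predictions.
Forecaster = Callable[[Sequence[float], int], List[float]]


def naive_last_value(history: Sequence[float], horizon: int) -> List[float]:
    """Baseline: repeat the last observed value."""
    return [history[-1]] * horizon


def historical_mean(history: Sequence[float], horizon: int) -> List[float]:
    """Baseline: predict the mean of everything seen so far."""
    return [mean(history)] * horizon


def rolling_origin_mae(
    series: Sequence[float],
    forecaster: Forecaster,
    initial: int,
    horizon: int,
    step: int = 1,
) -> float:
    """Average MAE over cutoffs; each forecast only sees data before its cutoff."""
    errors = []
    for cutoff in range(initial, len(series) - horizon + 1, step):
        train = series[:cutoff]
        actual = series[cutoff:cutoff + horizon]
        predicted = forecaster(train, horizon)
        errors.append(mean(abs(a - p) for a, p in zip(actual, predicted)))
    if not errors:
        raise ValueError("series too short for the chosen initial/horizon")
    return mean(errors)


if __name__ == "__main__":
    series = [10, 12, 11, 13, 12, 14, 13, 15]
    for name, f in [("naive", naive_last_value), ("mean", historical_mean)]:
        print(name, rolling_origin_mae(series, f, initial=4, horizon=2))
```

The point of fixing the procedure up front is that any fancier model has to beat these baselines under the same backtest before it earns its complexity.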

Almost forgot to link to my soundcloud:

https://twitter.com/seanjtaylor/status/1123278380369973248
