I have nothing to do tonight so I'm going to try to re-visualize this data. Starting a THREAD I'll keep updated as I go.

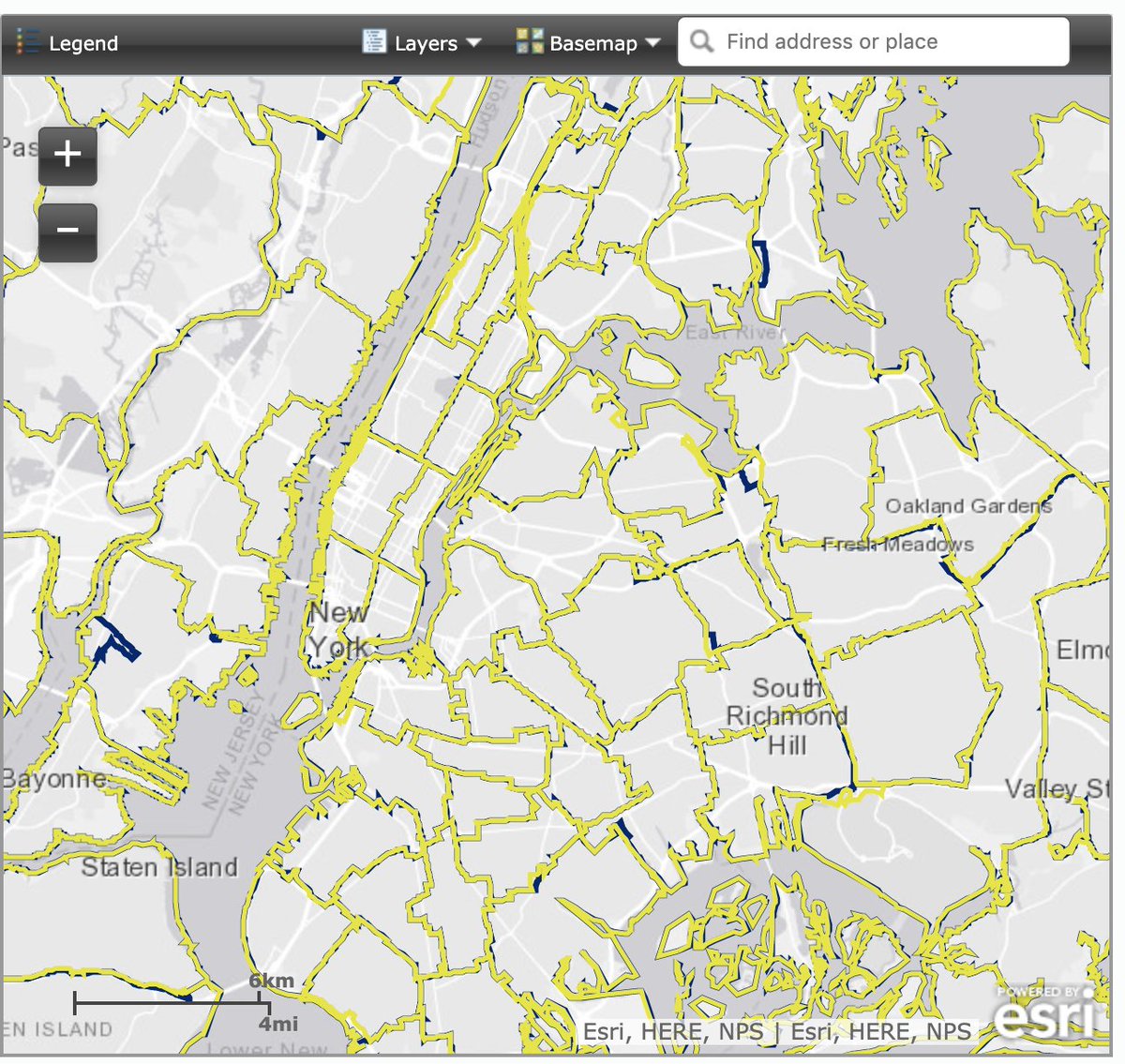

Looks like there's a variable called TRANTIME that is "Travel time to work." The map uses PUMAs as the geography, which are areas with ~100K people each.
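For anyone following along, here's a rough sketch of how you might collapse TRANTIME to one number per PUMA before mapping it. This is not the code from the screenshots. The toy data frame is made up, the `PERWT` weight column is my assumption about what the extract includes, and treating 0 as "not applicable" is also an assumption worth checking against the codebook.

```r
# Toy stand-in for a microdata extract; every value here is invented.
acs <- data.frame(
  PUMA     = c(101, 101, 101, 202, 202),
  TRANTIME = c(30, 0, 50, 20, 40),     # minutes; 0 assumed to mean "not applicable"
  PERWT    = c(100, 80, 100, 50, 150)  # assumed person-weight column
)

# Keep only people who actually report a commute.
commuters <- acs[acs$TRANTIME > 0, ]

# Weighted mean travel time within each PUMA.
by_puma <- sapply(
  split(commuters, commuters$PUMA),
  function(d) weighted.mean(d$TRANTIME, d$PERWT)
)

by_puma
```

The result is a named vector keyed by PUMA code, which you could then join to PUMA shapes for the map.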

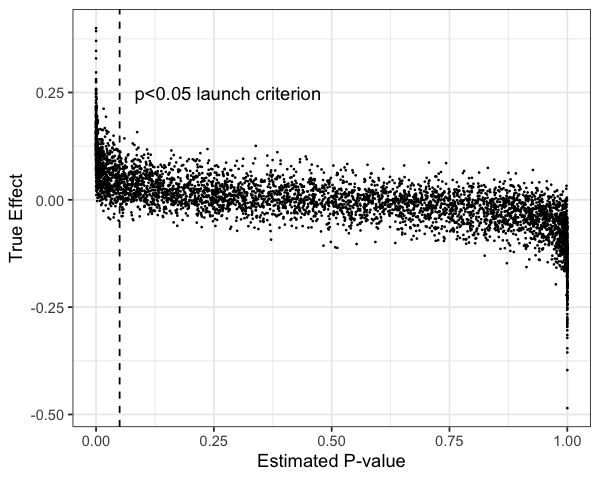

This doubt is a special feeling you have while analyzing data.

I await your friendly critiques of my R style and taste.