One of the more helpful things new analysts can do is to read about different sorts of attacks and understand the timeline of events that occurred in them. This enables something called forecasting, which is an essential skill. Let's talk about that. 1/

Any alert or finding that launches an investigation represents a point on a potential attack timeline. That timeline already exists, but the analyst has to discover its remaining elements to decide if it's malicious and if action should be taken. 2/

Good analysts look at an event and consider what other events could have led to it or followed it that would help them make a judgement about the sequence's disposition. 3/

This is where forecasting comes in. Analysts come up with potential timelines that could exist. Then, they ask investigative questions to try and narrow down the one that actually exists. This is bridging perception to reality. 4/

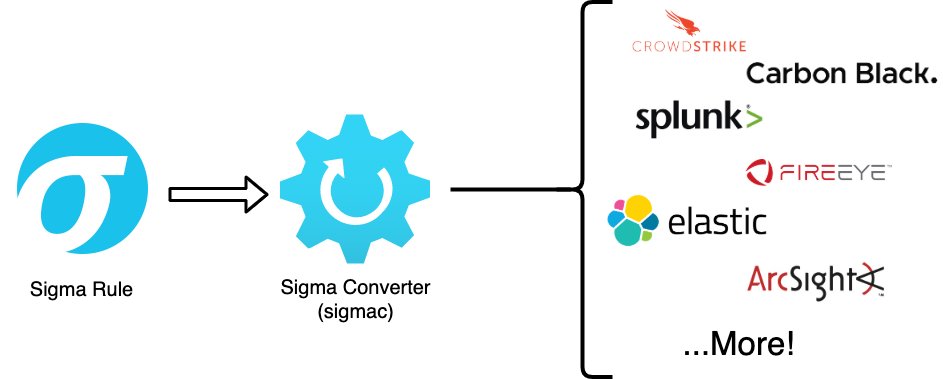

You can try this yourself. Consider an alert from this Sigma rule where a user was denied access when trying to log in to a system through RDP: github.com/SigmaHQ/sigma/…. 5/

Now, take a few minutes and think about the sequence of events that could be associated with this alert. It helps to break this up into preceding (what led to this) and succeeding (what happened after this) portions of the timeline. 6/

Starting w/ preceding timeline, this might be malicious if you see:

- Similar attempts from the source

- Suspicious downloads from the source

- Suspicious executions from the source

- Events occurring outside normal user baseline

- Evidence of cred dumping on the source

7/

The idea here is that if an attacker is making these attempts, they probably have some foothold on the source host which would mean some other specific events likely already occurred. 8/

For the succeeding portion of the timeline, if it were malicious you might see:

- Similar attempts on other hosts

- Continued scanning from the source

- Attempts to access other services on the target

- An eventual successful RDP login

- Attempts to use those creds elsewhere

9/

The idea here is that the attacker might be targeting this system for a reason and may make additional attempts to access it using stolen credentials after this attempt failed. Or they might use stolen creds somewhere else. 10/

In this scenario, the analyst is able to ask focused investigative questions because they know 1) what would have had to have happened for the attacker to get here, 2) what else the attacker might do based on the result of this event. 11/
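To make that concrete, here is a minimal sketch of one forecasted timeline written down as data. The `Hypothesis` class, its field names, and the `support` score are all assumptions made for illustration, not part of any real tool. The event strings come from the preceding and succeeding lists above, and the `observed` set is made up. The score is a toy: it only counts how many expected events have turned up, and it is no substitute for an analyst's judgement.

```python
from dataclasses import dataclass, field


@dataclass
class Hypothesis:
    """One candidate timeline around an alert (illustrative names only)."""
    name: str
    preceding: list[str] = field(default_factory=list)   # what led to the alert
    succeeding: list[str] = field(default_factory=list)  # what happened after it

    def questions(self) -> list[str]:
        """Turn each expected event into an investigative question."""
        before = [f"Before the alert: is there evidence of {e}?" for e in self.preceding]
        after = [f"After the alert: is there evidence of {e}?" for e in self.succeeding]
        return before + after

    def support(self, observed: set[str]) -> float:
        """Fraction of expected events actually found (a toy score)."""
        expected = self.preceding + self.succeeding
        if not expected:
            return 0.0
        return sum(e in observed for e in expected) / len(expected)


malicious = Hypothesis(
    name="attacker with a foothold on the source",
    preceding=[
        "similar attempts from the source",
        "suspicious downloads from the source",
        "suspicious executions from the source",
        "events outside the normal user baseline",
        "cred dumping on the source",
    ],
    succeeding=[
        "similar attempts on other hosts",
        "continued scanning from the source",
        "attempts to access other services on the target",
        "an eventual successful RDP login",
        "attempts to use those creds elsewhere",
    ],
)

# Made-up findings from the investigation so far.
observed = {"similar attempts from the source", "continued scanning from the source"}

for q in malicious.questions():
    print(q)
print(malicious.support(observed))
```

Each expected event becomes one focused question, and every answer moves you closer to or further from that timeline being the real one.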

Analysts gain this knowledge by dissecting and internalizing other attacks. That occurs at multiple levels of abstraction. 12/

The high level describes broad attack life cycles. For example, knowing that recon often occurs before exploitation or that establishing a foothold often comes after the initial compromise. 13/

The low level describes specific system stimulus and response. For example, knowing that PsExec executed on a source system means that some application may have executed on a remote target. Or, that exec of a cred dumping tool may mean use of those creds on other systems. 14/
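One way to picture this low-level knowledge is as a lookup from a stimulus to the responses worth hunting for. This is only a sketch: the `EXPECTED_RESPONSES` table and `forecast` function are hypothetical names, and the two entries are just the examples from this tweet. A real analyst carries far more of these in their head.

```python
# Hypothetical lookup: observed stimulus -> responses worth checking for.
EXPECTED_RESPONSES = {
    "psexec executed on source": [
        "application executed on a remote target",
    ],
    "cred dumping tool executed": [
        "dumped creds used on other systems",
    ],
}


def forecast(stimulus: str) -> list[str]:
    """Return the follow-on events to look for, or an empty list if unknown."""
    return EXPECTED_RESPONSES.get(stimulus.lower(), [])


print(forecast("PsExec executed on source"))
print(forecast("something never seen before"))
```

The empty result for an unfamiliar stimulus is the point: you can only forecast from patterns you've already internalized.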

I like to think of this forecasting process like Doctor Strange sitting around looking into all the potential futures. But instead, you're looking at all the different pasts that could have occurred. 15/

Of course, a lot goes into deciding which timeline you want to try and prove exists, and only one actually does. Something I talk about often is that, in many cases, it's fastest to try and prove something is benign before you prove it is malicious. 16/

In the earlier example from this thread, it's also likely that the user on the source machine typed the wrong system name or IP when using RDP and it was a mistake. That's easy to investigate -- just call them. But it still requires forecasting. 17/

All told, as a new analyst you want to read about lots of different attacks and understand the timeline of events that happened and what led from one to another. You need to do this with different types of attacks (spyware, ransomware, automated, human-driven, and so on). 18/

Remember, expertise isn't truly measured in years alone. It's also measured in the diversity of your experiences. We can't all experience lots and lots of breaches, but you can read about them! There's no shortage of examples. 19/

The ability to forecast potential timelines from various points in an investigation is a core, crucial analyst skill. Like all those other skills, you can get better at it with deliberate practice. 20/20
