Alright, let's add some substance to this Pegasus discussion. Contrary to what you might read, research into NSO has been going on for years and has involved a lot of great research groups (@citizenlab, @kaspersky, @Lookout, to name a few). It has also included leaks.

Folks are speculating about how we might know about the targets of Pegasus customers. NSO claims that they don't know their customers' targets, yet at the same time they claim to know that none of the @AmnestyTech infections are real. Two obviously incompatible statements.

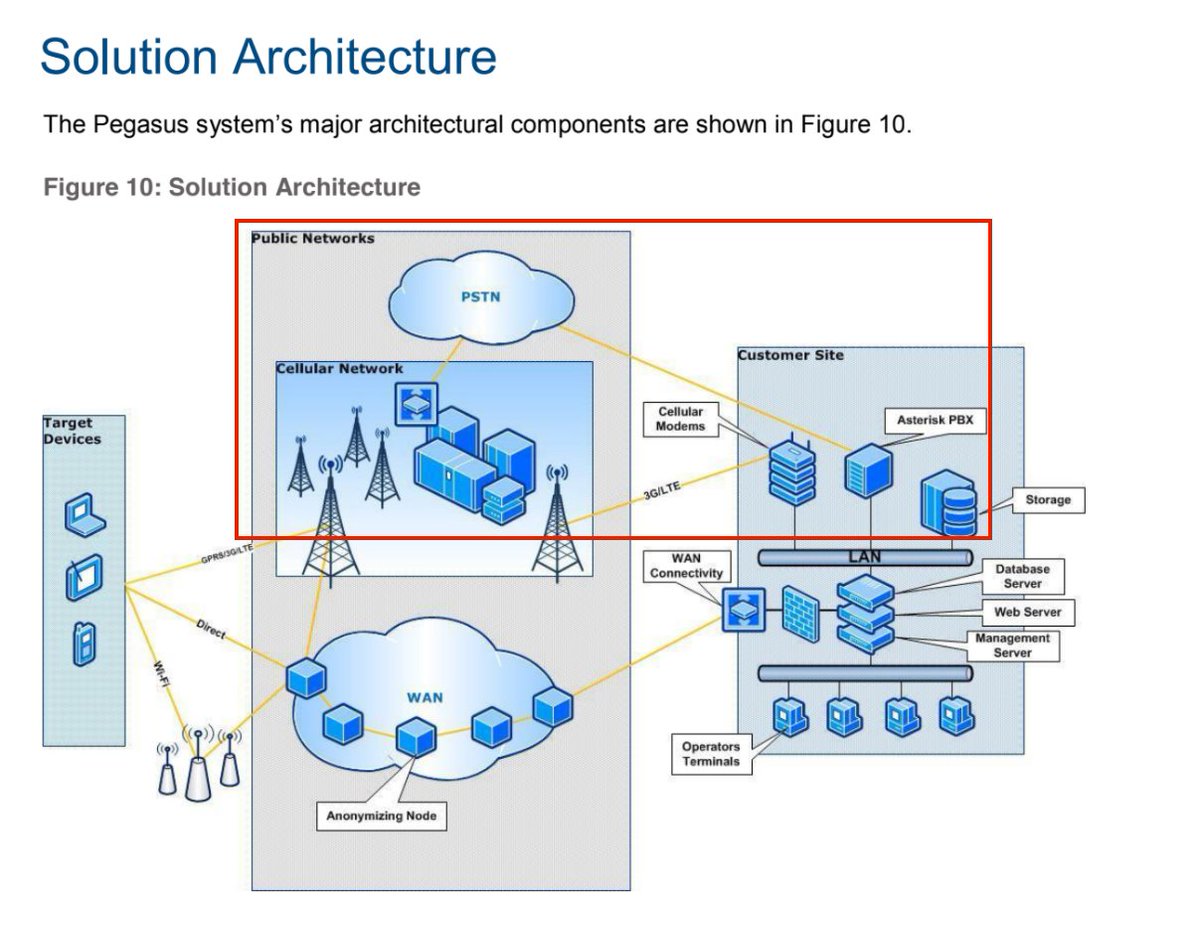

Assuming NSO doesn't have access to their customers' targets, a list of targets of interest would have to come from a structural fault in the agent/exploit delivery infrastructure that NSO uses. We have a high-level view of how that system is architected.

A quick overview of how the solution works: the system is deployed on a customer site (usually one standing rack of servers) and provides multiple ways to infect targets (remote, local WAN, physical, etc.). The customer-operator puts in a phone number and 'voila'.

NSO is selling the point-and-click outcome of what's actually a complex process. What the operator doesn't see is the testing of the phone number to see that it's valid (#1), fingerprinting the phone type/OS version (#2), generating a one-time delivery mechanism for the exploit (#3)...

Whether the exploit is one-click or zero-click depends on what the cellular service provider allows (OTA vs SMS push) and what valid exploit NSO has on hand. These three points are important because that's where NSO is most likely to (a) coincidentally see targets or (b) accidentally leak targets.

What should we expect to see for these different scenarios (numbered above)?

(#1): One or more third-party HLR lookup service(s) used to validate phone numbers at time of being nominated for targeting (MOST LIKELY SOURCE OF THE LIST)

Note that NSO has basically said as much in their own early response:

washingtonpost.com/investigations…

What would that (#1) list look like? Timestamped requests to check a broad swath of phone numbers for targeting including real victims, potential victims, incompatible phones, outdated numbers, old infections, etc. It would explain inflated numbers, broad base of customers, etc.
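To make the "inflated numbers" point concrete, here is a minimal toy sketch of why a lookup log is a superset of actual infections. Everything in it is an assumption for illustration: the record fields, the outcome labels, and the placeholder numbers are made up, and a real lookup log would not carry an outcome at all (that would only come from forensics on a device).

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class LookupRecord:
    timestamp: str  # when the number was checked (hypothetical field)
    number: str     # placeholder identifier, not a real phone number
    outcome: str    # hypothetical label; a real lookup log would not know this


# Entirely made-up records, for illustration only.
LOG = [
    LookupRecord("2019-01-01T10:00:00Z", "+000-0001", "infected"),
    LookupRecord("2019-01-01T10:05:00Z", "+000-0002", "incompatible_phone"),
    LookupRecord("2019-01-02T09:00:00Z", "+000-0003", "outdated_number"),
    LookupRecord("2019-01-03T14:30:00Z", "+000-0004", "never_attacked"),
    LookupRecord("2019-01-04T08:15:00Z", "+000-0001", "infected"),  # re-check
]


def summarize(log):
    """Compare the size of the lookup list with the number of real infections."""
    # Collapse repeated lookups so each number is counted once.
    outcome_by_number = {record.number: record.outcome for record in log}
    counts = Counter(outcome_by_number.values())
    return {
        "lookups": len(log),
        "unique_numbers": len(outcome_by_number),
        "infected": counts["infected"],
    }


summary = summarize(LOG)
print(summary)
```

In this toy data, five lookups cover four distinct numbers and only one infection, which is the shape of discrepancy you would expect between a validation list and a confirmed victim list.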

(#2): Depends on implementation specifics not spelled out by NSO. Likely an iframe/JS landing page hosted somewhere (AWS?) that serves as a precursor for attacks. If it's the same for all customers, this is a second possible source, but it is more likely to include IPs, not numbers.

(#3): NSO's own exploit delivery infrastructure. As I've mentioned before, offensive tooling providers have learned not to let their (not very bright) customers play with valuable exploits themselves. They host infrastructure/portals that do the exploit weaponizing for them.

(#3) is where NSO is likely to have an opportunity to validate whether the targets are real. They would also have the opportunity to make sure they purge that information on their end. While it's possible that targeting info could leak from here, I consider it less likely.

Alternatively, if you want to lend credence to NSO's rebuttals, remember that there are years of publications and forensic investigations on Pegasus infections in UAE and Mexico (to name a few) that can't be waved away.

I really hope this quells some of the uncertainty around this issue and allows folks to focus on the real problem: the for-profit sale of unregulated surveillance technologies to countries with neither a legal framework nor a good track record on human rights.

(Source: Leaked Pegasus Marketing Material circa 2019- s3.documentcloud.org/documents/4599… )

/End

@KimZetter @dangoodin001 @kaepora @runasand @iblametom @nicoleperlroth @ryanaraine @jsrailton @lorenzofb @evacide @thegrugq
