How can you learn to use the @fastdotai framework to its fullest extent? A thread on what I believe is the most important lesson you can teach yourself: 👇

1/

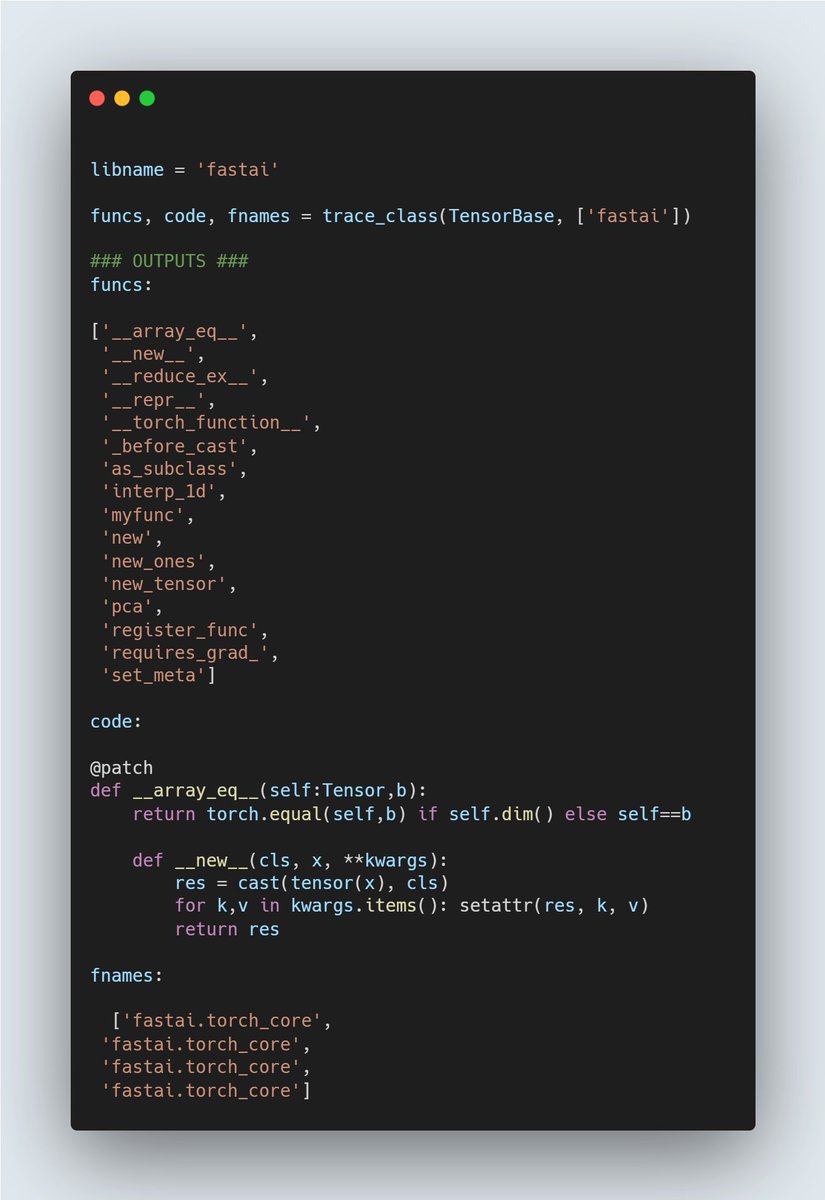

First: fixing a misconception. At its core, fastai is just PyTorch. It uses torch tensors, trains with the torch autograd system, and uses torch models.

Don't believe me? Let's talk about how you can learn to see this and use it.

2/

Start with the PETS DataBlock and DataLoaders, but write your own PyTorch training loop. Use cnn_learner if you want a starting point, and grab the model from learn.model.

Train your model in the torch loop and see that you can reach (similar) scores.

3/
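
A minimal sketch of that loop in plain PyTorch, assuming the loaders yield `(inputs, targets)` batches, as fastai's `dls.train` and `dls.valid` do. It keeps the learning rate constant, in the spirit of `fit` rather than `fit_one_cycle`. The helper name `torch_fit` is hypothetical, and the toy 2-D data at the bottom only stands in for PETS so the snippet runs without a download.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def torch_fit(model, train_dl, valid_dl, loss_fn, opt, epochs, device="cpu"):
    """Hypothetical helper: a plain PyTorch loop with a constant learning rate.

    Returns one (valid_loss, accuracy) tuple per epoch.
    """
    model.to(device)
    history = []
    for _ in range(epochs):
        model.train()  # training mode: dropout on, BatchNorm stats update
        for xb, yb in train_dl:
            xb, yb = xb.to(device), yb.to(device)
            loss = loss_fn(model(xb), yb)
            loss.backward()  # autograd fills in .grad for each parameter
            opt.step()       # the optimizer updates the weights
            opt.zero_grad()  # clear gradients before the next batch

        model.eval()  # evaluation mode, and no gradient tracking below
        total_loss, correct, n = 0.0, 0, 0
        with torch.no_grad():
            for xb, yb in valid_dl:
                xb, yb = xb.to(device), yb.to(device)
                out = model(xb)
                total_loss += loss_fn(out, yb).item() * len(yb)
                correct += (out.argmax(dim=1) == yb).sum().item()
                n += len(yb)
        history.append((total_loss / n, correct / n))
    return history


# Toy 2-class data standing in for PETS, so nothing needs downloading.
torch.manual_seed(0)
X, Xv = torch.randn(256, 2), torch.randn(128, 2)
y, yv = (X[:, 0] > 0).long(), (Xv[:, 0] > 0).long()
train_dl = DataLoader(TensorDataset(X, y), batch_size=32, shuffle=True)
valid_dl = DataLoader(TensorDataset(Xv, yv), batch_size=64)
model = nn.Linear(2, 2)
opt = torch.optim.SGD(model.parameters(), lr=0.5)
history = torch_fit(model, train_dl, valid_dl, nn.CrossEntropyLoss(), opt, epochs=20)
```

To try it on PETS, you would roughly pass `learn.model`, `dls.train` and `dls.valid` from your fastai setup, with `nn.CrossEntropyLoss()`, an optimizer over `learn.model.parameters()`, and `device=dls.device`; treat that wiring as a starting point rather than a tested recipe.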

Don't reach for fit_one_cycle, or any other complex scheduler, when doing this. Just use fit.

Start with a completely unfrozen model, then freeze it, in both fastai and PyTorch. Learn what the training API is wrapping for you.
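
In fastai the switch is `learn.freeze()` and `learn.unfreeze()`. In plain PyTorch you can get roughly the same effect by toggling `requires_grad`, as sketched below. The sketch assumes the model is an `nn.Sequential` of `(body, head)`, which is how `cnn_learner` builds its models, and keeps BatchNorm layers in the body trainable to mirror fastai's default `train_bn=True`. `freeze_body`, `unfreeze_all` and `n_trainable` are hypothetical helpers, and the tiny model is only a stand-in for `learn.model`.

```python
import torch
from torch import nn

BN_TYPES = (nn.BatchNorm1d, nn.BatchNorm2d, nn.BatchNorm3d)


def freeze_body(model: nn.Sequential, train_bn: bool = True) -> None:
    """Hypothetical helper: freeze model[0] (body), keep model[1] (head) trainable."""
    body, head = model[0], model[1]
    for p in body.parameters():
        p.requires_grad_(False)
    for p in head.parameters():
        p.requires_grad_(True)
    if train_bn:
        # Keep the BatchNorm weights and biases inside the body trainable.
        for m in body.modules():
            if isinstance(m, BN_TYPES):
                for p in m.parameters():
                    p.requires_grad_(True)


def unfreeze_all(model: nn.Module) -> None:
    """Hypothetical helper: make every parameter trainable again."""
    for p in model.parameters():
        p.requires_grad_(True)


def n_trainable(model: nn.Module) -> int:
    """Hypothetical helper: count parameters that will receive gradients."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


# Toy stand-in for a cnn_learner model: nn.Sequential(body, head).
model = nn.Sequential(
    nn.Sequential(nn.Conv2d(3, 8, 3), nn.BatchNorm2d(8), nn.ReLU()),
    nn.Sequential(nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(8, 2)),
)
```

Frozen parameters stop receiving gradients; with `opt.zero_grad(set_to_none=True)` (the default since PyTorch 2.0) their `.grad` stays `None`, and PyTorch's built-in optimizers skip such parameters. Freezing does not stop BatchNorm from updating its running statistics in `model.train()` mode, though.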

But what about the data?

4/

Use PyTorch Datasets and DataLoaders to rebuild the PETS dataset, and use them with cnn_learner. You'll need to set a .c attribute (the number of classes) on them, though, so cnn_learner knows how to build your model.

5/
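
A minimal sketch of a PETS-style torch `Dataset`, assuming the usual PETS file naming where the breed is everything before the final `_<number>.jpg` (e.g. `great_pyrenees_173.jpg`). Image loading is passed in as a function so the sketch doesn't commit to a decoding or resizing pipeline, and the number of classes is attached to both loaders as `.c`. `PetsDataset`, `make_loaders` and `load_image` are hypothetical names, and the demo uses fake file names with blank tensors instead of real images.

```python
import re
from pathlib import Path

import torch
from torch.utils.data import DataLoader, Dataset

# Breed label = everything before the trailing "_<number>.jpg".
LABEL_RE = re.compile(r"^(.+)_\d+\.jpg$")


class PetsDataset(Dataset):
    """Hypothetical PETS-style dataset returning (image_tensor, class_index)."""

    def __init__(self, files, load_image, vocab=None):
        self.files = [Path(f) for f in files]
        self.load_image = load_image  # hypothetical callable: Path -> image tensor
        labels = [LABEL_RE.match(f.name).group(1) for f in self.files]
        # Reuse the training vocab for validation so class indices line up.
        self.vocab = vocab if vocab is not None else sorted(set(labels))
        self.o2i = {name: i for i, name in enumerate(self.vocab)}
        self.targets = [self.o2i[label] for label in labels]

    def __len__(self):
        return len(self.files)

    def __getitem__(self, i):
        return self.load_image(self.files[i]), torch.tensor(self.targets[i])


def make_loaders(train_files, valid_files, load_image, bs=64):
    """Hypothetical helper: build torch DataLoaders and attach `.c`."""
    train_ds = PetsDataset(train_files, load_image)
    valid_ds = PetsDataset(valid_files, load_image, vocab=train_ds.vocab)
    train_dl = DataLoader(train_ds, batch_size=bs, shuffle=True)
    valid_dl = DataLoader(valid_ds, batch_size=bs)
    # cnn_learner reads the number of classes from `.c`.
    for dl in (train_dl, valid_dl):
        dl.c = len(train_ds.vocab)
    return train_dl, valid_dl


# Demo with fake file names and blank "images" instead of real PETS files.
fake_train = ["Abyssinian_1.jpg", "beagle_2.jpg", "great_pyrenees_3.jpg", "beagle_4.jpg"]
fake_valid = ["beagle_5.jpg", "Abyssinian_6.jpg"]
train_dl, valid_dl = make_loaders(
    fake_train, fake_valid, load_image=lambda path: torch.zeros(3, 64, 64), bs=2
)
xb, yb = next(iter(valid_dl))
```

The two loaders could then be wrapped with fastai's `DataLoaders(train_dl, valid_dl)` before going to `cnn_learner`; since fastai's `DataLoaders` forwards unknown attributes to its training loader, `dls.c` should pick up the `.c` set here. Depending on your fastai version, you may also need `normalize=False`, because plain torch loaders have no fastai transform pipeline to add normalization to.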

Once you've seen how both work, bring them together: torch loaders, a torch model, and a torch loop.

Then go to the other end (or almost): torch loaders, a torch model, and fastai's base Learner class.

6/

What did this exercise teach?

It opened your eyes to how fastai really works, and to how you can swap any piece of it for its PyTorch equivalent yourself.

After this, dive deep into the callback system to understand what fastai's training loop does and how it does it.

7/
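
To get a feel for the idea before reading fastai's source, here is a toy loop that fires named events and lets callbacks hook into them. It is not fastai's implementation: it only borrows a few of fastai's event names (`before_fit`, `before_batch`, `after_loss`, `after_batch`, `after_epoch`, `after_fit`), and `ToyLearner`, `ToyCallback` and `LossRecorder` are hypothetical classes written for illustration.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


class ToyCallback:
    """Hypothetical base class: subclasses define only the events they need."""
    learn = None


class ToyLearner:
    """Hypothetical minimal learner whose loop fires named events."""

    def __init__(self, model, train_dl, loss_fn, opt, cbs=()):
        self.model, self.train_dl = model, train_dl
        self.loss_fn, self.opt = loss_fn, opt
        self.cbs = list(cbs)
        for cb in self.cbs:
            cb.learn = self  # each callback can read and change learner state

    def __call__(self, event):
        # Run cb.<event>() on every callback that defines that method.
        for cb in self.cbs:
            getattr(cb, event, lambda: None)()

    def fit(self, epochs):
        self("before_fit")
        for self.epoch in range(epochs):
            self.model.train()
            for self.xb, self.yb in self.train_dl:
                self("before_batch")
                self.pred = self.model(self.xb)
                self.loss = self.loss_fn(self.pred, self.yb)
                self("after_loss")
                self.loss.backward()
                self.opt.step()
                self.opt.zero_grad()
                self("after_batch")
            self("after_epoch")
        self("after_fit")


class LossRecorder(ToyCallback):
    """Hypothetical callback that records per-batch and per-epoch mean loss."""

    def before_fit(self):
        self.losses, self.epoch_means = [], []

    def after_batch(self):
        self.losses.append(self.learn.loss.item())

    def after_epoch(self):
        n = len(self.learn.train_dl)  # batches per epoch
        self.epoch_means.append(sum(self.losses[-n:]) / n)


# Toy data and model, just to exercise the events.
torch.manual_seed(0)
X = torch.randn(128, 2)
y = (X[:, 0] > 0).long()
dl = DataLoader(TensorDataset(X, y), batch_size=32, shuffle=True)
model = nn.Linear(2, 2)
recorder = LossRecorder()
learner = ToyLearner(model, dl, nn.CrossEntropyLoss(),
                     torch.optim.SGD(model.parameters(), lr=0.5), cbs=[recorder])
learner.fit(5)
```

The design point it mirrors is that the loop itself stays fixed, while behavior such as logging, scheduling or early stopping lives in callbacks that read (and may modify) the learner's state at each event.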

I have a video on this here:

8/8
