Machine learning models can be extremely powerful.

But there's a catch: they are notoriously hard to optimize for any given problem. There are just too many variables that we could change.

Thread: On keeping your sanity when training a model.

I'm sure you've heard about "hyperparameters."

Think of them as "configuration settings."

Depending on the settings you choose, your model will perform differently.

Sometimes better. Sometimes worse.
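To make "sometimes better, sometimes worse" concrete, here is a minimal sketch that uses a toy problem instead of a real model. Plain gradient descent minimizes f(w) = (w - 3)^2 for the same number of steps with three different learning rates. The function `train` and the chosen values are hypothetical and only illustrate the idea.

```python
def train(learning_rate: float, steps: int = 20) -> float:
    """Hypothetical toy 'training': minimize f(w) = (w - 3)**2 with gradient descent.

    Returns the final loss, so we can compare learning rates.
    """
    w = 0.0  # starting point; the initial loss is (0 - 3)**2 = 9
    for _ in range(steps):
        grad = 2 * (w - 3)  # derivative of (w - 3)**2
        w -= learning_rate * grad
    return (w - 3) ** 2

# Same procedure, same number of steps: only the hyperparameter changes.
for lr in (0.01, 0.1, 1.1):
    print(f"learning_rate={lr}: final loss={train(lr):.4g}")
```

With 0.01 the loss drops slowly. With 0.1 it gets close to the minimum. With 1.1 every step overshoots further, and the loss ends up far worse than where it started.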

Here are some of the settings that we could change when building a model:

• learning rate

• batch size

• epochs

• optimizer

• regularization

The list goes on and on.

There's usually a combination of values for these hyperparameters that's ideal for your problem.

But finding those values is a headache. There are too many possibilities!

We need something better.
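A rough illustration of why the possibilities pile up: even a handful of candidate values per setting multiplies into many full training runs. The grid below is hypothetical and does not come from either notebook.

```python
import itertools
import math

# Hypothetical candidate values for each hyperparameter in the list above.
search_space = {
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3, 1e-2],
    "batch_size": [16, 32, 64, 128],
    "epochs": [10, 20, 50],
    "optimizer": ["sgd", "adam", "rmsprop"],
    "l2_regularization": [0.0, 1e-4, 1e-3, 1e-2],
}

# Trying everything exhaustively means one full training run per combination.
n_combinations = math.prod(len(values) for values in search_space.values())
print(f"{n_combinations} combinations")

# itertools.product enumerates every combination; peek at the first one.
first = next(itertools.product(*search_space.values()))
print(dict(zip(search_space, first)))
```

This prints 720 combinations. Adding one more candidate value to any single setting multiplies that total again.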

Unfortunately, I've seen many people test different hyperparameter values by hand.

This is tedious, suboptimal, and will rarely lead to good results.

We should automate this process.
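One simple way to automate the search is random search. Instead of trying every combination, it samples a fixed budget of configurations and keeps the best one. The sketch below assumes a hypothetical `evaluate` function that stands in for "train a model and return its validation loss." KerasTuner and Optuna provide smarter strategies than this, but the loop has the same overall shape.

```python
import math
import random


def evaluate(learning_rate: float, batch_size: int) -> float:
    """Hypothetical stand-in for training a model and returning validation loss.

    A real version would train and score a model. This toy function simply
    has its minimum at learning_rate=1e-3 and batch_size=64.
    """
    return (math.log10(learning_rate) + 3) ** 2 + ((batch_size - 64) / 64) ** 2


def random_search(n_trials: int, seed: int = 0) -> tuple[dict, float]:
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {
            # Sample the learning rate on a log scale between 1e-5 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-5, -1),
            "batch_size": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = evaluate(**params)
        if loss < best_loss:  # keep the best configuration seen so far
            best_params, best_loss = params, loss
    return best_params, best_loss


params, loss = random_search(n_trials=30)
print(params, loss)
```

Thirty trials is a fixed budget, no matter how many combinations the space contains. That budget is what makes this approach scale better than trying values by hand or exhaustively.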

I put together a couple of examples for you.

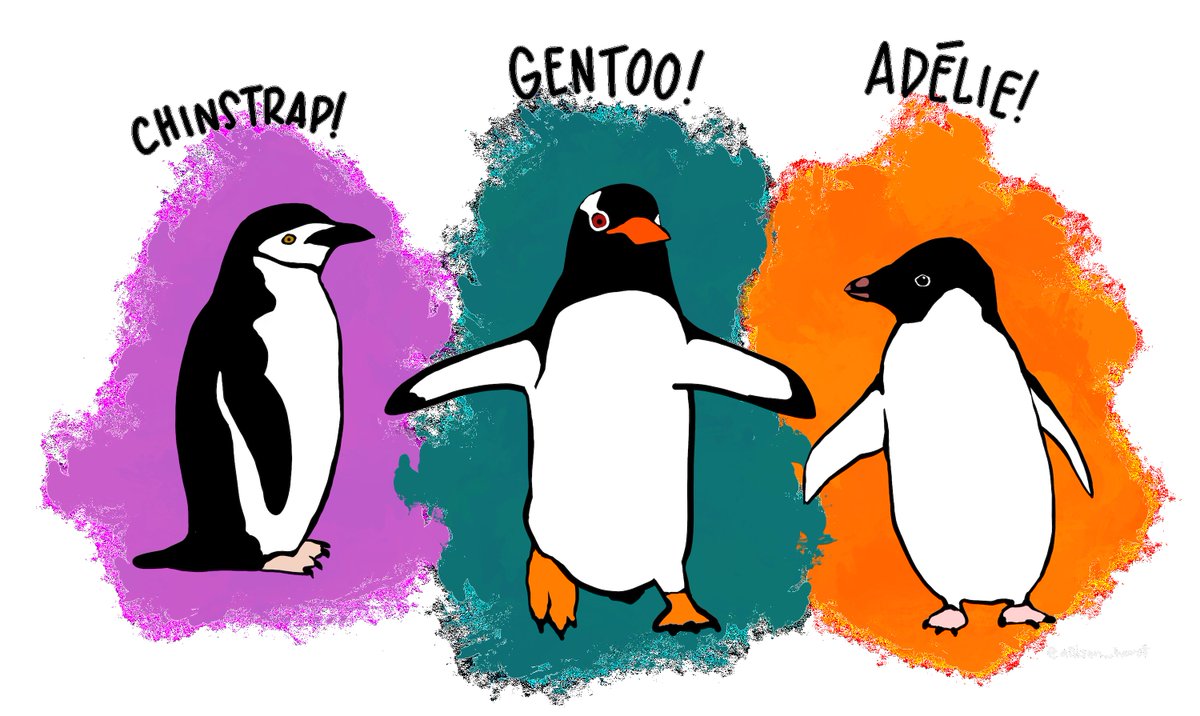

The first example uses KerasTuner to train a neural network on the Penguins dataset.

Here is the notebook: deepnote.com/@svpino/Keras-…

The second example uses Optuna and shows how to tune the hyperparameters of an XGBRegressor and a CatBoostRegressor model.

Here is the notebook: deepnote.com/@svpino/Tuning…

(This is part of the work I had to do during my first Kaggle competition.)

By the way, if you aren't using @DeepnoteHQ already, you should definitely check it out!

You can create a free account and give your code some VIP treatment.

You can put together a collection of published notebooks that look beautiful.

If you are using TensorFlow and Keras, KerasTuner is the way to go.

In this example, I'm optimizing 3 hyperparameters:

• learning rate

• number of units in the first hidden layer

• number of units in the second hidden layer

Here is the KerasTuner documentation: keras.io/keras_tuner/

I only learned about Optuna a few weeks ago.

Its API is really simple to understand, and the resulting code is clean and organized.

I've added Optuna to my toolset, and I plan to keep using it.

Here is their documentation: optuna.org

Bottom line: Tuning hyperparameters with these tools is dead easy.

Even better: these tools can search the space smartly, using the results of earlier trials to decide what to try next. They don't need to try every combination!

This makes the process cleaner, faster, and better than trying by hand.

Let's recap:

• Stop tuning hyperparameters manually.

• Check out KerasTuner if you are using TensorFlow.

• Check out Optuna as well.

Two examples for you:

1. deepnote.com/@svpino/Keras-…

2. deepnote.com/@svpino/Tuning…

I post threads like this every Tuesday and Friday.

Follow me @svpino for practical tips and stories about my experience with machine learning.

And if you don’t want to miss any of these threads, subscribe to my newsletter (link in my profile), and I’ll email them to you.

Optuna isn't tied to any specific library or framework, so you can use it with TensorFlow, PyTorch, or scikit-learn models.

If you are using Keras, however, I'd recommend you look into KerasTuner instead.

https://twitter.com/ed_rockkk/status/1433759186635001862?s=20

Anything that the training process updates, we call "parameters." For example, the weights and biases of a neural network.

Anything that we set ourselves, and that doesn't change during training, we call a "hyperparameter." For example, the learning rate.
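Here is a small sketch of the distinction. It assumes a one-feature linear model y = w * x + b, trained with plain gradient descent on made-up data generated from y = 2x + 1. `learning_rate` and `epochs` are hyperparameters we choose up front, while `w` and `b` are parameters the training loop updates. The function `fit_line` is hypothetical.

```python
def fit_line(xs: list[float], ys: list[float], learning_rate: float, epochs: int) -> tuple[float, float]:
    """Hypothetical helper: fit y = w * x + b by gradient descent on mean squared error."""
    # Parameters: initialized here, then changed by training.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):  # hyperparameter: number of passes over the data
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Hyperparameter: learning_rate scales every update but is never updated itself.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # made-up data from y = 2x + 1
w, b = fit_line(xs, ys, learning_rate=0.05, epochs=2000)
print(f"w={w:.3f}, b={b:.3f}")
```

Training recovers w ≈ 2 and b ≈ 1. Tuning, by contrast, would mean rerunning `fit_line` with different `learning_rate` or `epochs` values and comparing the results.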

https://twitter.com/panthadeep1/status/1433799328477319174?s=20