Imagine I tell you this:

"The probability of a particular event happening is zero."

Contrary to what you may think, this doesn't mean that this event is impossible. In other words, events with 0 probability could still happen!

This seems contradictory. What's going on here?

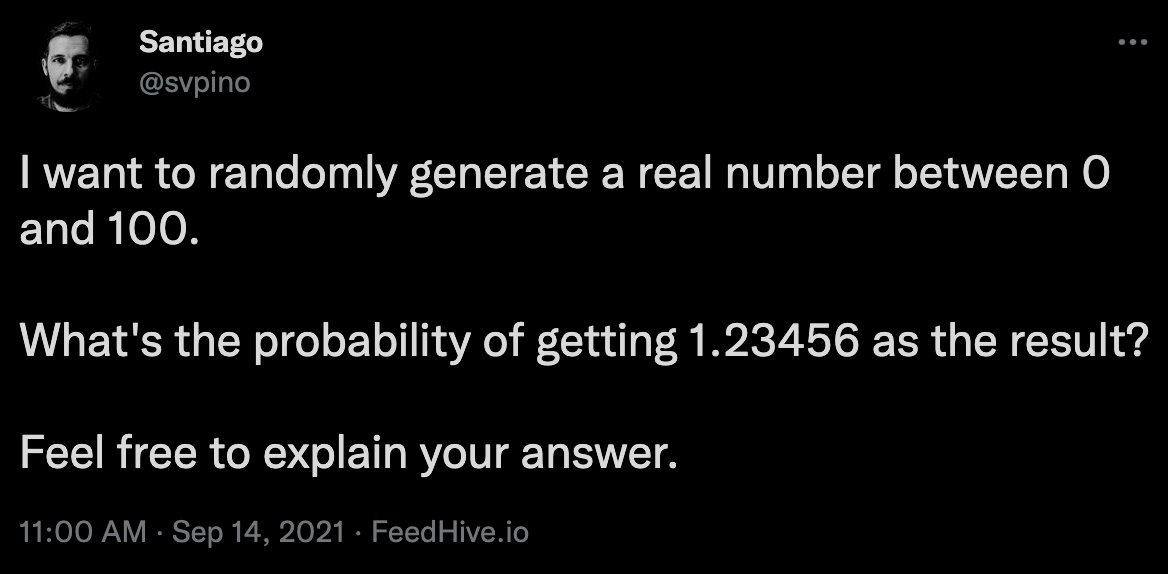

Yesterday, I asked the question in the attached image.

Hundreds of people replied. Many of the answers followed the same logic:

"The probability can't be zero because that would mean that the event can't happen."

This, however, is not true.

Let's start with something that we know:

Impossible outcomes always have a probability of 0.

This means that the probability of an event that can't happen is always zero.

Makes sense. But the converse is not necessarily true: an event with a probability of 0 isn't always impossible!

If we limit the space of possible outcomes to a finite number of possibilities, we can treat any outcome with a probability of zero as impossible.

But what happens if we look into an infinite space of outcomes?

Here is an example: Draw a random real number between 0 and 1.

Possible outcomes? Infinite.

What's the probability of drawing any specific number?

Well, every number is equally likely and we have ∞ possibilities, therefore:

P(drawing a specific number) = 1/∞

Here is where it gets interesting.

Infinity is not a number! We can't simply do regular arithmetic with ∞.

We can reason about 1/∞ by using limits:

lim(𝑥 → ∞) 1/𝑥 = 0

In English: "1/𝑥 tends to 0 as 𝑥 grows towards infinity." In other words, as 𝑥 grows large, our probability grows really small.
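
Here's a minimal sketch of that limit in Python. It assumes we approximate the interval with a grid of n equally likely points, so each point gets a probability of 1/n. The function name `point_probability` is mine, purely for illustration.

```python
from fractions import Fraction


def point_probability(n: int) -> Fraction:
    """Probability of one specific outcome among n equally likely outcomes.

    Illustrative helper (an assumption, not part of the original thread).
    """
    if n <= 0:
        raise ValueError("n must be a positive integer")
    return Fraction(1, n)


# Use finer and finer grids and watch the per-point probability shrink.
for exponent in (1, 3, 6, 12):
    n = 10 ** exponent
    print(f"n = 10^{exponent}: P = {float(point_probability(n))}")
```

The finer the grid, the smaller the number, but for any finite n it never actually reaches zero. That's why the limit alone doesn't settle the question.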

This is good, but there's something missing: what's the probability then?

To properly compute the probability in a continuous context, we need to look into probability densities.

I won't do that here, but trust me when I simplify things, unsurprisingly, by saying:

P(drawing a specific number) = 1/∞ = 0
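
To give a flavor of how densities settle this, here's a rough sketch assuming the draw is uniform on [0, 1]. Under that assumption the density is 1 across the interval, so the probability of landing in a range is just the length of that range. The helper `uniform_interval_probability` is a made-up name for illustration.

```python
def uniform_interval_probability(a: float, b: float) -> float:
    """P(a <= X <= b) for X uniform on [0, 1].

    Assumption: the density is 1 on [0, 1] and 0 elsewhere, so the
    probability is the length of the overlap between [a, b] and [0, 1].
    """
    lo, hi = max(a, 0.0), min(b, 1.0)
    return max(hi - lo, 0.0)


target = 0.5

# Shrink an interval around the target: the probability shrinks with it.
for width in (0.1, 0.001, 0.00001):
    p = uniform_interval_probability(target - width / 2, target + width / 2)
    print(f"width {width:g}: P = {p:.6g}")

# A single point is an interval of width zero.
print(uniform_interval_probability(target, target))  # prints 0.0
```

An interval has a positive probability no matter how narrow it is. A single point has no width at all, so its probability is exactly zero, not just very small.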

Therefore, the probability of getting any specific outcome from a continuous (uncountably infinite) space of equally likely outcomes is zero.

It doesn't mean that the probability is really small. It doesn't mean that the probability is close to zero.

It means that the probability is zero.

Whenever we are looking at an infinite space of outcomes, we say the following:

"An event with zero probability implies that the event will almost never happen."

This is accurate. Saying that the event will never happen (or is impossible) is not.
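
A small experiment makes the point, with one caveat: a computer can't draw true real numbers. Python's `random.random()` picks from a huge but finite set of floats, so this only approximates the idealized draw, and the probabilities here are tiny rather than exactly zero. `count_exact_hits` is a hypothetical helper I'm using for illustration.

```python
import random


def count_exact_hits(target: float, draws: int, seed: int = 0) -> int:
    """Count how many of `draws` pseudo-random floats equal `target` exactly.

    Illustrative helper (an assumption). Floats only approximate the
    real numbers in [0, 1).
    """
    rng = random.Random(seed)
    return sum(1 for _ in range(draws) if rng.random() == target)


# Every draw produces *some* specific number...
rng = random.Random(42)
x = rng.random()
print(f"drew {x!r}")

# ...yet picking a number in advance and waiting for it is a long wait.
hits = count_exact_hits(0.5, 100_000)
print(f"exact hits on 0.5 in 100,000 draws: {hits}")
```

Whatever number comes out of the first draw, it was an outcome that had (in the idealized model) a probability of zero before we drew it. It happened anyway.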

I'm not a mathematician.

This thread aims to illustrate probabilities on an infinite space of outcomes using an informal explanation.

For those looking for a more formal approach, I'd recommend reading about probability density and how it's used in a continuous context.

Every week, I post 2 or 3 threads like this, breaking down machine learning concepts and giving you ideas on applying them in real-life situations.

You can find more of these at @svpino.

If you find this helpful, stay tuned: a lot more is coming.
