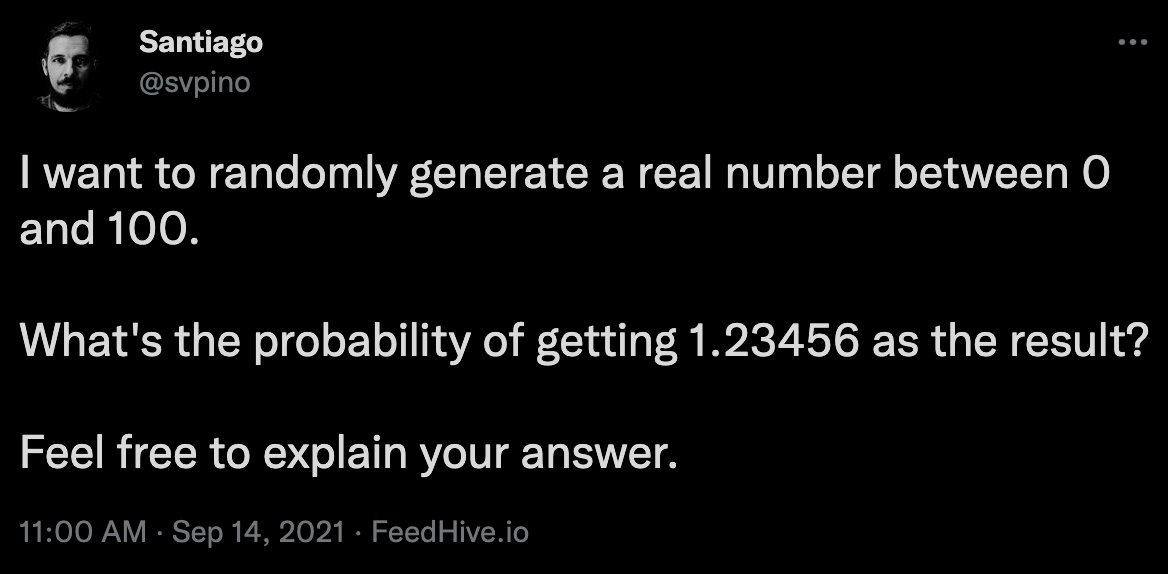

Antoine Gombaud was born in France in 1607.

Despite not being a nobleman, he called himself "Chevalier De Méré" and spent his days like any other writer and philosopher of his time.

But the Chevalier liked gambling and was obsessed with the probabilities behind his dice games.

One day he started losing money unexpectedly.

He had to choose between two bets:

1. Getting at least one six with four throws of a die, or

2. Getting at least one double six with 24 throws of a pair of dice.

He believed both had equal probabilities, but luck kept eluding him. 🤦

This is how Méré thought about this problem:

1. Chance of getting a six in one roll: 1/6

2. Average number of sixes in four rolls: 4(1/6) = 2/3

3. Chance of getting a double six in one roll of a pair: 1/36

4. Average number of double sixes in 24 rolls: 24(1/36) = 2/3
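
Méré's arithmetic can be reproduced with Python's standard `fractions` module. The sketch below is only an illustration, and its variable names are made up for it. It confirms that both results really are 2/3. However, what he computed is the expected number of successes (the average count of sixes or double sixes), not the chance of seeing at least one.

```python
from fractions import Fraction

# Méré's rule: multiply the single-roll chance by the number of rolls.
# The result is the *expected number* of successes, not a probability.
p_six = Fraction(1, 6)          # a six with one die
p_double_six = Fraction(1, 36)  # a double six with a pair of dice

expected_sixes = 4 * p_six                # average sixes in 4 rolls
expected_double_sixes = 24 * p_double_six  # average double sixes in 24 rolls

print(expected_sixes, expected_double_sixes)  # 2/3 2/3

# The same rule applied to 7 rolls gives 7/6, which is more than 1.
print(7 * p_six)  # 7/6
```

Since a probability can never exceed 1, the seven-roll case shows this rule is measuring something else.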

Then, why was he losing money?

Méré enlisted two famous mathematicians to help him with the mystery.

Blaise Pascal and Pierre de Fermat took up the challenge.

After several letters, they solved the riddle and laid the foundations of modern probability theory.

Méré thought both choices had the same probability, but they don't.

To understand the problem, let's analyze each option separately.

Let's solve for getting at least one 6 with 4 throws of a die:

1. Chance of not getting a 6 in one roll: 5/6.

2. If we roll 4 times, the chance of not getting a 6: (5/6)^4

3. Chance of getting at least one 6: 1 - (5/6)^4 ≈ 0.52.
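
The complement rule used above translates directly into code. This is a minimal sketch with exact fractions. It assumes the four throws are independent, and its variable names are illustrative only.

```python
from fractions import Fraction

# Complement rule: P(at least one six) = 1 - P(no six in any of the 4 rolls).
p_no_six = Fraction(5, 6)        # one roll without a six
p_no_six_in_4 = p_no_six ** 4    # independent rolls, so the chances multiply
p_at_least_one_six = 1 - p_no_six_in_4

print(p_at_least_one_six)                   # 671/1296
print(round(float(p_at_least_one_six), 4))  # 0.5177
```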

Let's look at the second option now.

Getting at least one double 6 with 24 throws of a pair of dice:

1. Chance of not getting a double 6 in one roll: 35/36

2. If we roll 24 times, the chance of not getting a double 6: (35/36)^24

3. Chance of getting at least one double 6 with 24 rolls: 1 - (35/36)^24 ≈ 0.49.
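
The same complement rule, applied to a pair of dice, gives the second bet's odds. The loop at the end is an extra illustration, not part of the original argument. It searches for the smallest number of throws at which the double-six bet becomes better than even.

```python
from fractions import Fraction

# Complement rule for a pair of dice over 24 independent throws.
p_no_double_six = Fraction(35, 36)    # one throw of the pair without a double six
p_none_in_24 = p_no_double_six ** 24
p_at_least_one_double_six = 1 - p_none_in_24

print(round(float(p_at_least_one_double_six), 4))  # 0.4914

# Smallest number of throws for which P(at least one double six) > 1/2.
n = 1
while 1 - p_no_double_six ** n <= Fraction(1, 2):
    n += 1
print(n)  # 25
```

Under these assumptions the loop stops at 25, so with 24 throws the bet sits just below even odds.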

The two bets that Méré considered equal actually have probabilities of about 0.52 and 0.49, respectively.
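
The exact numbers can also be checked empirically. Below is a rough Monte Carlo sketch using Python's `random` module. The helper names, trial count, and seed are arbitrary choices for illustration. With enough trials, the two estimates should land near 0.52 and 0.49, which suggests the gap is real rather than bad luck.

```python
import random
from typing import Callable


def roll_die(rng: random.Random) -> int:
    """Return one fair six-sided die roll."""
    return rng.randint(1, 6)


def bet_one(rng: random.Random) -> bool:
    """Bet 1: win if at least one six shows up in four throws of one die."""
    return any(roll_die(rng) == 6 for _ in range(4))


def bet_two(rng: random.Random) -> bool:
    """Bet 2: win if at least one double six shows up in 24 throws of a pair."""
    return any((roll_die(rng), roll_die(rng)) == (6, 6) for _ in range(24))


def estimate(bet: Callable[[random.Random], bool], trials: int = 100_000, seed: int = 0) -> float:
    """Estimate a bet's winning probability as the fraction of simulated wins."""
    rng = random.Random(seed)  # seeded so reruns give the same estimate
    wins = sum(bet(rng) for _ in range(trials))
    return wins / trials


print(f"Bet 1 (one six in 4 throws):     {estimate(bet_one):.3f}")
print(f"Bet 2 (double six in 24 throws): {estimate(bet_two):.3f}")
```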

Losing money wasn't a matter of luck; it was a mistake in how he reasoned about his chances.

This problem is known today as "De Méré's Paradox."

As for Antoine, sure, he didn't lose any more money on that game, but that didn't make him any less annoying.

He later claimed that he had discovered probability theory himself, and that mathematics was inconsistent.

Yeah, that didn't bode well for him.

Every week, I post 2 or 3 threads like this, breaking down interesting concepts and giving you ideas on applying them in real-life situations.

You can find more of these at @svpino.

If you find this helpful, stay tuned: a lot more is coming.
