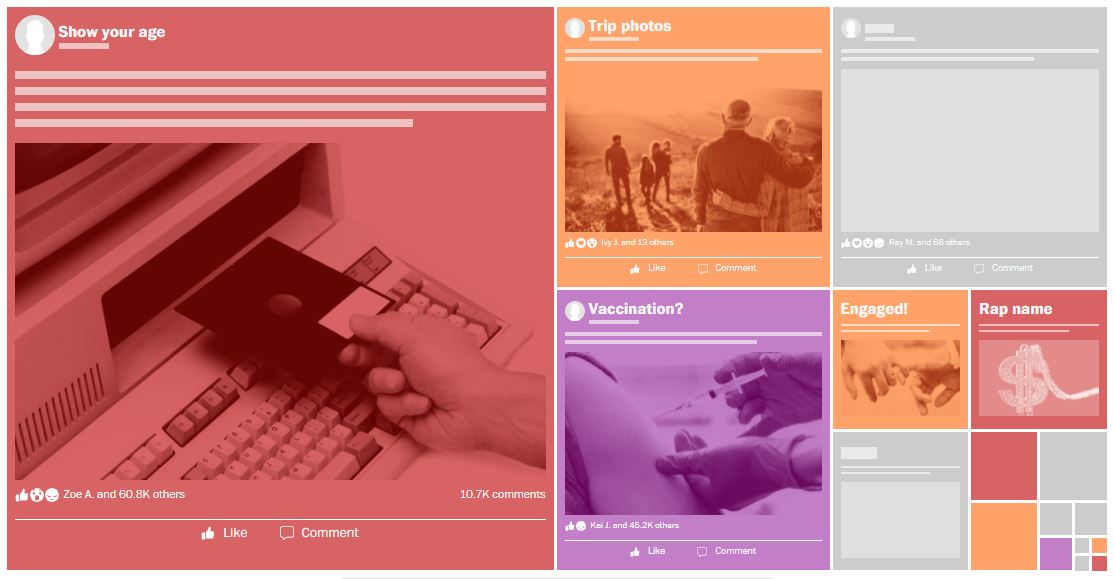

Most political parties in Poland have complaints about Facebook’s algorithms, the obscure formulas that decide which posts pop up on a user’s news feed and which fade into the ether.

The far-right Confederation party does not. wapo.st/3GvqiDC

The Confederation’s content generally does well, including a slew of anti-lockdown, anti-immigration, vaccine-skeptic posts often punctuated with large red exclamation marks.

It’s a “hate algorithm,” said the head of the party’s social media team. wapo.st/3GvqiDC

That Facebook might be amplifying outrage, while driving polarization and elevating more extreme parties around the world, has been ruminated on inside the company for years, according to the internal documents known as the Facebook Papers. wapo.st/3GvqiDC

In one April 2019 document detailing a research trip to the European Union, a Facebook team reported feedback from European politicians that an algorithm change the previous year had changed politics “for the worse.” wapo.st/3GvqiDC

That change was billed by Facebook’s chief executive Mark Zuckerberg as an effort to foster more “meaningful” interactions on the platform.

The team reported back specific concerns from Poland, where parties had described a “social civil war” online. wapo.st/3GvqiDC
