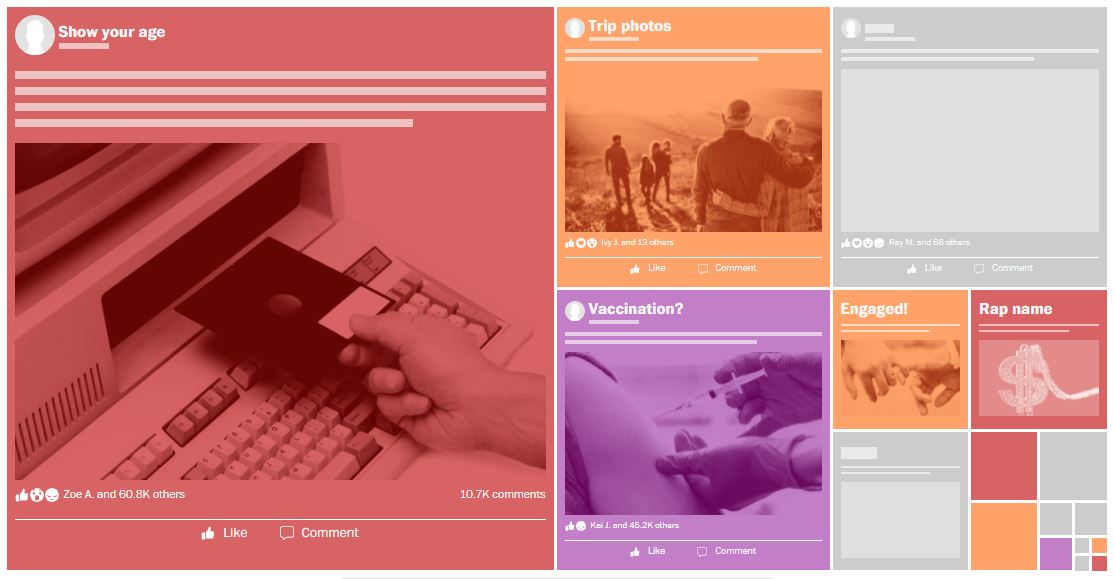

Facebook researchers had deep knowledge of how coronavirus and vaccine misinformation moved through the company’s apps, according to documents disclosed by Facebook whistleblower Frances Haugen. wapo.st/3mmERBx

But even as academics, lawmakers and the White House urged Facebook for months to be more transparent about the misinformation and its effects on the behavior of its users, the company refused to share much of this information publicly.

From August: washingtonpost.com/technology/202…

Internally, Facebook employees showed that coronavirus misinformation was dominating small sections of its platform.

Other researchers documented how posts by medical authorities, like the WHO, were often swarmed by anti-vaccine commenters. wapo.st/3mmERBx

Documents show how extensively Facebook was studying coronavirus and vaccine misinformation on its platform, unearthing findings that concerned its own employees.

Yet executives focused on more positive aspects of the social network’s pandemic response. wapo.st/3mmERBx
